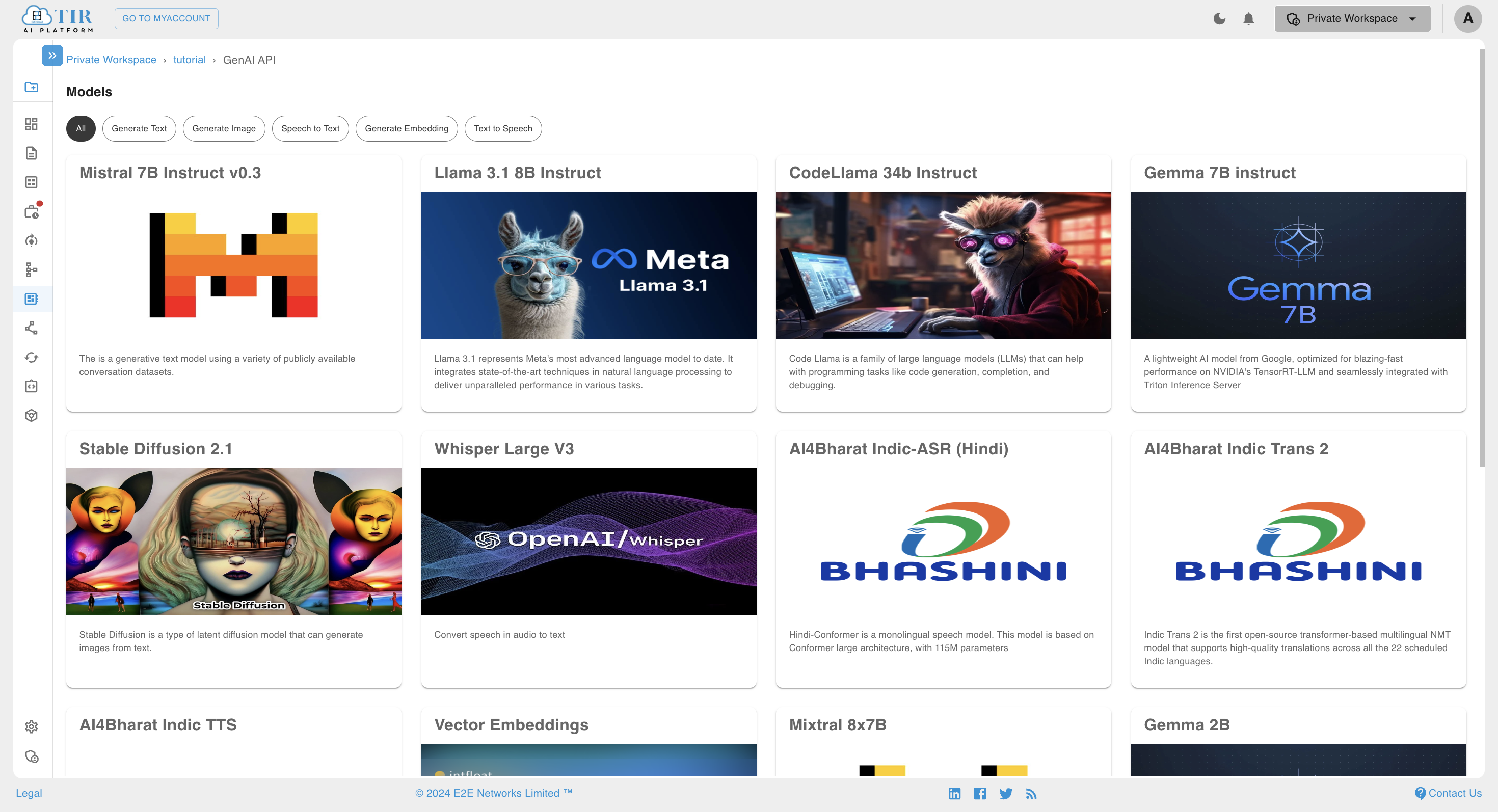

Generative AI API

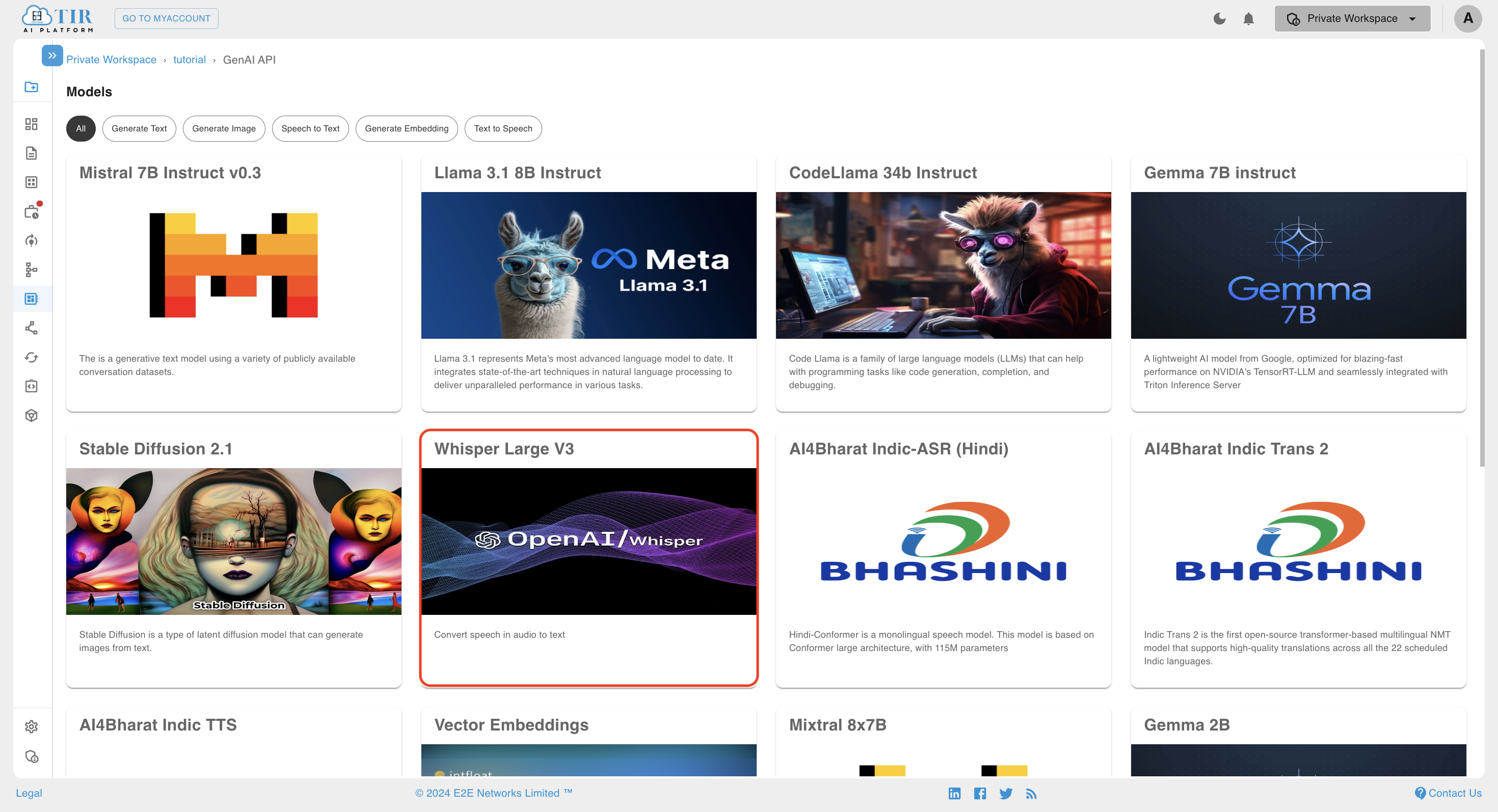

TIR's Generative AI (GenAI) API offers a suite of ready-to-use inference endpoints, enabling seamless interaction with advanced models for text-to-text, speech-to-text, embeddings, and text-to-speech tasks. These APIs provide developers with the tools to easily integrate generative AI capabilities into their applications.

Key Features

- Cost Effectiveness: You're only charged for the requests you make, allowing you to control expenses while scaling according to your needs.

- Ready to Use: GenAI APIs are always available and can be accessed anytime, providing instant integration into your applications with no commitments or long-term contracts. Use them as needed without worrying about setup or lock-in periods.

- Playground: Test and explore model capabilities directly on TIR's UI in the Playground section after selecting the model.

Note: Billing parameters may vary based on the model you use. For more information on pricing, select the model you are interested in and refer to the usage section.

Quick Start

- How to Integrate GenAI API with Your Application?

- Generating Token to Access GenAI Models

- How to Check Usage & Pricing of GenAI API?

How to Integrate GenAI API with Your Application?

Integration methods vary depending on the model you use. Detailed steps for each model can be found in the Playground or API section.

Available Integration Options:

- REST API: This method is compatible with all models. Use the REST API endpoints for straightforward integration.

- OpenAI SDK: All LLM (text generation) models on TIR GenAI, such as Llama and Mistral, are OpenAI compatible. If your application uses the OpenAI SDK, you can integrate by updating OPENAI_BASE_URL and OPENAI_API_KEY. Start with the sample code provided on TIR's UI.

- TIR SDK: This is a dedicated SDK for launching and managing services on E2E Networks' TIR platform.

We will go through all the integration options in this document. To access the model, you need to create a token.
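For an application that already uses the OpenAI SDK, updating OPENAI_BASE_URL and OPENAI_API_KEY could look roughly like the sketch below. The OpenAI Python SDK (v1 and later) reads both variables when a client is created without arguments. The URL shown is a placeholder, not a real TIR endpoint, and `configure_openai_env` is a hypothetical helper written for this illustration; take the actual base URL from the sample code on TIR's UI.

```python
import os


def configure_openai_env(base_url: str, auth_token: str) -> None:
    """Point OpenAI-SDK-based code at an OpenAI-compatible endpoint.

    Hypothetical helper for illustration only. It sets the two
    environment variables that the OpenAI Python SDK reads by default.
    """
    if not base_url or not auth_token:
        raise ValueError("both base_url and auth_token are required")
    os.environ["OPENAI_BASE_URL"] = base_url
    os.environ["OPENAI_API_KEY"] = auth_token


if __name__ == "__main__":
    # Placeholder values: replace them with the base URL and Auth Token
    # shown on TIR's UI.
    configure_openai_env("https://example.invalid/v1", "<your-auth-token>")
    print(os.environ["OPENAI_BASE_URL"])
```

Setting the variables in the process environment keeps the token out of the source code; exporting them in the shell before starting the application has the same effect.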

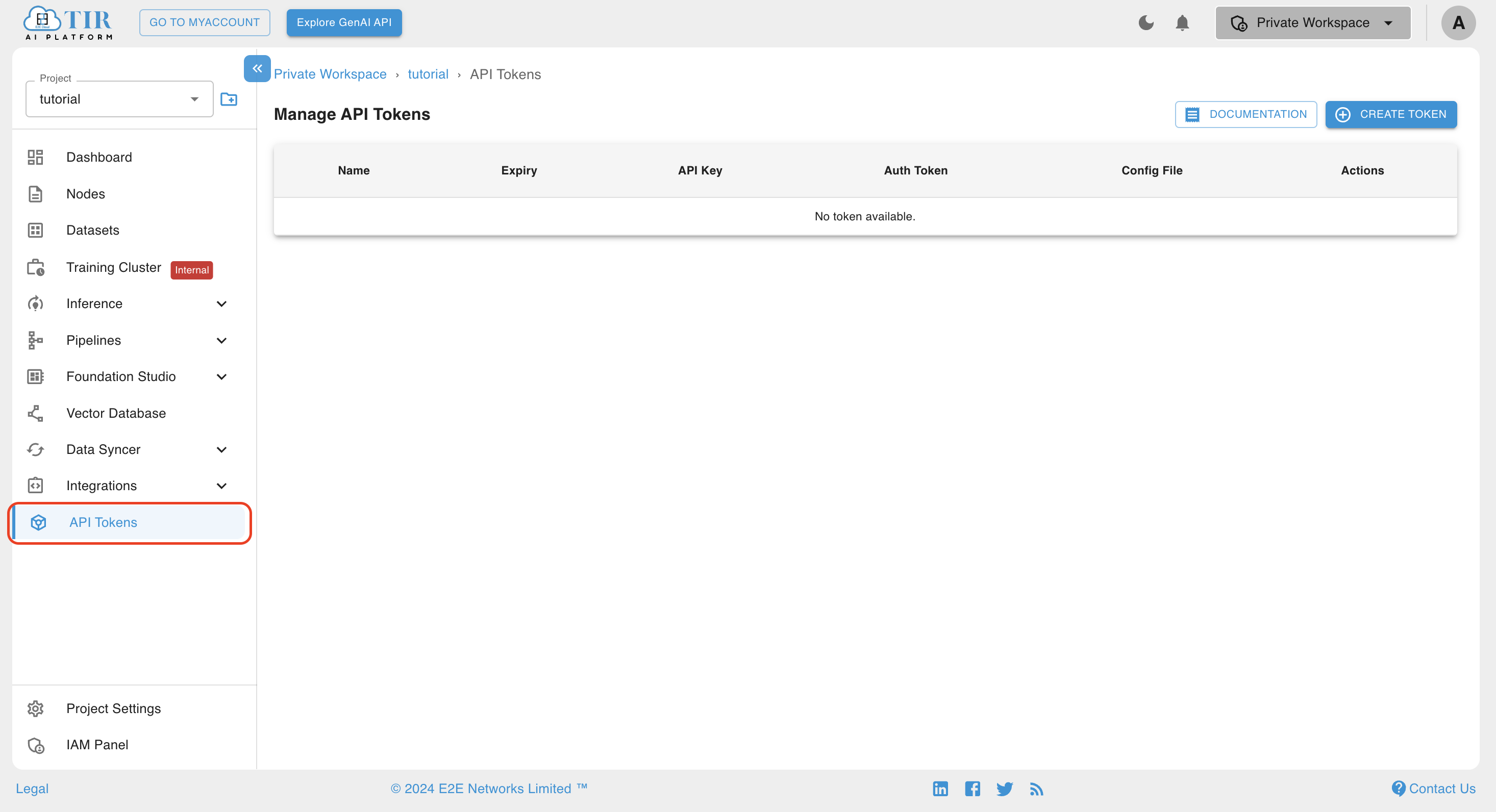

Generating Token To Access GenAI Models

-

Navigate to the API Token section on TIR's UI using the side navbar.

-

Create a new token.

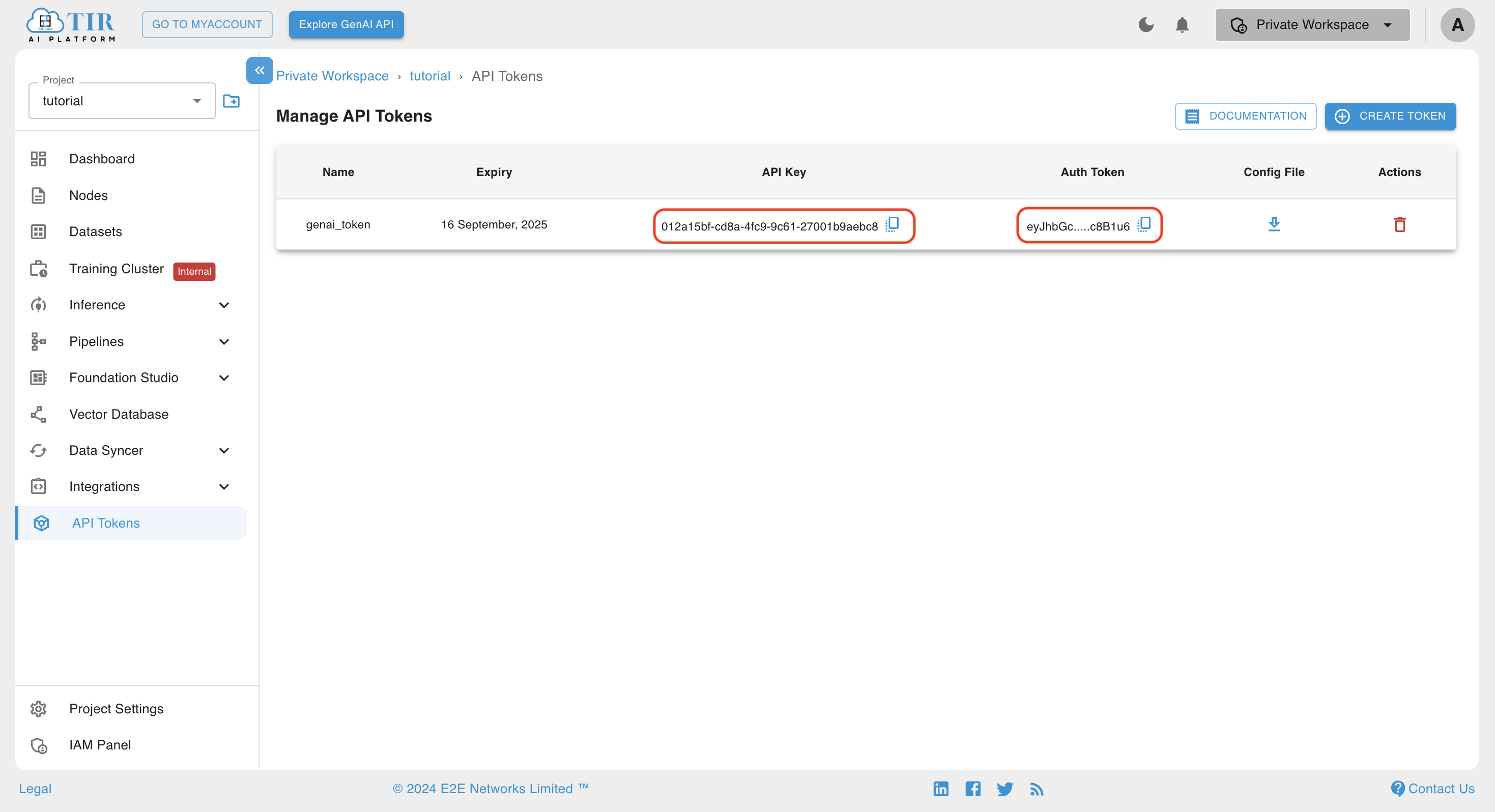

-

Copy the Auth Token and API Key.

Note:

OPENAI_API_KEYfor the OpenAI compatible model is the Auth Token copied in the previous step.

Accessing GenAI using REST API

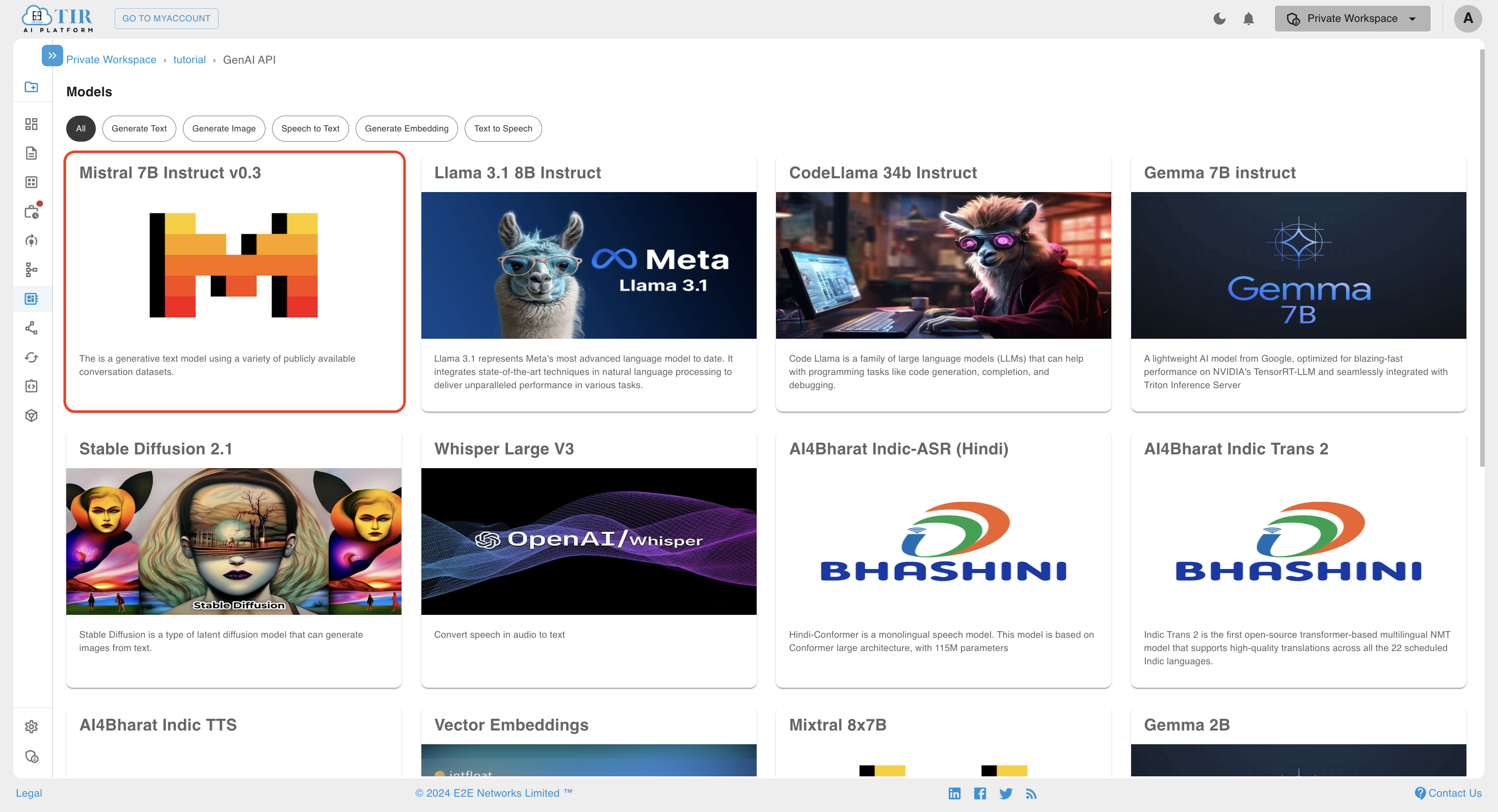

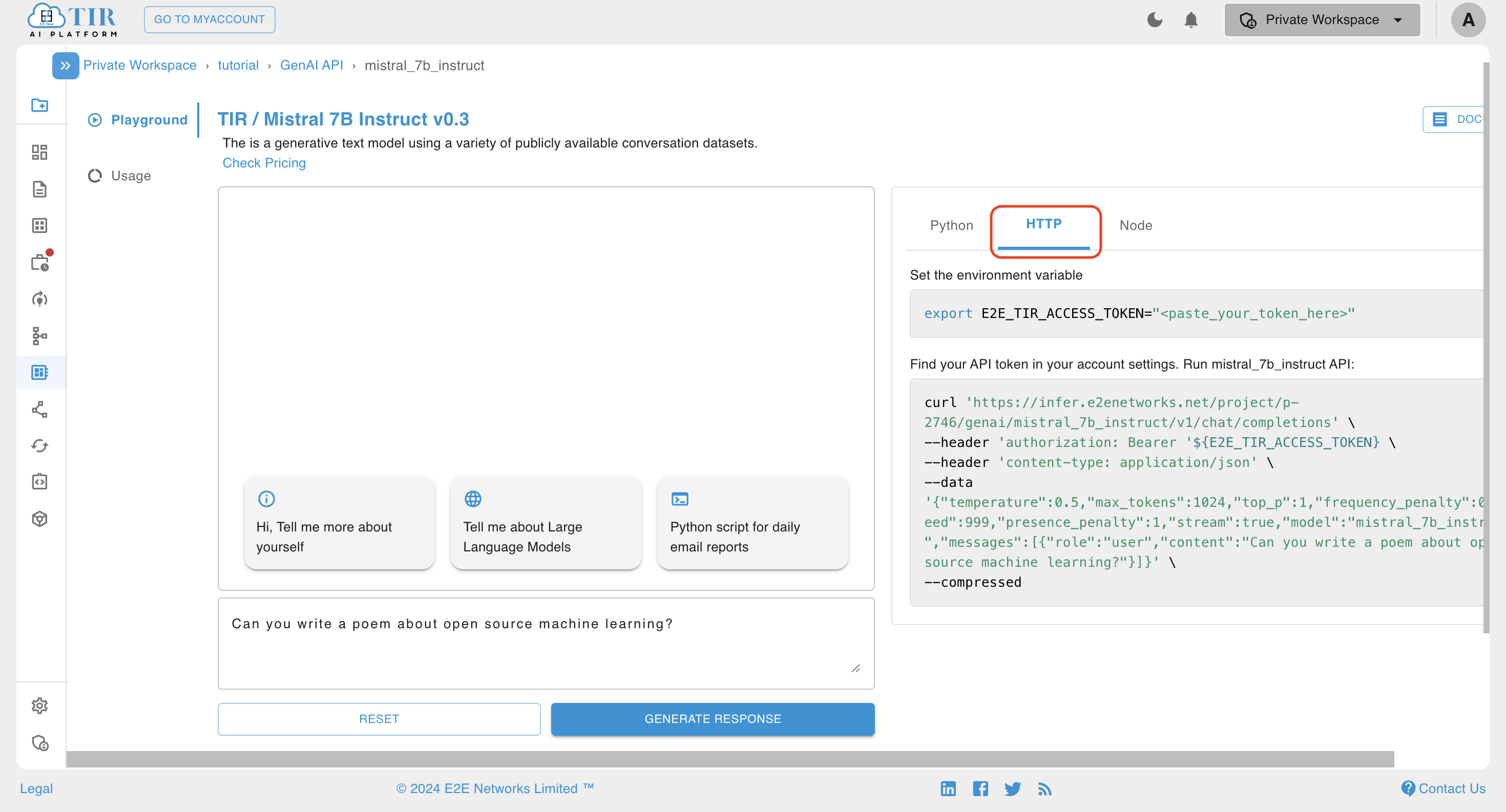

For this example, we will use the Mistral 7B Instruct v0.3 model. Mistral is a text generation large language model.

-

Select the Mistral 7B Instruct v0.3 card in the GenAI section.

-

Open the HTTP tab to find the cURL request for the model.

-

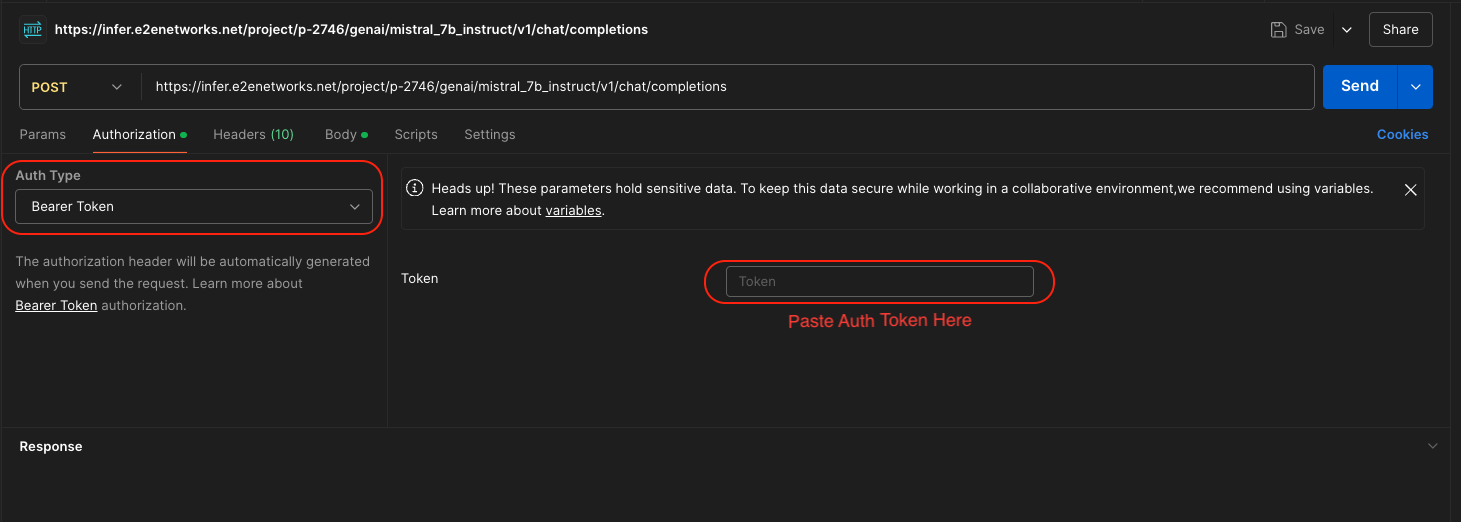

Copy the cURL request into any API testing tool. For this tutorial, we'll use Postman. Before making the request, add the Auth token (generated in the

Generating Token to Access GenAI Modelssection) to the Authorization Header.Note: For all API requests, the Auth token is of type Bearer.

-

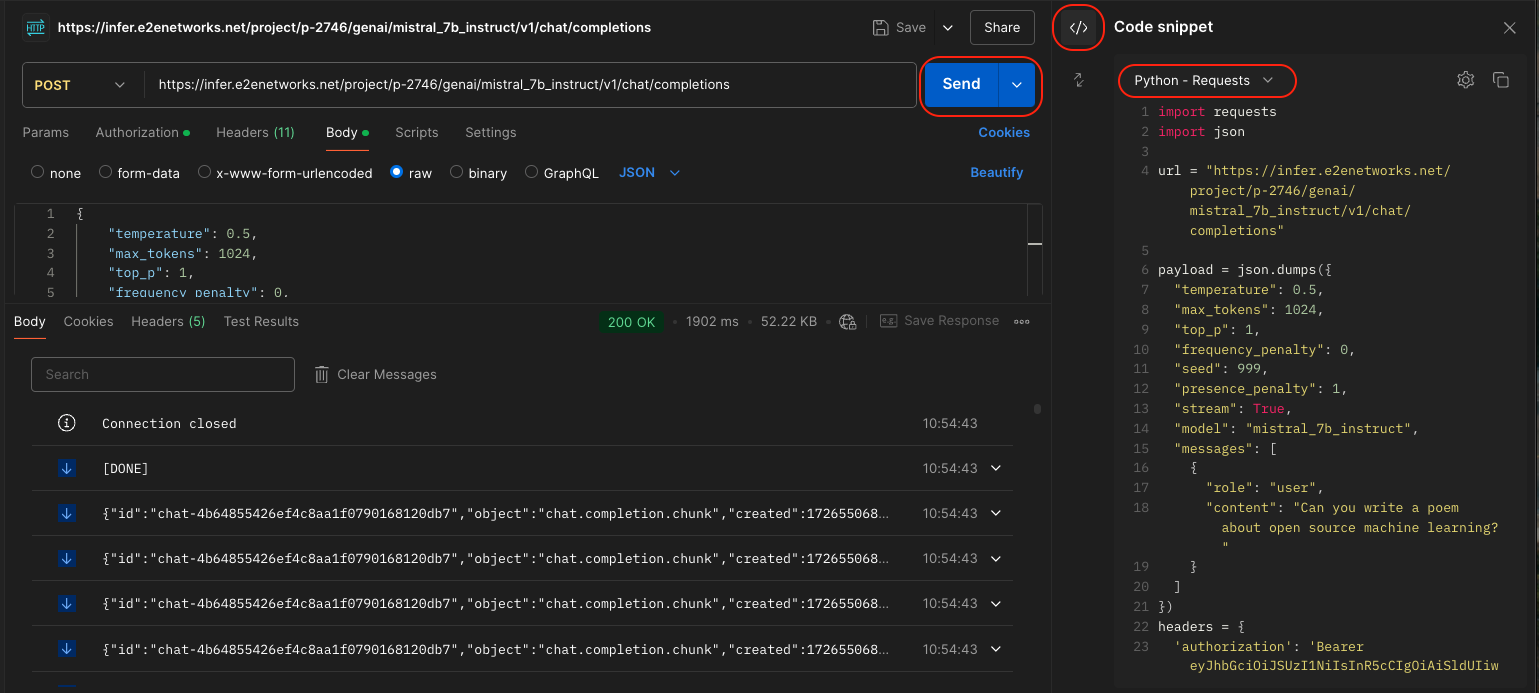

After adding the token, modify the payload based on your requirements and send the request. You can also generate sample code for various languages in Postman's Code section.

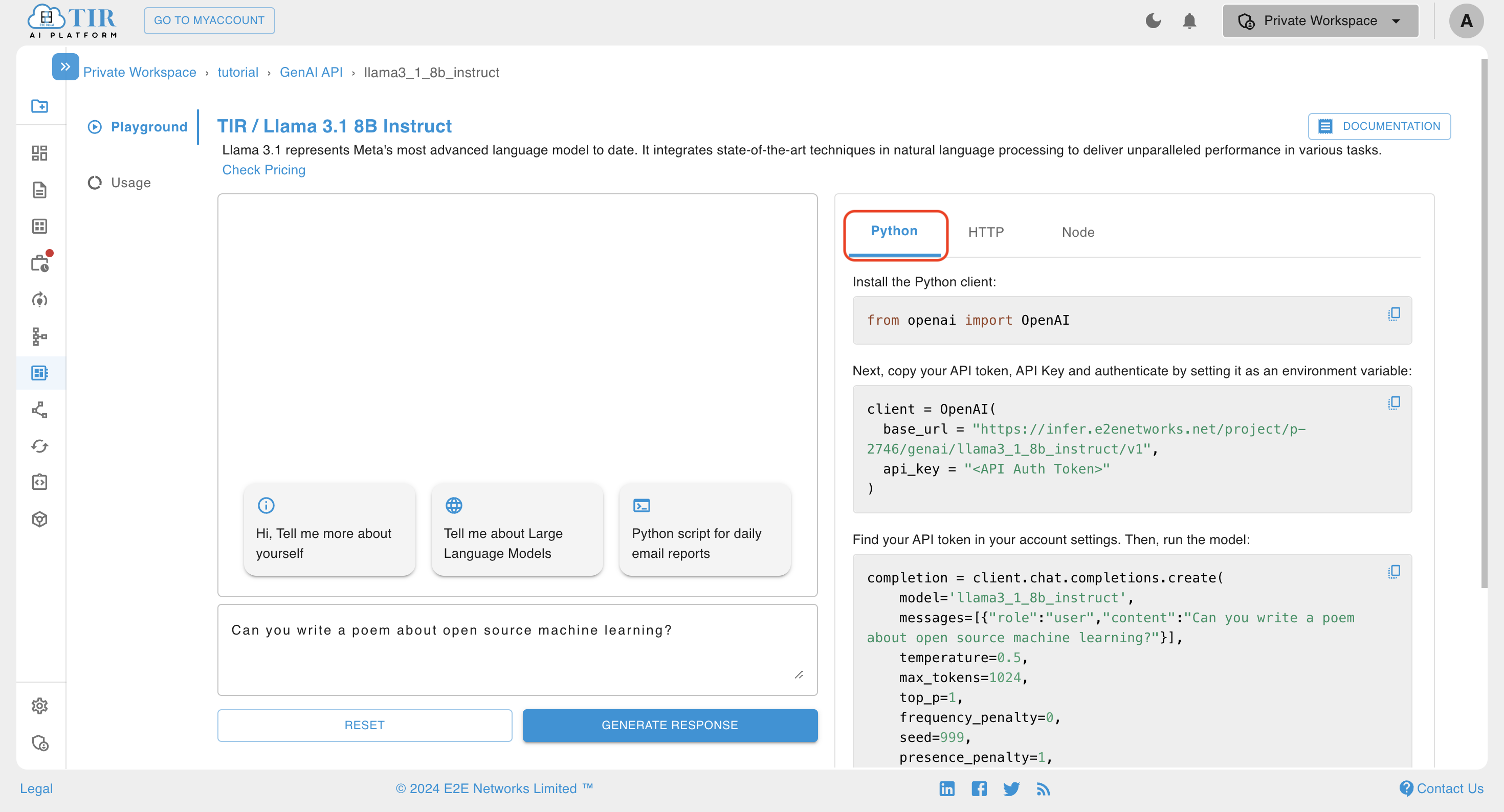
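The same request can be sketched in Python without Postman. The example below only builds the request so it can be inspected before anything is sent. The endpoint URL and model name are placeholders, and the chat-style payload is an assumption based on the models being OpenAI compatible; the authoritative URL and payload are the ones shown in the HTTP tab. `build_chat_request` is a hypothetical helper, not part of any TIR library.

```python
import json
import urllib.request


def build_chat_request(endpoint_url: str, auth_token: str, prompt: str,
                       model: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a Bearer Auth Token."""
    # Assumed OpenAI-style chat payload; adjust it to match the HTTP tab.
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {auth_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request(
        "https://example.invalid/v1/chat/completions",  # placeholder URL
        "<your-auth-token>",
        "Say hello in one sentence.",
        "<model-name-from-the-http-tab>",
    )
    print(request.get_method(), request.full_url)
    # To send it once the real URL and token are filled in:
    #   with urllib.request.urlopen(request) as response:
    #       print(json.load(response))
```

Keeping the build step separate from the send step makes it easy to verify the Authorization header and payload first.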

Accessing GenAI using OpenAI SDK

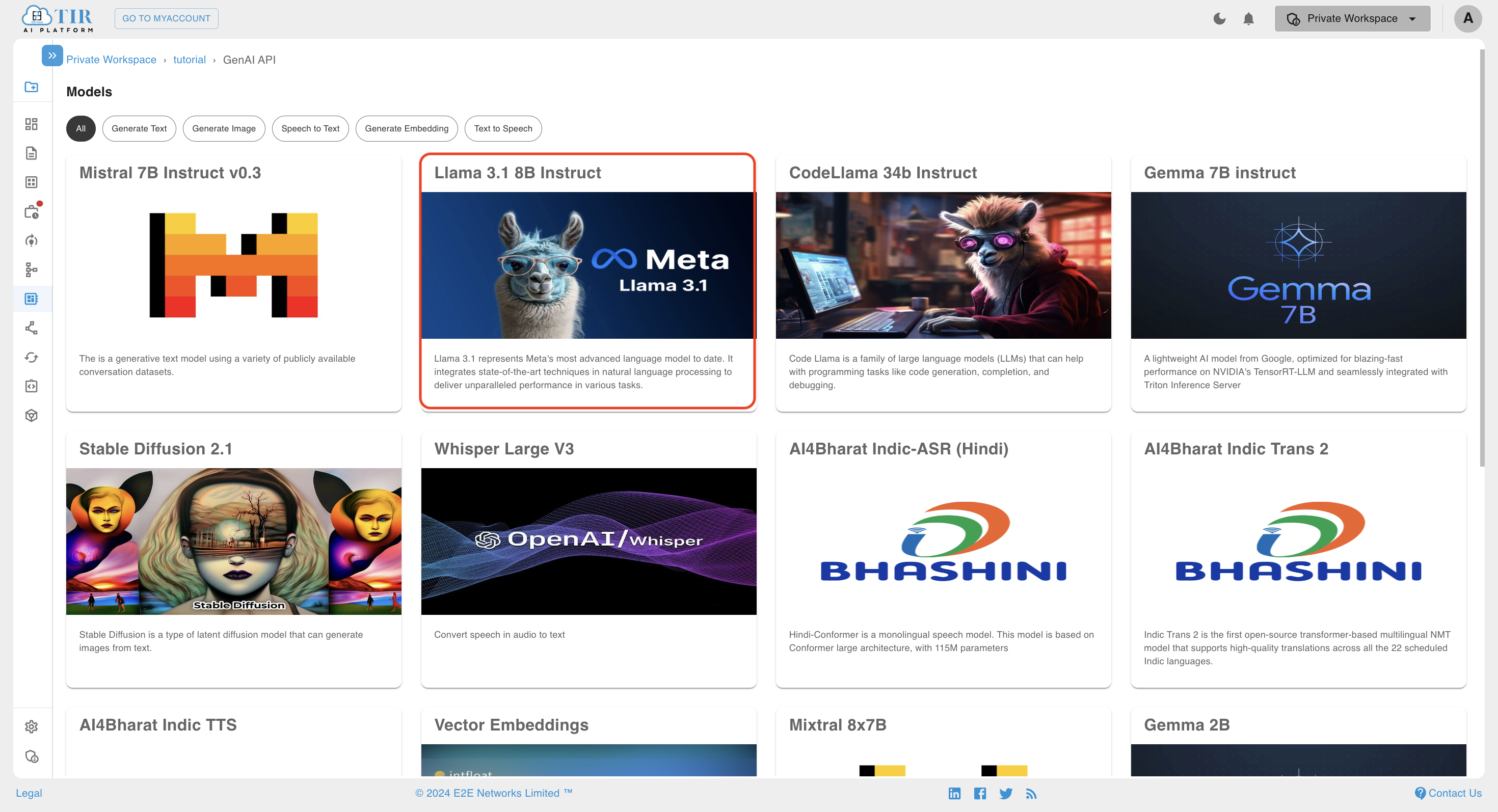

For this example, we will use the Llama 3.1 8B Instruct model. Llama is a text generation large language model.

- Select the Llama 3.1 8B Instruct card in the GenAI section.

- For this tutorial, we'll write the script in Python. Open the Python tab and copy the sample Python code.

- Install the OpenAI package using pip.

pip install -U openai
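With the package installed, a script that calls an OpenAI-compatible model might look like the following sketch. It is not the sample code from TIR's UI, which remains the reference. The base URL and model name must come from that sample code, and TIR_MODEL_NAME is a hypothetical environment variable introduced here only to avoid hard-coding a model identifier.

```python
import os


def build_messages(prompt: str) -> list:
    """Return a single-turn chat history in the OpenAI chat format."""
    return [{"role": "user", "content": prompt}]


def ask(prompt: str, base_url: str, auth_token: str, model: str) -> str:
    """Send one prompt through the OpenAI SDK and return the reply text."""
    # Imported lazily so the helpers above work without the package.
    from openai import OpenAI

    client = OpenAI(base_url=base_url, api_key=auth_token)
    completion = client.chat.completions.create(
        model=model,
        messages=build_messages(prompt),
    )
    return completion.choices[0].message.content


if __name__ == "__main__":
    base_url = os.environ.get("OPENAI_BASE_URL")
    auth_token = os.environ.get("OPENAI_API_KEY")  # the TIR Auth Token
    model = os.environ.get("TIR_MODEL_NAME")  # hypothetical variable name
    if base_url and auth_token and model:
        print(ask("What is generative AI?", base_url, auth_token, model))
    else:
        print("Set OPENAI_BASE_URL, OPENAI_API_KEY and TIR_MODEL_NAME first.")
```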

Accessing GenAI using TIR SDK

Step 1: Generate Token

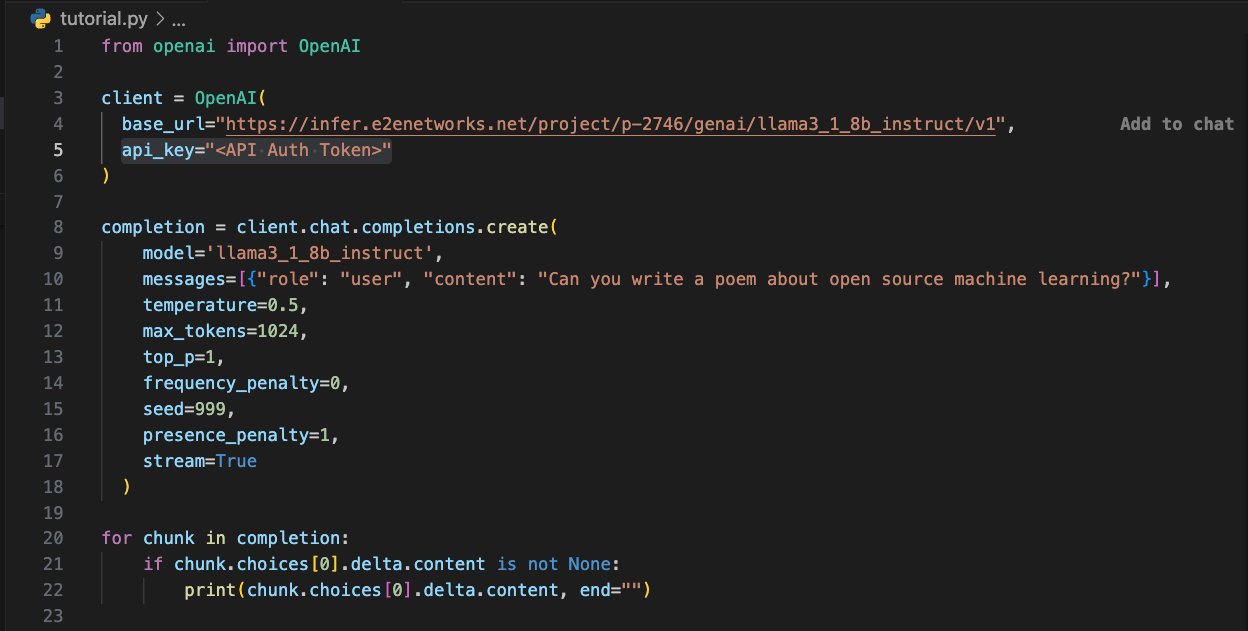

Paste the sample code in a Python file and change the value of the api_key parameter passed in the OpenAI client with the Auth Token generated in the Generating Token to Access GenAI Models section.

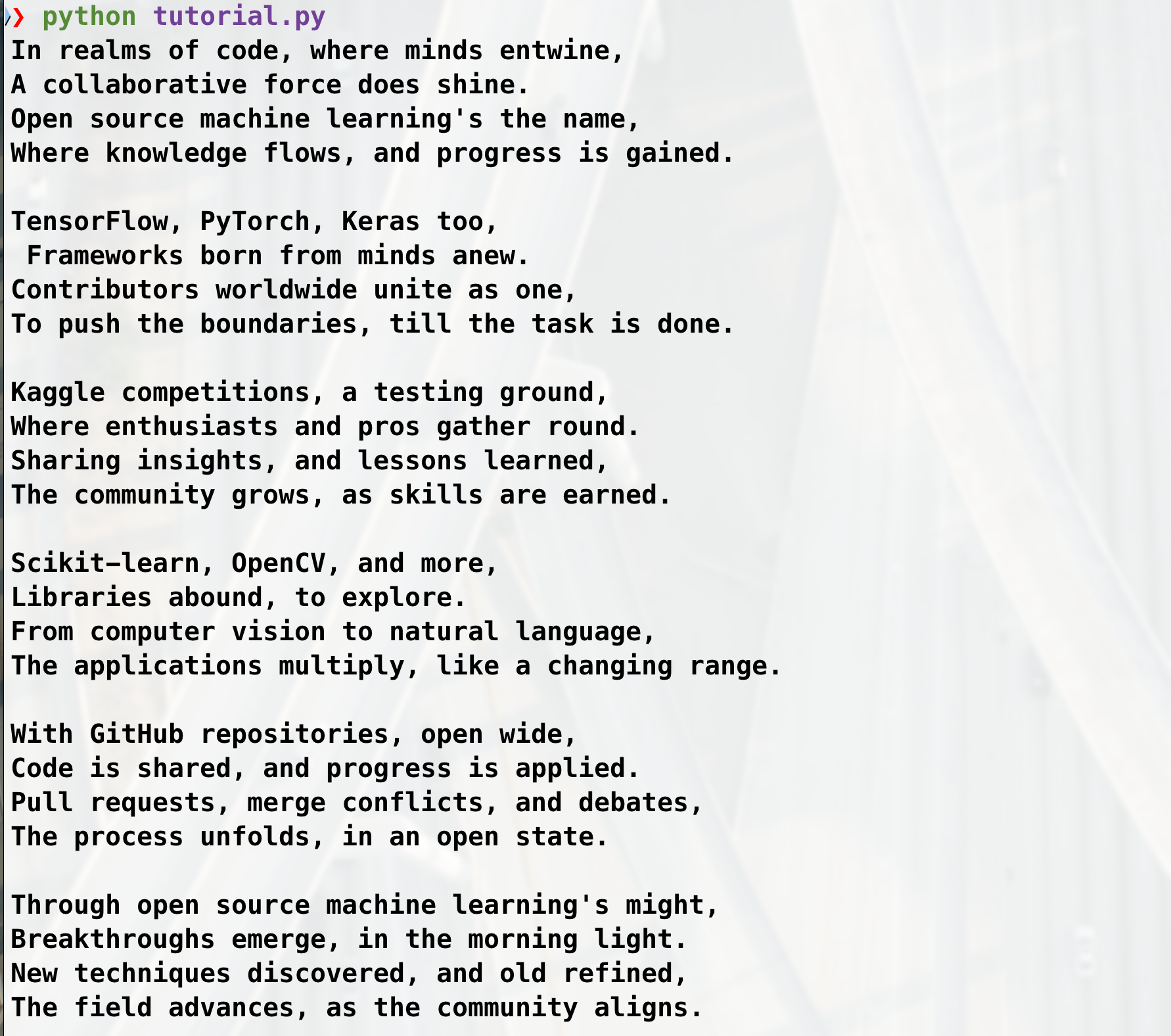

Step 2: Run the Python Script

Run the Python script, and the response will be printed.

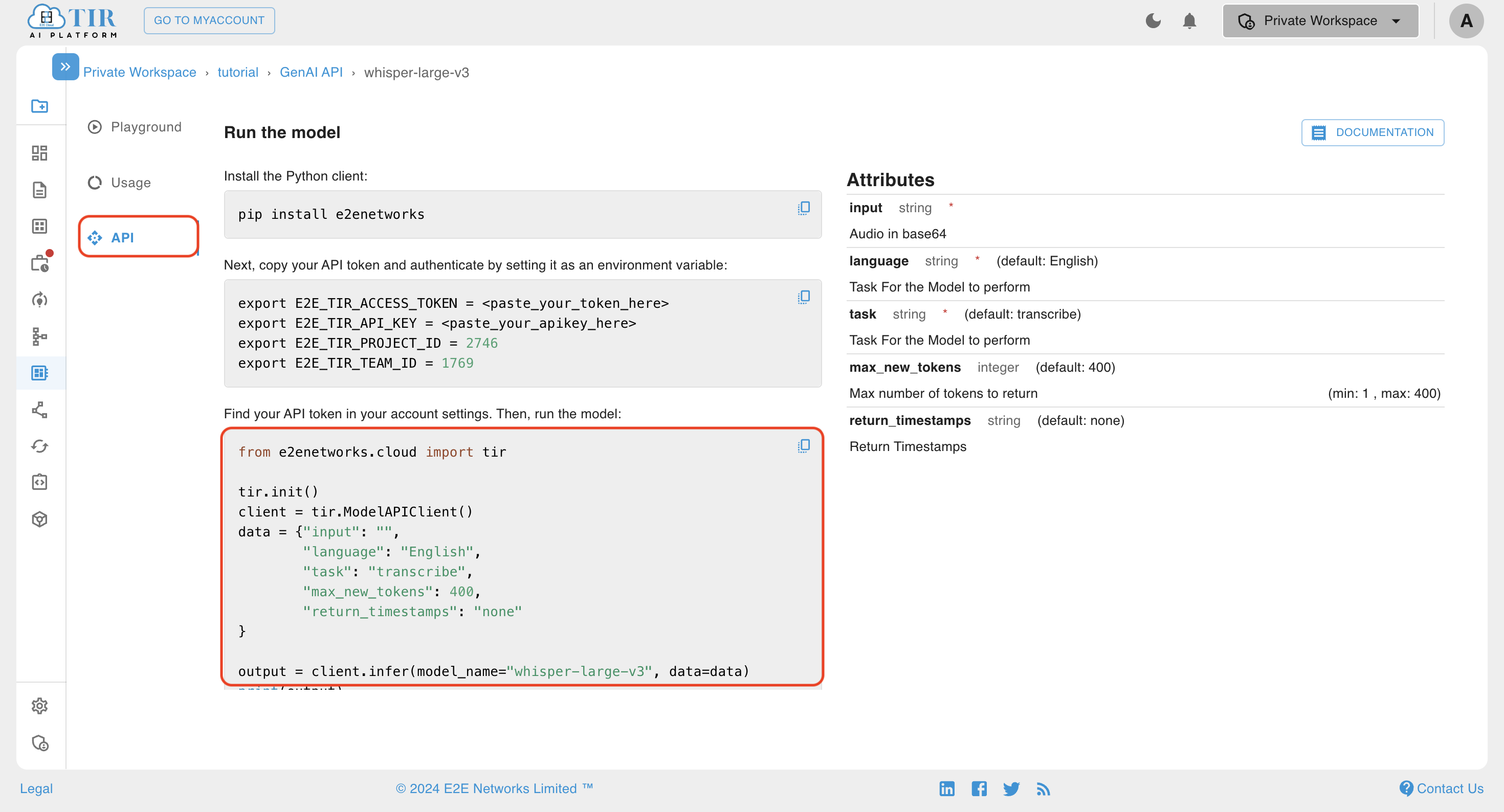

Using Whisper Large V3 Model

For this example, we will use the Whisper Large V3 model. Whisper is a speech-to-text model that can be used for tasks like transcription and translation.

Step 1: Select Whisper Large V3

Select the Whisper Large V3 card in the GenAI section.

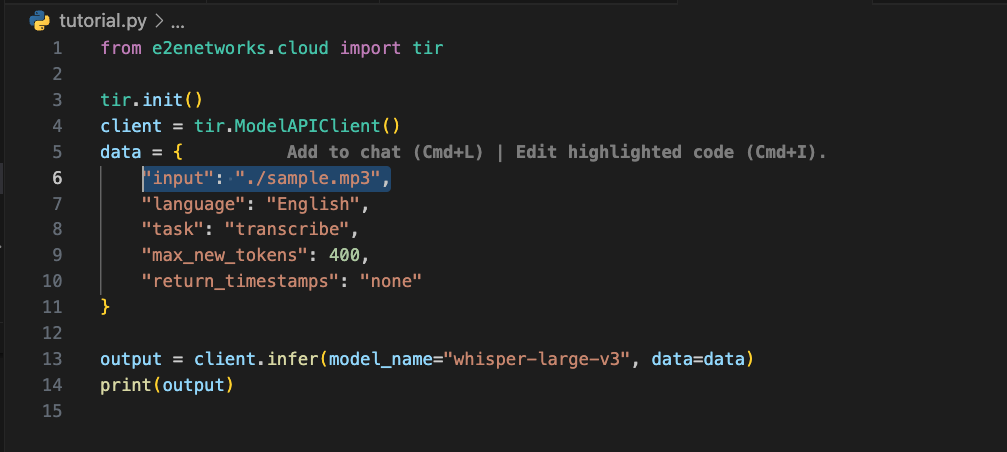

Step 2: Copy Sample Code

The TIR SDK is written in Python. Open the API tab and copy the sample code. Set the path of the audio file in the input field of the data dictionary and adjust the other parameters as needed.

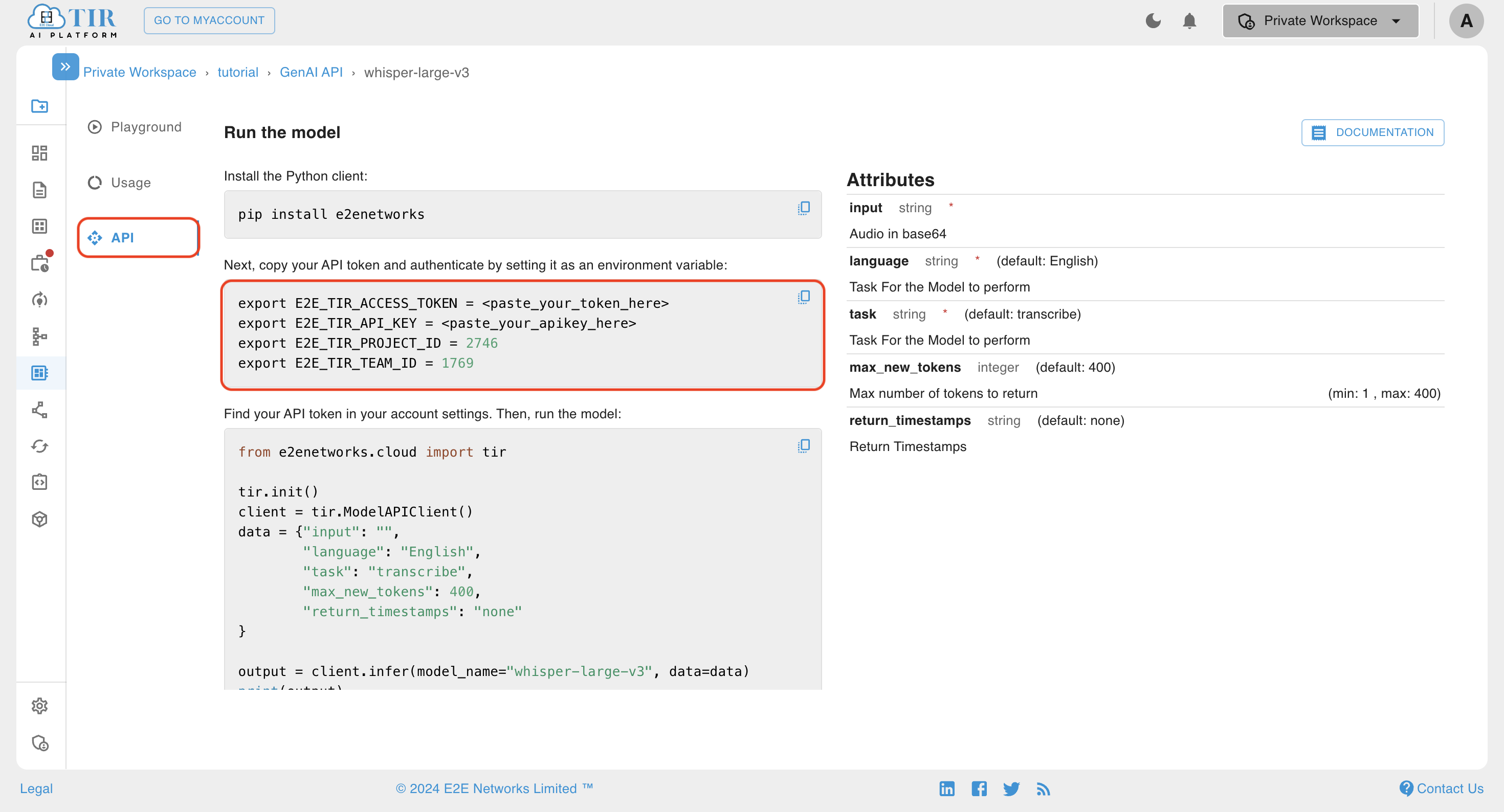

Step 3: Set Environment Variables

Before running the script, export the environment variables in the terminal. Replace the values of E2E_TIR_ACCESS_TOKEN and E2E_TIR_API_KEY with the Auth Token and API Key generated in the Generating Token to Access GenAI Models section.

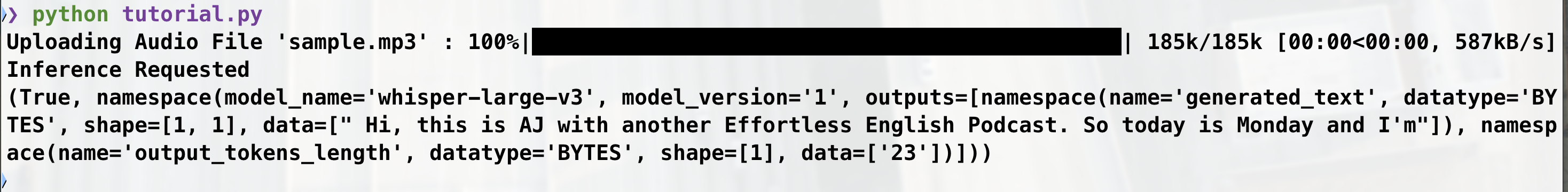

Step 4: Run the Script

Run the script, and the response will be printed.
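A missing environment variable or a wrong audio path only shows up when the script runs, so a small preflight check can save a failed attempt. The sketch below does not call the TIR SDK. The variable names and the input field come from the steps above; `missing_env_vars` and `build_data` are hypothetical helpers, and any other fields of the data dictionary should be taken from the sample code in the API tab.

```python
import os
from pathlib import Path

REQUIRED_ENV_VARS = ("E2E_TIR_ACCESS_TOKEN", "E2E_TIR_API_KEY")


def missing_env_vars(environ=os.environ) -> list:
    """Return the names of required variables that are unset or empty."""
    return [name for name in REQUIRED_ENV_VARS if not environ.get(name)]


def build_data(audio_path: str) -> dict:
    """Build the data dictionary with the audio path in its input field."""
    path = Path(audio_path)
    if not path.is_file():
        raise FileNotFoundError(f"audio file not found: {path}")
    # Only the input field is set here; add other parameters as needed.
    return {"input": str(path)}


if __name__ == "__main__":
    missing = missing_env_vars()
    if missing:
        print("Export these variables first:", ", ".join(missing))
    else:
        print("Environment looks ready.")
```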

How to Check Usage & Pricing of GenAI API?

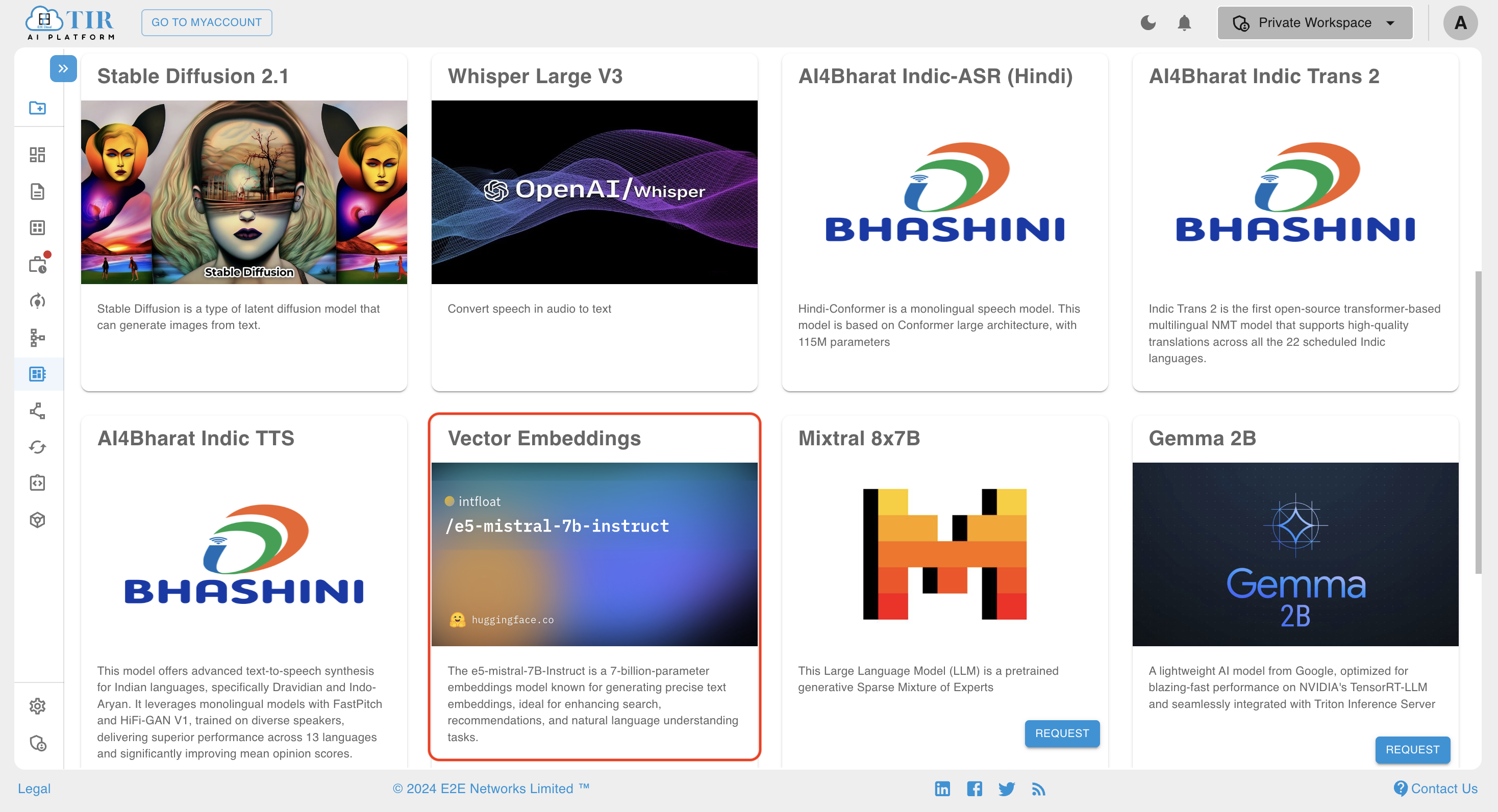

Step 1: Select Vector Embeddings Model

For example, select the Vector Embeddings model card in the GenAI section.

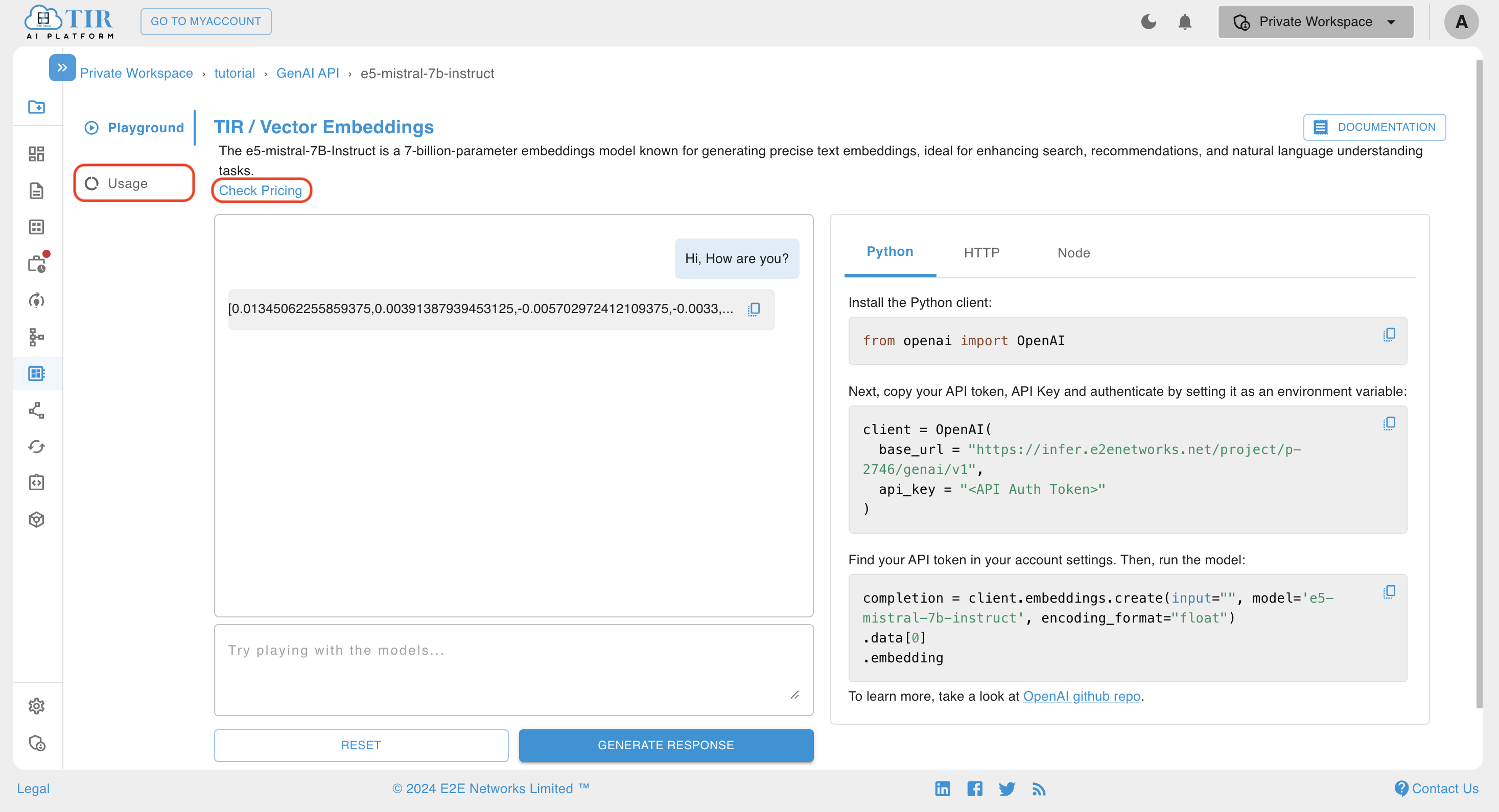

Step 2: Check Usage or Pricing

Open the Usage tab or click on Check Pricing.

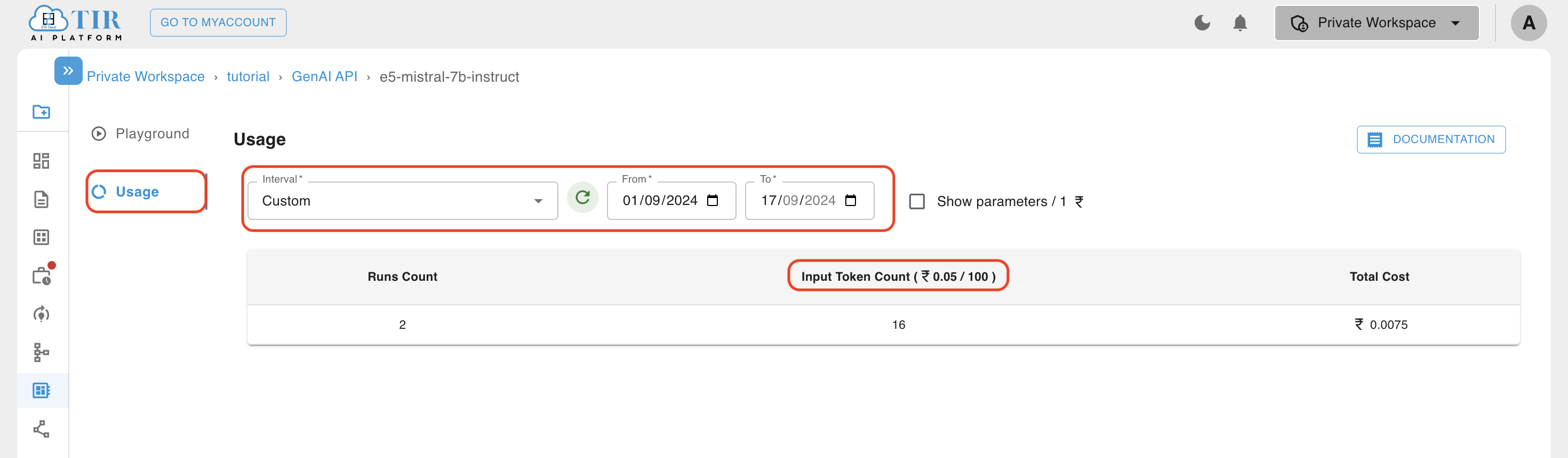

Step 3: Specify Custom Interval

You can also specify a custom interval to check billing between specific dates.

When a model has multiple billing parameters, each one is listed in the usage table along with its cost.
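To illustrate how several billing parameters add up to one charge, the sketch below sums units multiplied by rate for each row. The parameter names, unit counts, and rates are invented for the example and are not TIR prices; the real figures are the ones in the usage table.

```python
from decimal import Decimal


def total_cost(usage_rows) -> Decimal:
    """Sum units * rate over the billing parameters of one model.

    Each row is (parameter_name, units, rate_per_unit). Decimal is used
    so that small per-unit rates do not pick up floating-point error.
    """
    return sum(
        (Decimal(str(units)) * Decimal(str(rate))
         for _, units, rate in usage_rows),
        Decimal("0"),
    )


if __name__ == "__main__":
    # Hypothetical billing parameters and rates, for illustration only.
    rows = [
        ("input tokens", 1200, "0.0001"),
        ("output tokens", 300, "0.0002"),
    ]
    print(total_cost(rows))
```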