Chat Assistant

A Chat Assistant (also known as a virtual assistant or conversational agent) is an AI-driven system designed to engage in conversations with users, providing assistance, answering questions, and completing tasks.

Guide to Chat Assistant

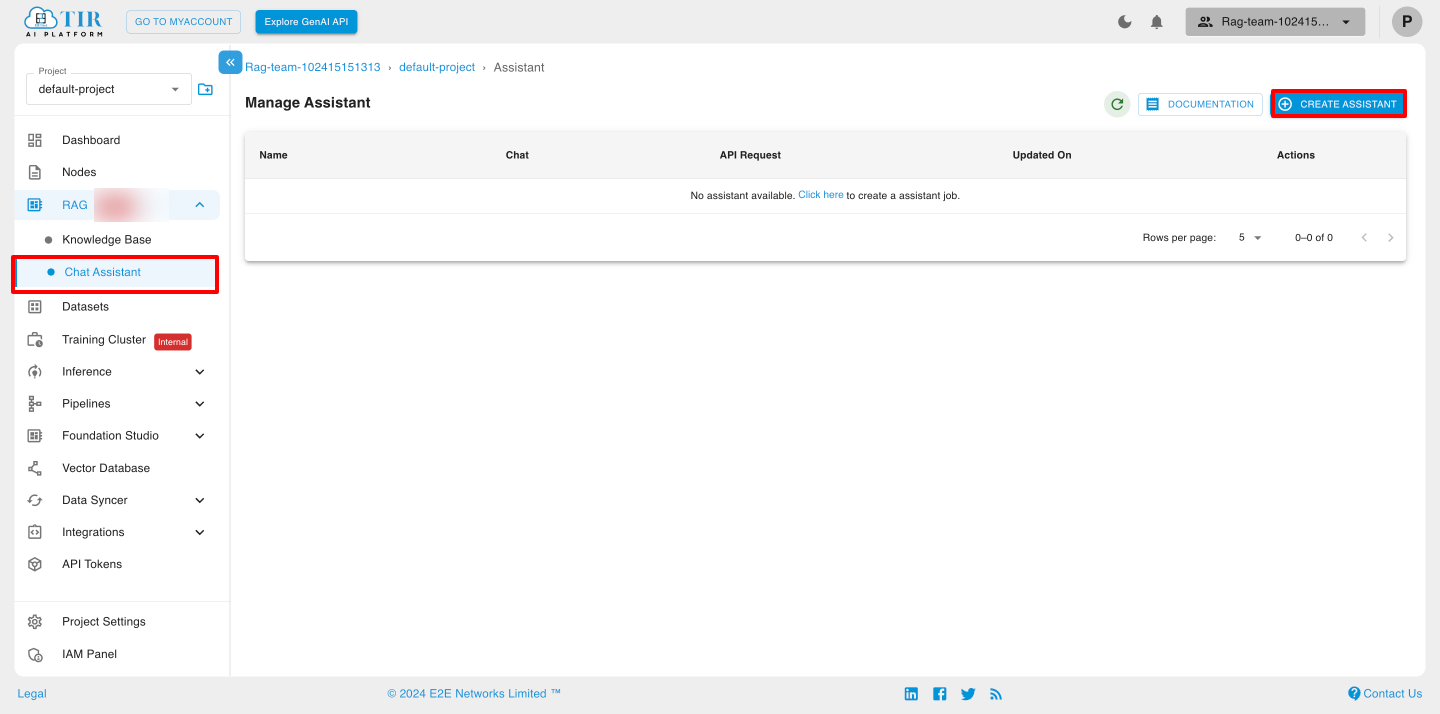

- In the left-side menu bar, click Chat Assistant under RAG, then click 'CREATE ASSISTANT' to create a new assistant.

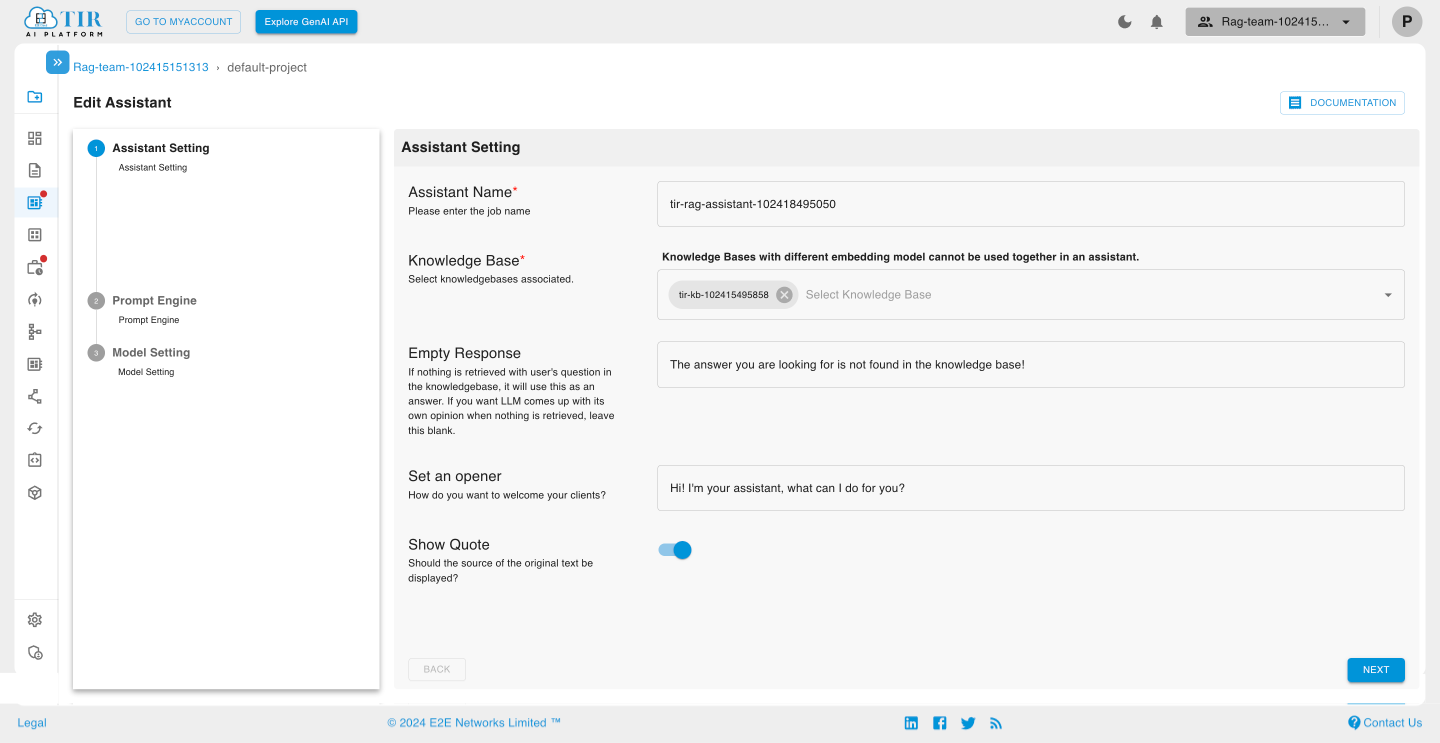

Assistant Settings

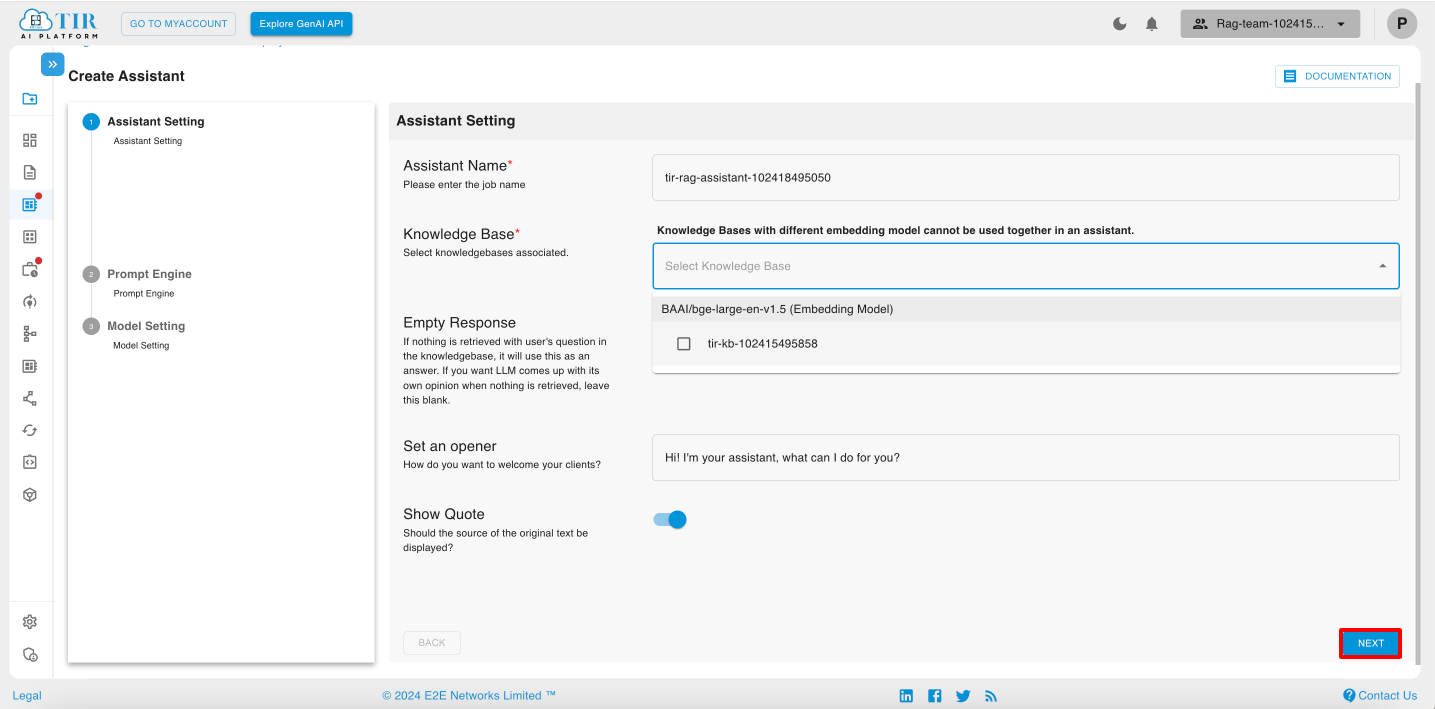

- After you click CREATE ASSISTANT, you are redirected to the Assistant Settings page, where you select the Knowledge Base(s) the assistant will use.

Note: Knowledge Bases with different embedding models cannot be used together in an assistant. However, you can select multiple Knowledge Bases that share the same embedding model.

- After selecting the Knowledge Base(s), click the Next button.
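A likely reason for the embedding-model rule in the Note above: a query is embedded with a single embedding model, and its vector can only be meaningfully compared with vectors produced by that same model (different models produce different vector spaces, often with different dimensions). The minimal Python sketch below expresses the rule as a selection check. The `KnowledgeBase` class, the `validate_selection` function, and the model names are hypothetical and do not describe this product's internal API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KnowledgeBase:
    # Hypothetical record holding only the fields this check needs.
    name: str
    embedding_model: str


def validate_selection(selected):
    """Return the shared embedding model, or raise ValueError if the selection mixes models."""
    if not selected:
        # Assumption for this sketch: an empty selection is treated as invalid.
        raise ValueError("Select at least one Knowledge Base.")
    models = {kb.embedding_model for kb in selected}
    if len(models) > 1:
        raise ValueError(f"Knowledge Bases use different embedding models: {sorted(models)}")
    return models.pop()


# Hypothetical Knowledge Bases and embedding model names, for illustration only.
hr = KnowledgeBase("HR Policies", "embedding-model-a")
it_docs = KnowledgeBase("IT Handbook", "embedding-model-a")
legal = KnowledgeBase("Legal", "embedding-model-b")

shared_model = validate_selection([hr, it_docs])  # both use one model, so this is allowed
```

Calling `validate_selection([hr, legal])` raises `ValueError`, because those two Knowledge Bases were built with different embedding models.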

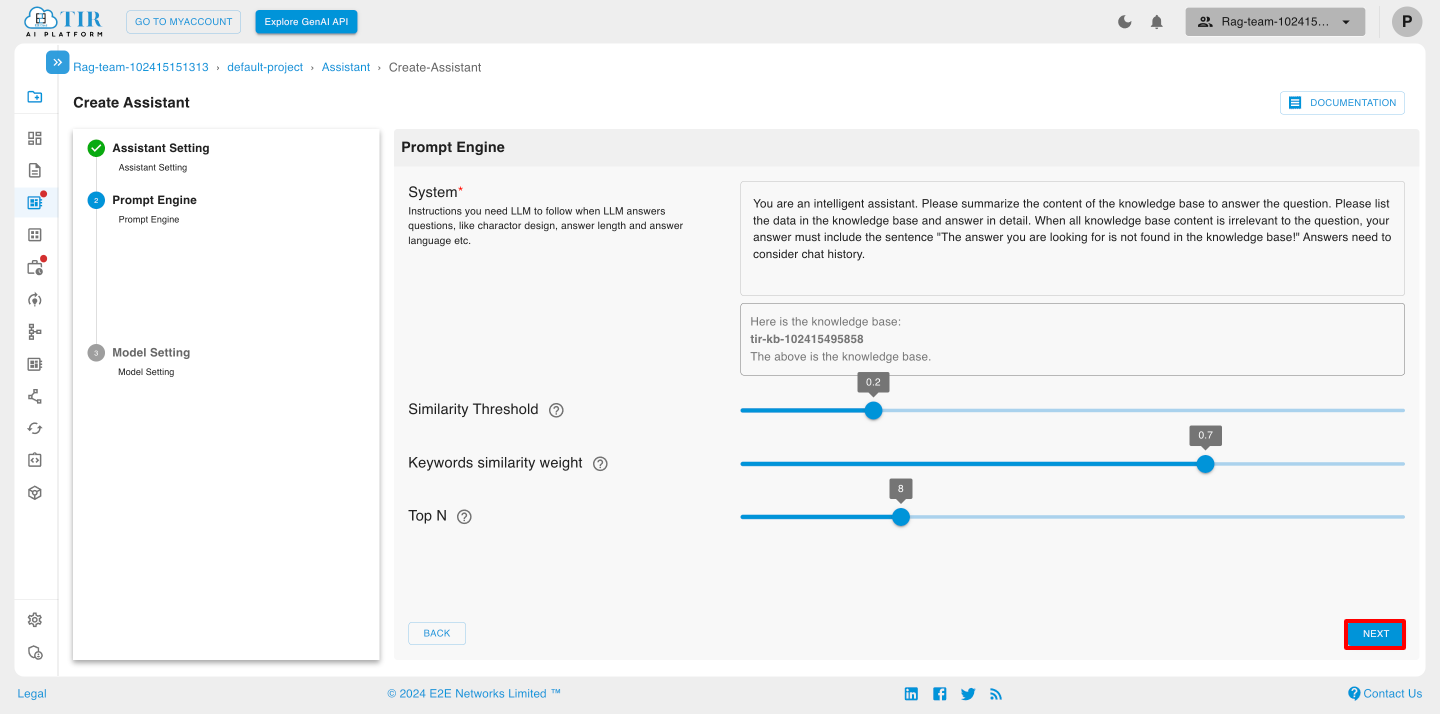

Prompt Engine

The Prompt Engine builds the prompts sent to the language model and manages how they are constructed, guiding the model to produce relevant, context-appropriate responses. Its settings control which retrieved content reaches the model and therefore strongly influence the quality of the assistant's answers.

- System: The system prompt, i.e. the foundational instructions that define the assistant's overall behavior and objectives (for example, its role, its tone, and how it should use the retrieved content). It is typically included in every prompt the prompt engine sends to the LLM in a RAG (Retrieval-Augmented Generation) pipeline.

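- Similarity Threshold: The minimum similarity score that content retrieved from the Knowledge Base must have with the user's query to be used when generating a response. Content scoring below the threshold is discarded; raising the threshold keeps only closer matches, while lowering it admits more, but potentially less relevant, content.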

- Keywords Similarity Weight: Controls how much keyword matching between the user's query and Knowledge Base entries contributes to the overall similarity score, relative to other signals such as semantic (embedding-based) similarity. A higher weight makes the presence of matching keywords count for more when entries are scored and compared.

- Top N: The number of highest-scoring results (e.g., 1, 3, or 5) that are kept for the user's query. Keeping only the best matches focuses the response on the most relevant content rather than overwhelming it with a large number of results.
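The way these settings interact can be sketched in Python. This is a minimal illustration, not the product's implementation: it assumes a hybrid score in which the Keywords Similarity Weight sets the share of a keyword-match score and the remainder goes to embedding similarity, with both scores normalized to [0, 1]. The `Chunk` class, the function names, the default values, the sample chunks, and the chat-message format are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    keyword_sim: float  # keyword-match score, assumed normalized to [0, 1]
    vector_sim: float   # embedding (semantic) similarity, assumed normalized to [0, 1]


def select_chunks(chunks, similarity_threshold=0.2, keyword_weight=0.3, top_n=3):
    """Score each chunk, drop those below the threshold, and keep the top_n best."""
    scored = []
    for chunk in chunks:
        # Assumed hybrid formula: keyword_weight is the keyword share,
        # and the remainder goes to embedding similarity.
        score = keyword_weight * chunk.keyword_sim + (1 - keyword_weight) * chunk.vector_sim
        if score >= similarity_threshold:  # Similarity Threshold: cut off weak matches
            scored.append((score, chunk))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:top_n]]  # Top N: keep only the best matches


def build_messages(system_prompt, query, chunks):
    """Combine the System prompt, retrieved context, and user query (hypothetical format)."""
    context = "\n".join(f"- {c.text}" for c in chunks)
    return [
        {"role": "system", "content": f"{system_prompt}\n\nContext:\n{context}"},
        {"role": "user", "content": query},
    ]


# Toy chunks with made-up similarity scores, for illustration only.
chunks = [
    Chunk("Refunds are issued within 14 days.", keyword_sim=0.9, vector_sim=0.8),
    Chunk("Returns are accepted for 30 days.", keyword_sim=0.6, vector_sim=0.7),
    Chunk("Standard shipping takes 3-5 days.", keyword_sim=0.1, vector_sim=0.5),
    Chunk("Our office is in Berlin.", keyword_sim=0.0, vector_sim=0.1),
]
top = select_chunks(chunks, top_n=2)
messages = build_messages("Answer only from the context.", "How long do refunds take?", top)
```

With these toy scores, `top_n=2` keeps the refund and returns chunks. The office chunk (score 0.07) is dropped by the threshold even if `top_n` is raised, so raising the threshold or lowering Top N both shrink the context the model sees.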

Model Settings

Model settings in chat assistants refer to the configuration parameters and options that dictate how the underlying language model operates, interacts with users, and processes input. These settings are crucial for customizing the assistant's behavior to meet specific use cases and user preferences.

Before you can create the Chat Assistant, you must choose a model source and a language model; for models served from an endpoint, you must also validate that the model is available.

Key Aspects of Model Settings

-

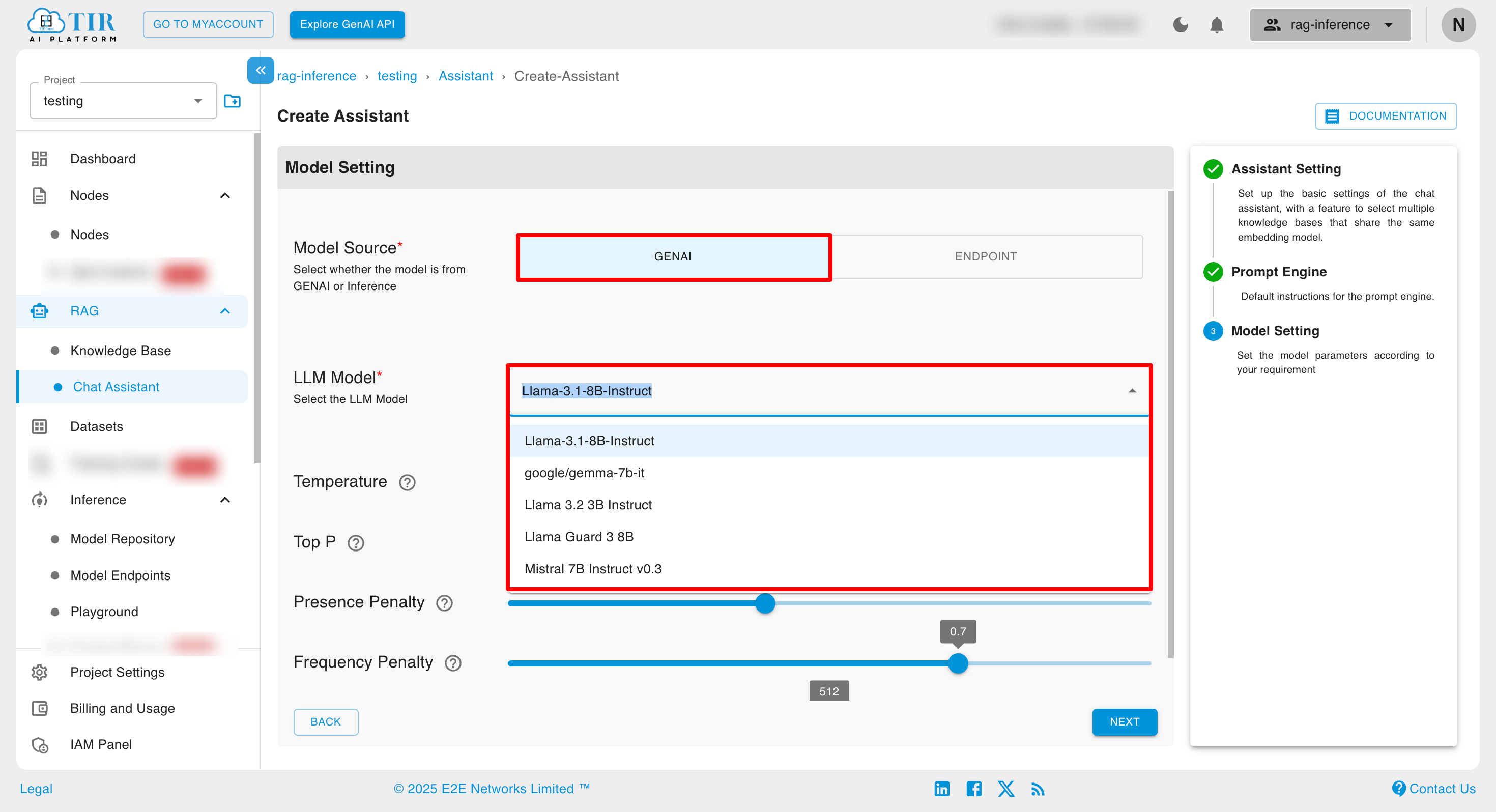

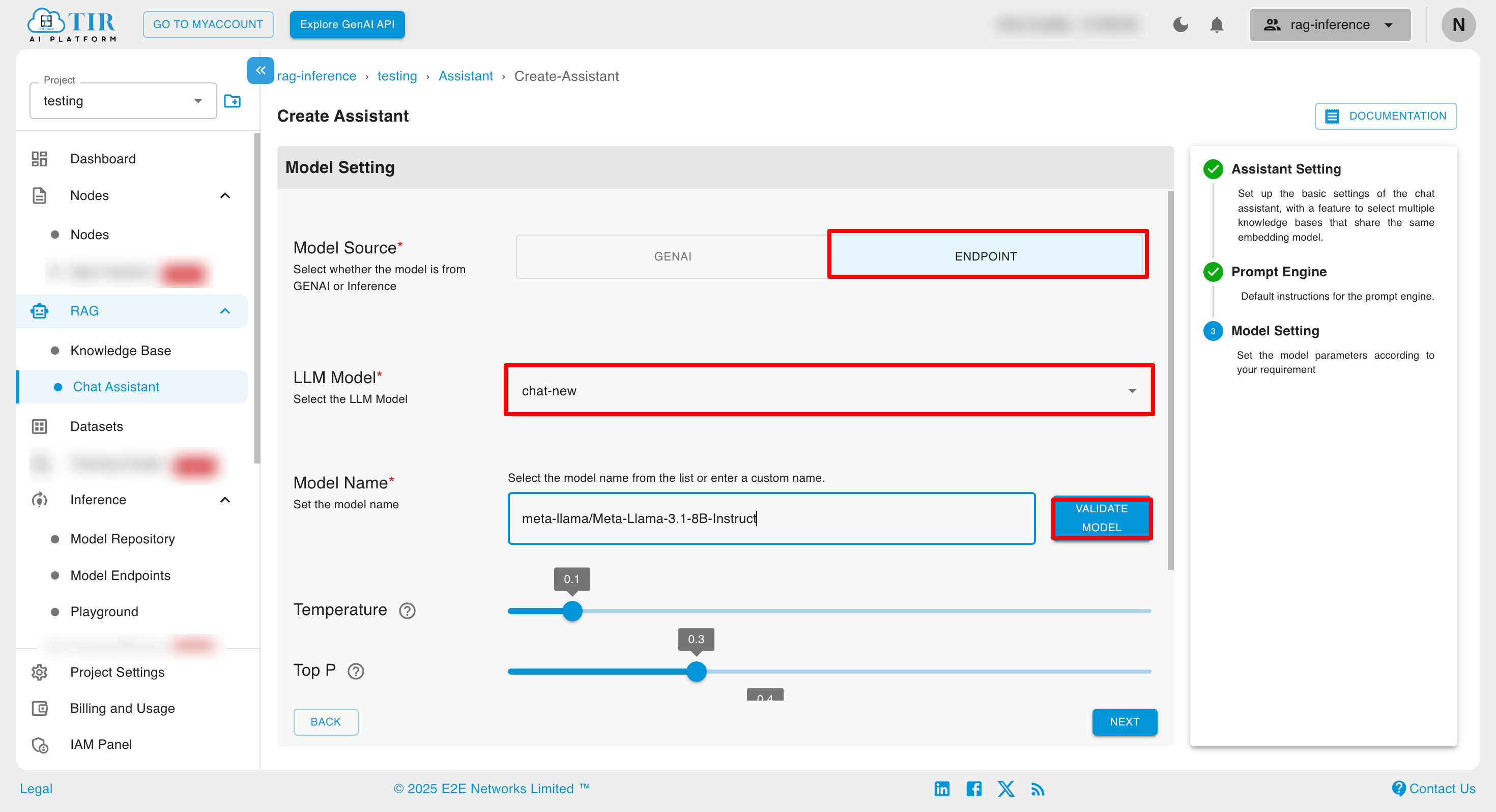

Model Source: Users can choose the model source as either GenAI or Endpoint.

- If GenAI is selected: Users must select one of the predefined LLM Models to proceed.

- If Endpoint is selected: Users must select both the LLM Model from the Model Endpoint and the Model Name, then validate the model's availability in the Endpoint. This validation ensures that the model exists and is ready for use before you proceed to create the Chat Assistant.

- LLM Model:

Specifies which language model generates the responses (e.g., Llama-3.1-8B-Instruct, Llama-3.2-3B-Instruct, Mistral 7B Instruct v0.3). Models differ in their strengths across tasks and domains, and in how well they understand context, generate responses, and handle complex queries.

- Temperature:

Controls the randomness of the model's output. A higher temperature (e.g., 0.8) makes the output more random and diverse, while a lower temperature (e.g., 0.2) makes responses more focused and predictable. Adjust the temperature to tune how creative or conservative the assistant's responses are.

- Top P:

Also known as nucleus sampling. The model samples only from the smallest set of most probable next tokens whose combined probability reaches P. For example, if P is set to 0.9, tokens are added in order of probability until their cumulative probability reaches 90%, and all less likely tokens are excluded. This helps keep responses coherent and relevant while still allowing some randomness.

- Presence Penalty:

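Penalizes tokens that have already appeared in the generated text, regardless of how many times they occurred. A higher presence penalty makes the model less likely to repeat what has already been said and more likely to move on to new topics; a lower value lets it stay closer to topics already mentioned.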

- Frequency Penalty:

Penalizes tokens in proportion to how often they have already appeared in the generated text. A higher penalty discourages repetition and promotes more varied language, which can improve dialogue quality by helping the assistant avoid repeating itself.

- Max Tokens:

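Defines the maximum number of tokens the model can generate in a single response. A token is the unit the model reads and writes; it may be a whole word, part of a word, or a punctuation mark. Setting this limit helps prevent overly long or verbose answers, but a limit that is too low can cut a response off before it is complete, so choose a value high enough for complete answers without overwhelming users.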
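To make the sampling settings above more concrete, the following Python sketch applies them to a toy set of next-token scores (logits). It assumes the penalty formula documented for OpenAI-compatible APIs (subtract `count * frequency_penalty`, plus a flat `presence_penalty` once a token has appeared); the product's actual implementation may differ. The `logits` dictionary and `logits_fn` are hypothetical stand-ins for a real language model, and this sketch requires a temperature greater than 0.

```python
import math
import random
from collections import Counter


def next_token_distribution(logits, generated, temperature=0.7, top_p=0.9,
                            presence_penalty=0.0, frequency_penalty=0.0):
    """Turn raw next-token logits into sampling probabilities using the settings above."""
    counts = Counter(generated)
    adjusted = {}
    for token, logit in logits.items():
        n = counts[token]
        # Frequency Penalty grows with each repeat; Presence Penalty applies once a token has appeared.
        adjusted[token] = logit - n * frequency_penalty - (presence_penalty if n > 0 else 0.0)

    # Temperature: scale logits before the softmax (must be > 0 in this sketch).
    scaled = {t: v / temperature for t, v in adjusted.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {t: math.exp(v - peak) for t, v in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(((t, e / total) for t, e in exps.items()), key=lambda tp: tp[1], reverse=True)

    # Top P (nucleus): keep the most probable tokens until their cumulative probability reaches top_p.
    nucleus, cumulative = [], 0.0
    for token, p in ranked:
        nucleus.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    norm = sum(p for _, p in nucleus)
    return {t: p / norm for t, p in nucleus}  # renormalize so the kept probabilities sum to 1


def generate(logits_fn, max_tokens=5, seed=0, **settings):
    """Sample exactly max_tokens tokens; a real model could also stop early at an end token."""
    rng = random.Random(seed)
    generated = []
    for _ in range(max_tokens):  # Max Tokens caps the length of the response
        dist = next_token_distribution(logits_fn(generated), generated, **settings)
        tokens, weights = zip(*dist.items())
        generated.append(rng.choices(tokens, weights=weights)[0])
    return generated


# Toy logits standing in for a model's scores for the next token.
logits = {"refund": 2.0, "return": 1.5, "policy": 0.5, "banana": -3.0}
dist = next_token_distribution(logits, [], temperature=1.0, top_p=0.9)
tokens = generate(lambda generated: logits, max_tokens=5)
```

With these toy logits at temperature 1.0, a Top P of 0.9 keeps "refund", "return", and "policy" and drops the unlikely "banana", while a Top P of 0.5 keeps only "refund". Lowering the temperature concentrates probability on "refund", and a presence or frequency penalty on an already-generated "refund" shifts the preference toward "return".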

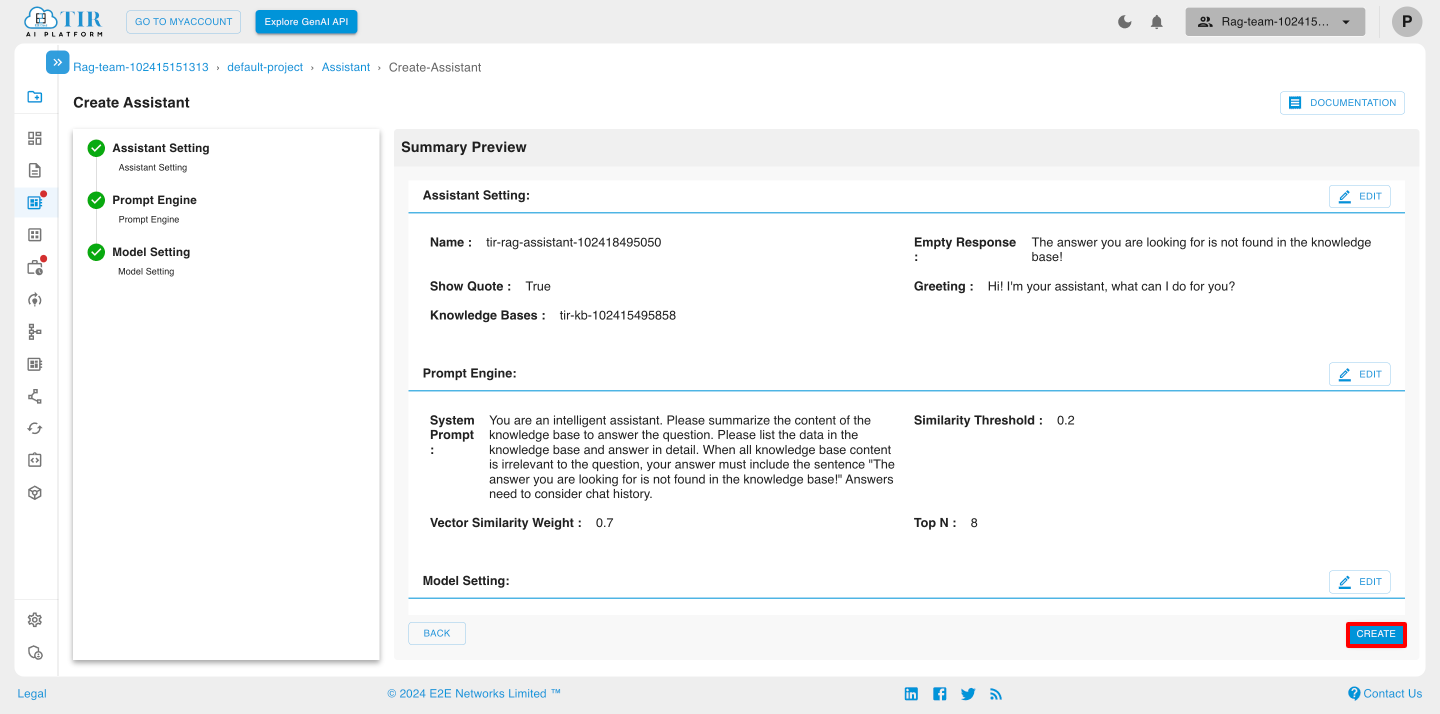

Summary Preview

In Summary Preview, you can edit Assistant Settings, the Prompt Engine, and Model Settings.

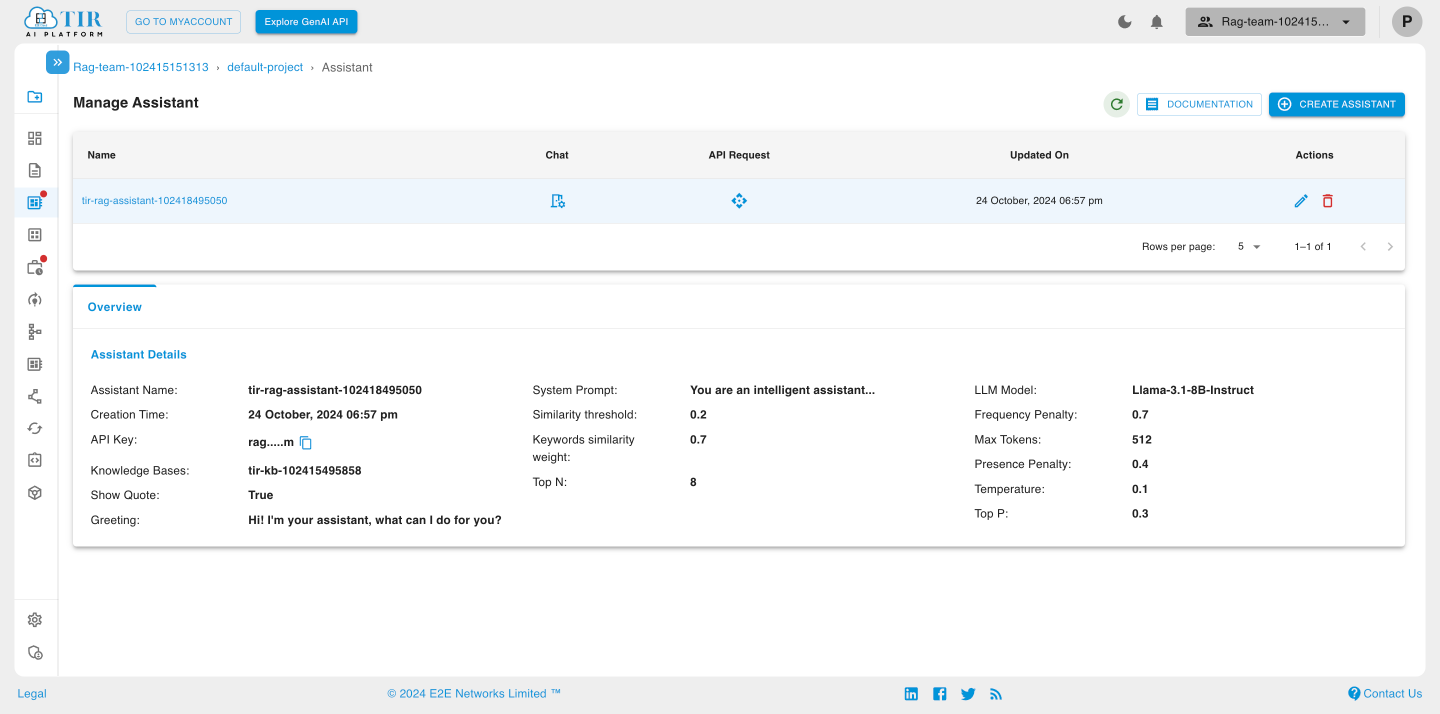

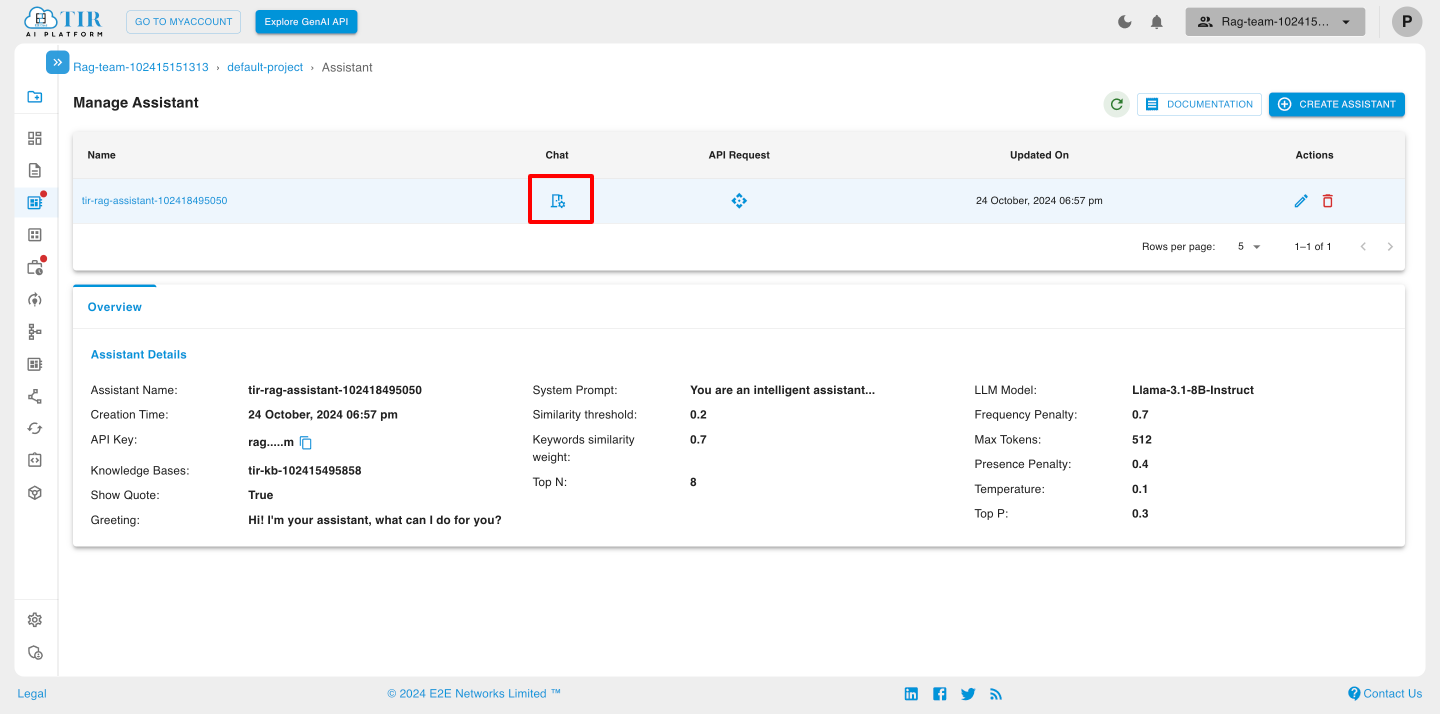

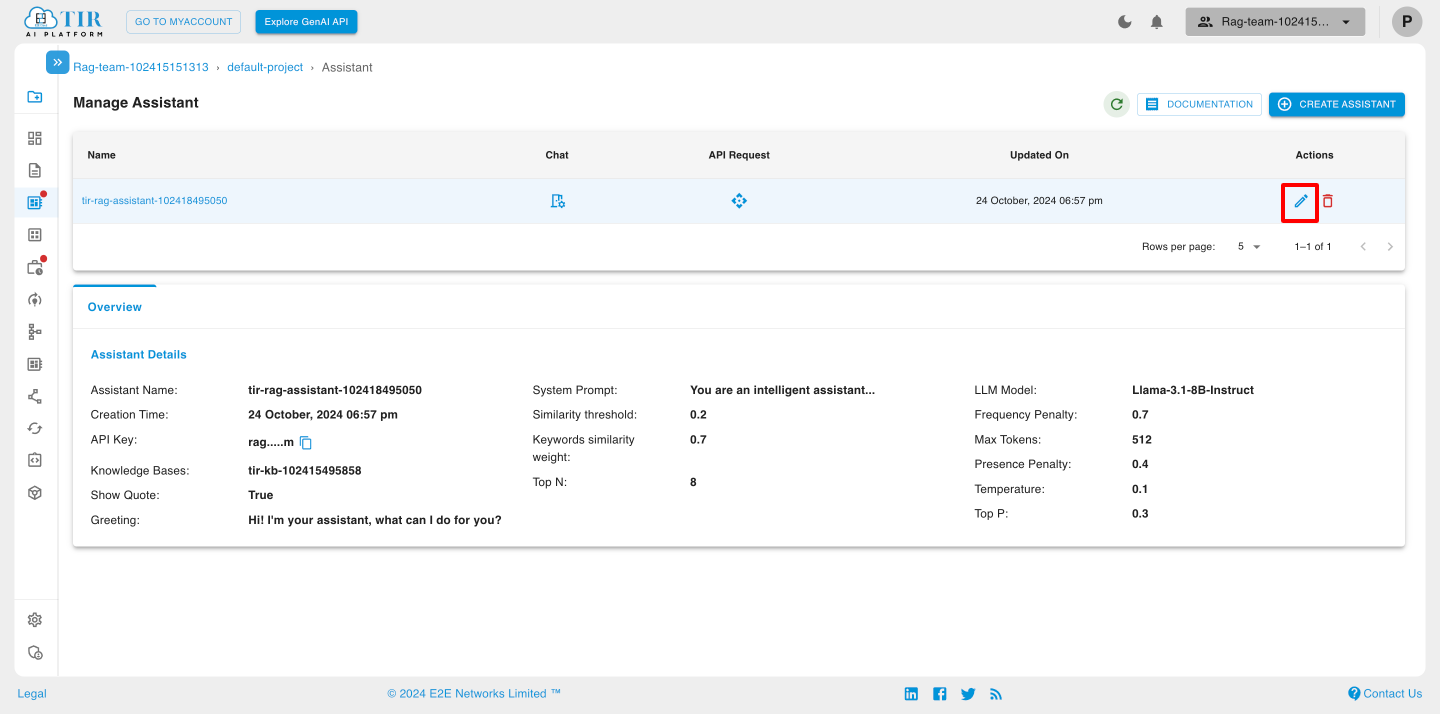

Manage Assistant

Overview

After successfully creating the assistant, you will be redirected to the Manage Assistant page, where you can view the Assistant Details in the Overview section.

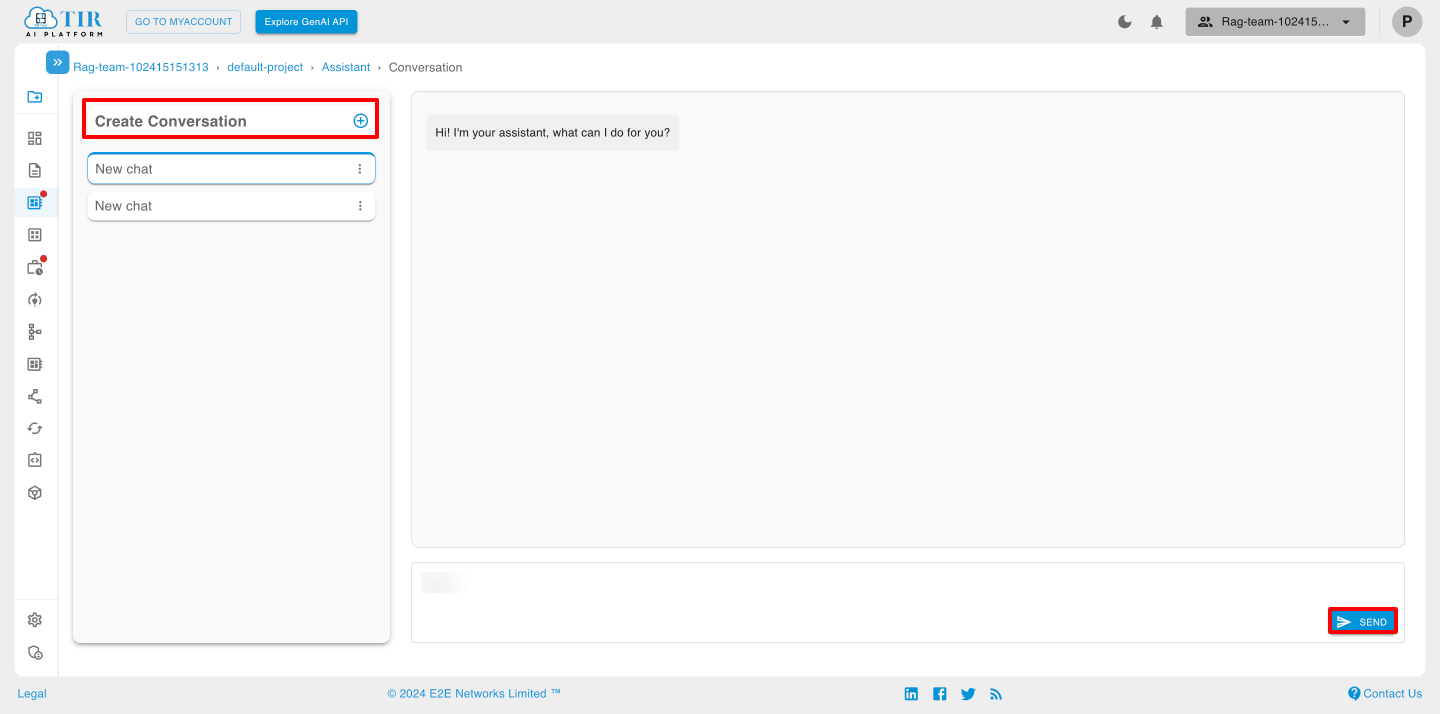

Chat

Chat functionality is the interactive dialogue system that lets users communicate with the assistant in natural language. Users can ask questions, seek information, and receive responses in real time.

Create Conversations

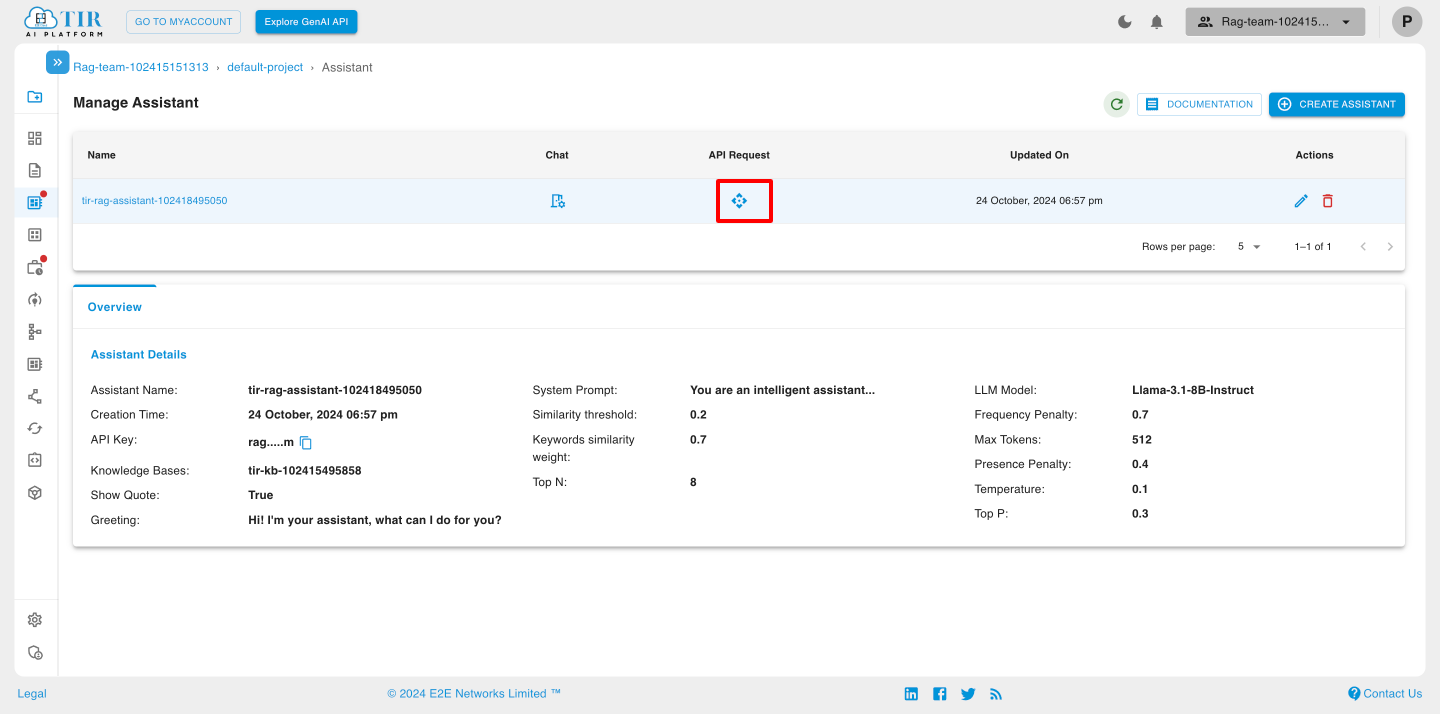

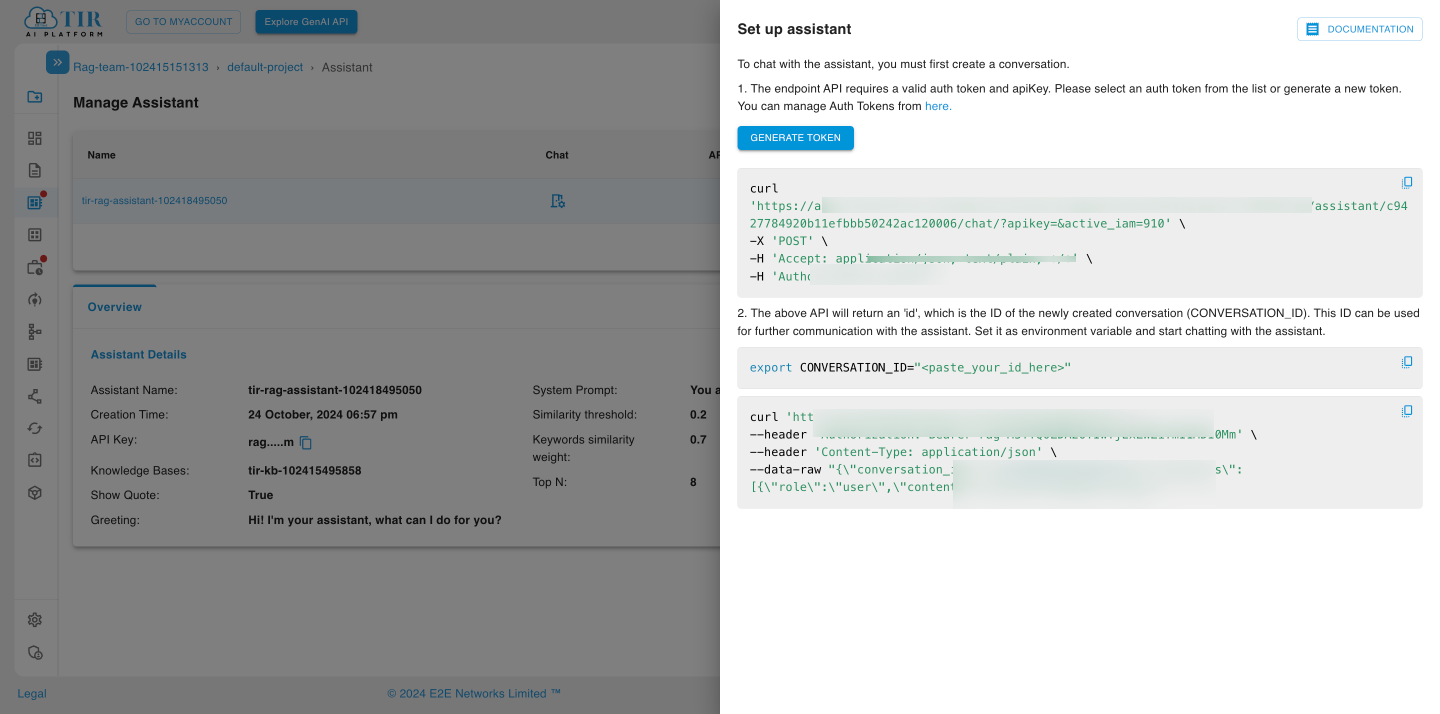

API Request

API requests in chat assistants refer to interactions between the assistant and external services or databases through Application Programming Interfaces (APIs). These requests enable the assistant to retrieve or send data, enhancing its functionality and allowing it to provide real-time information and services to users.

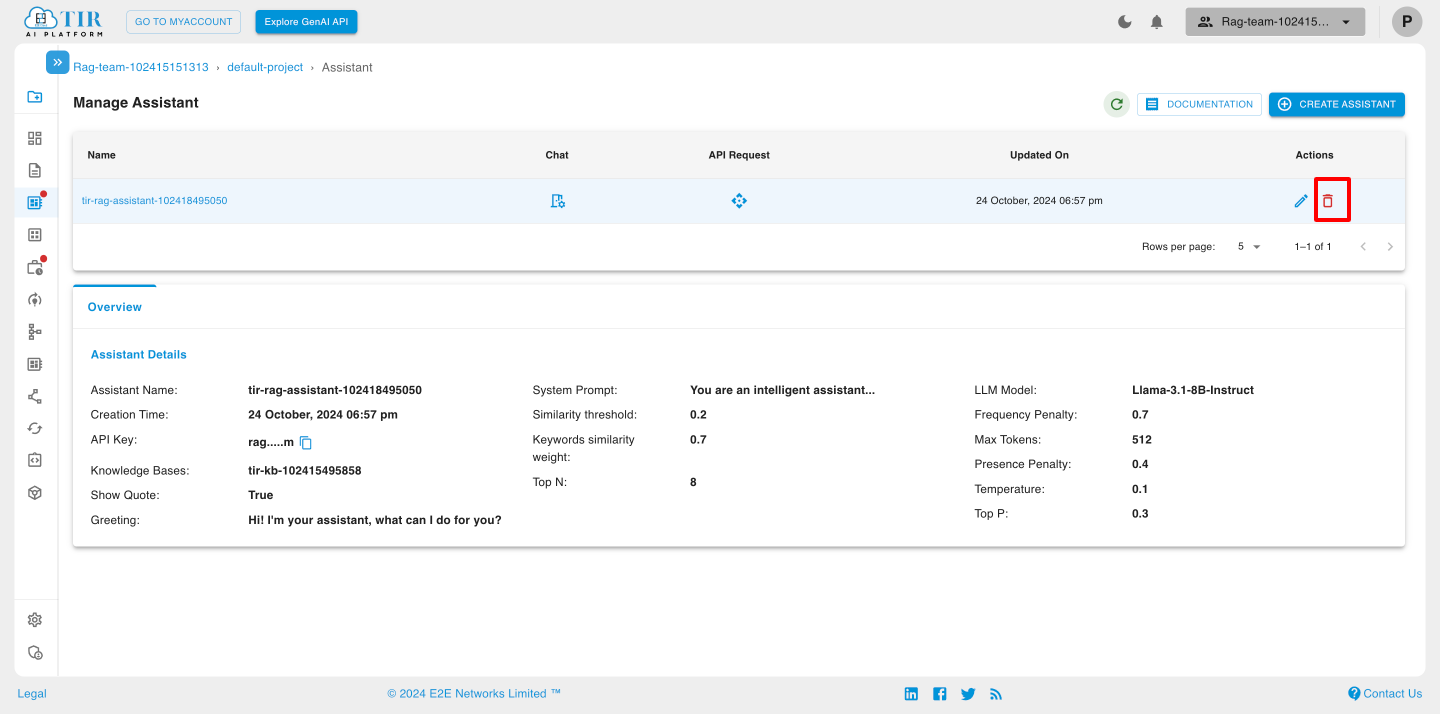

Actions

Edit Assistant

You can edit the Assistant by clicking the Edit icon.

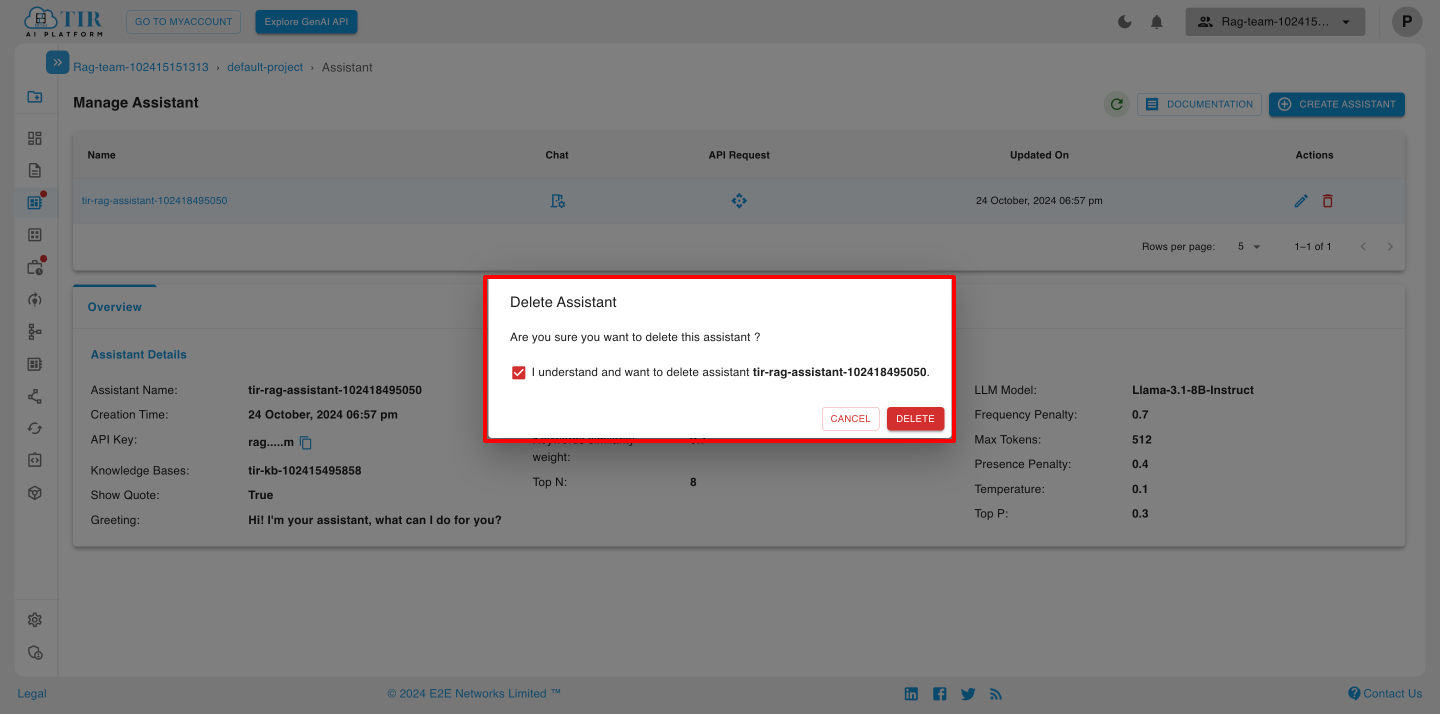

Delete Assistant

You can delete the Assistant by clicking the Delete icon, then clicking the Delete button to confirm.