Deploy Model Endpoint for YOLOv8

YOLOv8 is the newest model in the YOLO algorithm series, the most well-known family of object detection and classification models in the Computer Vision (CV) field.

The tutorial will mainly focus on the following:

- A step-by-step guide to creating a Model Endpoint and detecting objects in a video

- Creating a Model Endpoint with custom model weights

For the scope of this tutorial, we will use a pre-built container (YOLOv8) for the model endpoint, but you may choose to create your own custom container by following this tutorial.

In most cases, the pre-built container will work for your use case. Its advantage is that you do not have to build an API handler; one is created for you automatically.

So let's get started!

A guide on Model Endpoint creation and video processing using YOLO

Step 1: Create a Model Endpoint for YOLO on TIR

- Go to TIR AI Platform.

- Choose a project.

- Go to the Model Endpoints section.

- Click on the Create Endpoint button in the top-right corner.

- Choose the YOLOv8 model card in the Choose Framework section.

- Pick any suitable GPU or CPU plan of your choice. You can proceed with the default values for replicas, disk size, and endpoint details.

- Add your environment variables, if any. Otherwise, proceed further.

- Model Details: For now, we will skip the model details and continue with the default model weights.

  If you wish to load your custom model weights (fine-tuned or not), select the appropriate model. (See the Creating Model Endpoint with Custom Model Weights section below.)

- Complete the endpoint creation.

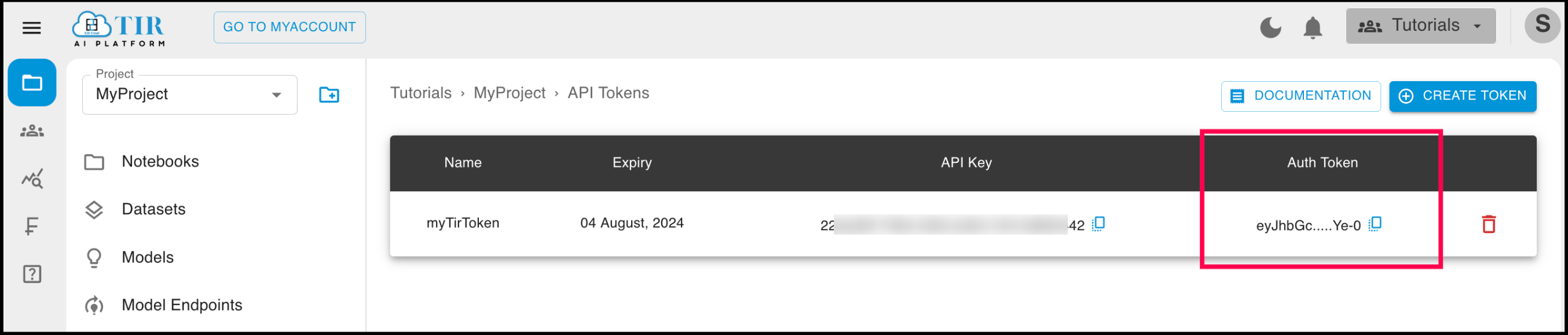

Step 2: Generate Your API Token

The model endpoint API requires a valid auth token, which you'll need to perform further steps. So, let's generate one.

- Go to the API Tokens section under the project.

- Create a new API Token by clicking on the Create Token button in the top-right corner. You can also use an existing token if one has already been created.

- Once created, you'll see the list of API Tokens containing the API Key and Auth Token. You will need this Auth Token in the next step.
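Rather than pasting the Auth Token directly into a notebook cell, one option is to read it from an environment variable. The sketch below assumes a variable named `TIR_AUTH_TOKEN`, which is a hypothetical name chosen for this example and not something TIR defines. It builds the same two headers that the request scripts in Step 3 use.

```python
import os


def auth_headers(env_var="TIR_AUTH_TOKEN", environ=None):
    """Build request headers from a token stored in an environment variable.

    `TIR_AUTH_TOKEN` is a hypothetical variable name used only in this sketch.
    """
    environ = os.environ if environ is None else environ
    token = environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set {env_var} to the Auth Token from the API Tokens section")
    return {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {token}",
    }
```

Keeping the token out of the notebook source avoids leaking it when the notebook is shared or committed.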

Step 3: Generate a Video with Object Detection using YOLOv8 Model

There are several steps involved in using the YOLOv8 model endpoints:

- First, you have to create a bucket to upload your input video. Please refer to Bucket Creation.

- After creating the bucket, upload your input video to that bucket. Please refer to Object upload in Bucket.

- Once your model endpoint is Ready, visit the Sample API Request section of that model endpoint and copy the Python code or cURL request.

- You will receive two Python scripts or cURL requests: one for processing the video and another for tracking the video processing.

- Launch a TIR Notebook with PyTorch or any appropriate image on any basic machine plan. Once it is in the Running state, launch it and start a new notebook `untitled.ipynb` in Jupyter Labs.

- Paste the Sample API Request code (for Python) in the notebook cell. Below is the sample code.

For processing a video, the Python script is given below:

    import requests

    # Enter your auth_token here
    auth_token = "your-auth-token"

    url = "https://jupyterlabs.e2enetworks.net/project/p-<project-id>/endpoint/is-<inference-id>/predict"

    payload = {
        "input": "$INPUT_VIDEO_FILE_NAME",  # Path to the input video file in the bucket
        "bucket_name": "$BUCKET_NAME",  # Bucket where the input video is stored and the output will be stored
        "access_key": "$ACCESS_KEY",  # Access key of the bucket
        "secret_key": "$SECRET_KEY",  # Secret key of the bucket
    }

    headers = {
        'Content-Type': 'application/json',
        'Authorization': f'Bearer {auth_token}'
    }

    response = requests.post(url, json=payload, headers=headers)

    # The response provides a JOB_ID, which you can use to track the status of video processing with the request below
    print(response.content)

- Specify the correct input path in the `input` field, along with the correct `bucket_name`, `access_key`, and `secret_key`, in the payload. Otherwise, the request will lead to an error.

- Hitting the above URL returns a job ID. Using that job ID, you can track the status of video processing.
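Because an unfilled or wrong payload field leads to an error, it can help to check the payload before sending it. The following is a minimal sketch and not part of the TIR sample code: `build_payload` is a hypothetical helper that rejects empty values and leftover `$PLACEHOLDER` strings, and the file, bucket, and key values in the usage lines are made up.

```python
def build_payload(input_path, bucket_name, access_key, secret_key):
    """Assemble the predict payload and reject obviously unfilled fields.

    Hypothetical helper for illustration: the field names match the sample
    payload above, while the checks are this sketch's own.
    """
    payload = {
        "input": input_path,
        "bucket_name": bucket_name,
        "access_key": access_key,
        "secret_key": secret_key,
    }
    for field, value in payload.items():
        if not isinstance(value, str) or not value.strip():
            raise ValueError(f"'{field}' must be a non-empty string")
        if value.startswith("$"):
            raise ValueError(f"'{field}' still holds the placeholder {value}")
    return payload


# Made-up values; pass the result as `json=payload` to requests.post.
payload = build_payload("videos/traffic.mp4", "my-bucket", "my-access-key", "my-secret-key")
```

This only catches values that are visibly unfilled. It cannot tell whether the path exists in the bucket or whether the keys are valid; the endpoint remains the authority on that.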

Tracking the Status of Video Processing

To track the status of video processing using the job ID, use the following Python script:

    import requests

    # Enter your auth_token here
    auth_token = "your-auth-token"

    url = "https://jupyterlabs.e2enetworks.net/project/p-<project-id>/endpoint/is-<inference-id>/status/$JOB_ID"

    headers = {
        'Content-Type': 'application/json',
        'Authorization': f'Bearer {auth_token}'
    }

    response = requests.get(url, headers=headers)

    print(response.content)

- Please mention the correct job ID in the URL.

- Copy the Auth Token generated in Step 2 and use it in place of `$AUTH_TOKEN` in the Sample API Request.

- Also, make sure to specify the appropriate `input`, `bucket_name`, `access_key`, and `secret_key` in the above Python script.
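Since the video is processed as a job that you track by ID, the status request is usually repeated until the job finishes. The sketch below shows one way to poll. It assumes nothing about the response format, so the caller supplies both the function that fetches the status and the predicate that decides when to stop. `poll_until`, `fetch_status`, and `is_finished` are hypothetical names, and the `"done"` marker in the usage lines is a stand-in rather than the endpoint's documented response.

```python
import time


def poll_until(fetch_status, is_finished, interval_s=10.0, max_attempts=60, sleep=time.sleep):
    """Call fetch_status() repeatedly until is_finished(result) is true.

    Returns the last result, or raises TimeoutError after max_attempts checks.
    """
    for attempt in range(1, max_attempts + 1):
        result = fetch_status()
        if is_finished(result):
            return result
        if attempt < max_attempts:
            sleep(interval_s)  # wait before the next status check
    raise TimeoutError(f"job not finished after {max_attempts} status checks")


# Usage with a fake status source. In a notebook, fetch_status could instead be
#   lambda: requests.get(url, headers=headers).text
# with `url` and `headers` defined as in the status script above.
fake_responses = iter(["pending", "pending", "done"])
final = poll_until(lambda: next(fake_responses), lambda r: r == "done", sleep=lambda s: None)
print(final)
```

Capping the number of attempts keeps a notebook cell from hanging indefinitely if the job never reaches a finished state.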

The output video file should have the .avi extension. Currently, only .avi files are supported as output.

Execute the Code & Send Request

- Execute the code and send the request.

- You can then view the output video, which is saved to the bucket under the output path `yolov8/outputs/`.
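To locate the result programmatically, one approach is to list the bucket's object keys and keep those under `yolov8/outputs/` that end in `.avi`. The helper below is a hypothetical sketch that works on any iterable of key strings. How the keys are obtained (for example, from an S3-compatible client) is left out, and the example keys are invented.

```python
def find_output_videos(object_keys, prefix="yolov8/outputs/"):
    """Return the keys under `prefix` that look like .avi output videos."""
    return sorted(
        key for key in object_keys
        if key.startswith(prefix) and key.lower().endswith(".avi")
    )


# Invented keys for illustration only.
keys = ["videos/traffic.mp4", "yolov8/outputs/traffic.avi", "yolov8/outputs/run.log"]
print(find_output_videos(keys))
```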

That's it! Your YOLOv8 model endpoint is up and ready for inference.

You can also try providing different input videos to see the generated output. The model supports object detection only.

Creating Model Endpoint with Custom Model Weights

To run inference against the YOLOv8 model with custom model weights, we will:

- Download the YOLO model in PyTorch format (for example, yolo.pt). No other format is supported.

- Upload the model to Model Bucket (EOS).

- Create an inference endpoint (model endpoint) in TIR to serve API requests.
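Since only PyTorch `.pt` weights are accepted, a quick local check before uploading can save a failed deployment. This is a minimal sketch with a hypothetical helper name. It only checks the file extension and that the file exists; it does not verify that the file is a valid YOLOv8 checkpoint.

```python
from pathlib import Path


def check_weights_file(path):
    """Return the path if it names an existing .pt file, else raise ValueError."""
    weights = Path(path)
    if weights.suffix != ".pt":
        raise ValueError(f"expected a .pt file, got '{weights.name}'")
    if not weights.is_file():
        raise ValueError(f"file not found: {weights}")
    return weights
```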

Step 1: Define a Model in TIR Dashboard

Before we proceed with downloading or fine-tuning (optional) the model weights, let us first define a model in the TIR dashboard.

- Go to the TIR AI Platform.

- Choose a project.

- Go to the Model section.

- Click on Create Model.

- Enter a model name of your choosing (e.g., `stable-video-diffusion`).

- Select Model Type as Custom.

- Click on CREATE.

- You will now see details of the EOS (E2E Object Storage) bucket created for this model.

- EOS provides an S3-compatible API to upload or download content. We will be using the MinIO CLI in this tutorial.

- Copy the Setup Host command from the Setup MinIO CLI tab to a notepad or leave it in the clipboard. We will soon use it to set up the MinIO CLI.

In case you forget to copy the setup host command for MinIO CLI, don't worry. You can always go back to model details and get it again.

Step 2: Upload the Model to Model Bucket (EOS)

Upload the model to the bucket. You can use the MinIO client for this.

    # Push the contents of the folder to the EOS bucket.
    # Go to TIR Dashboard >> Models >> Select your model >> Copy the cp command from the Setup MinIO CLI tab.
    # The copy command would look like this:
    # mc cp -r <MODEL_NAME> stable-video-diffusion/stable-video-diffusion-854588
    # Here we replace <MODEL_NAME> with '*' to upload all contents of the snapshots folder.
    mc cp -r * stable-video-diffusion/stable-video-diffusion-854588

The model directory name may be a little different (we assume it is models--stabilityai--stable-video-diffusion-img2vid-xt). If this command does not work, list the directories in the path below to identify the model directory:

$HOME/.cache/huggingface/hub

Step 3: Create an Endpoint for Our Model

Head back to the section "A guide on Model Endpoint creation and video processing using YOLO" above and follow the steps to create the endpoint for your model.

While creating the endpoint, make sure you select the appropriate model in the model details sub-section. If your model is not in the root directory of the bucket, make sure to specify the path where the model is saved in the bucket.

- Specify the correct path to the model and ensure the model file has the `.pt` extension.