Kubernetes

With the E2E MyAccount portal, you can quickly launch the Kubernetes master and worker nodes and get started with your Kubernetes cluster in just a few minutes.

Getting Started

How to Launch Kubernetes Service from MyAccount Portal

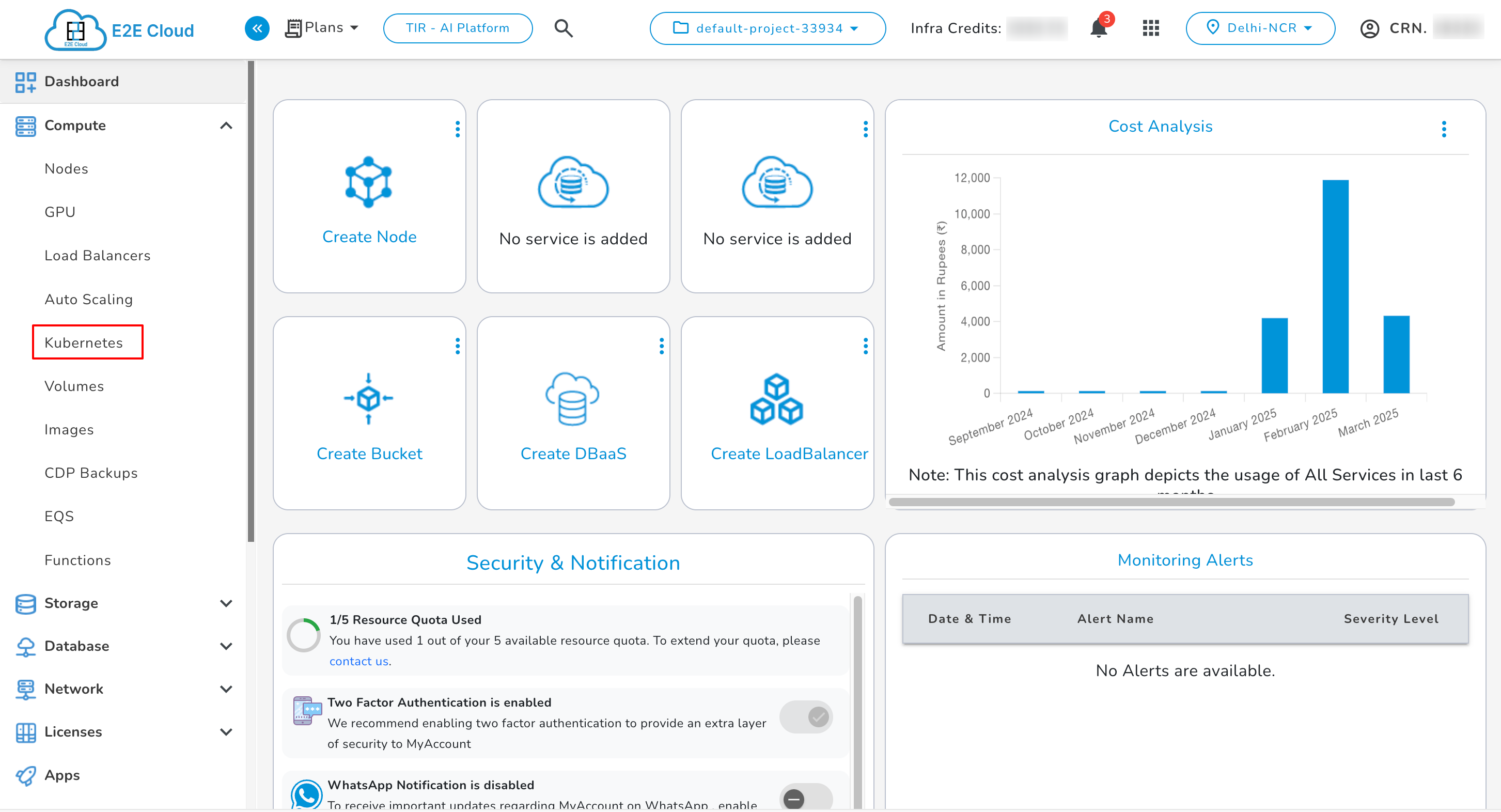

Login into MyAccount

Please go to ‘MyAccount’ and log in using your credentials set up at the time of creating and activating the E2E Networks ‘MyAccount’.

Navigate to Kubernetes Service Create Page

After logging into the E2E Networks ‘MyAccount’, click on the following options:

- On the left side of the MyAccount dashboard, click on the “Kubernetes” sub-menu available under the Compute section.

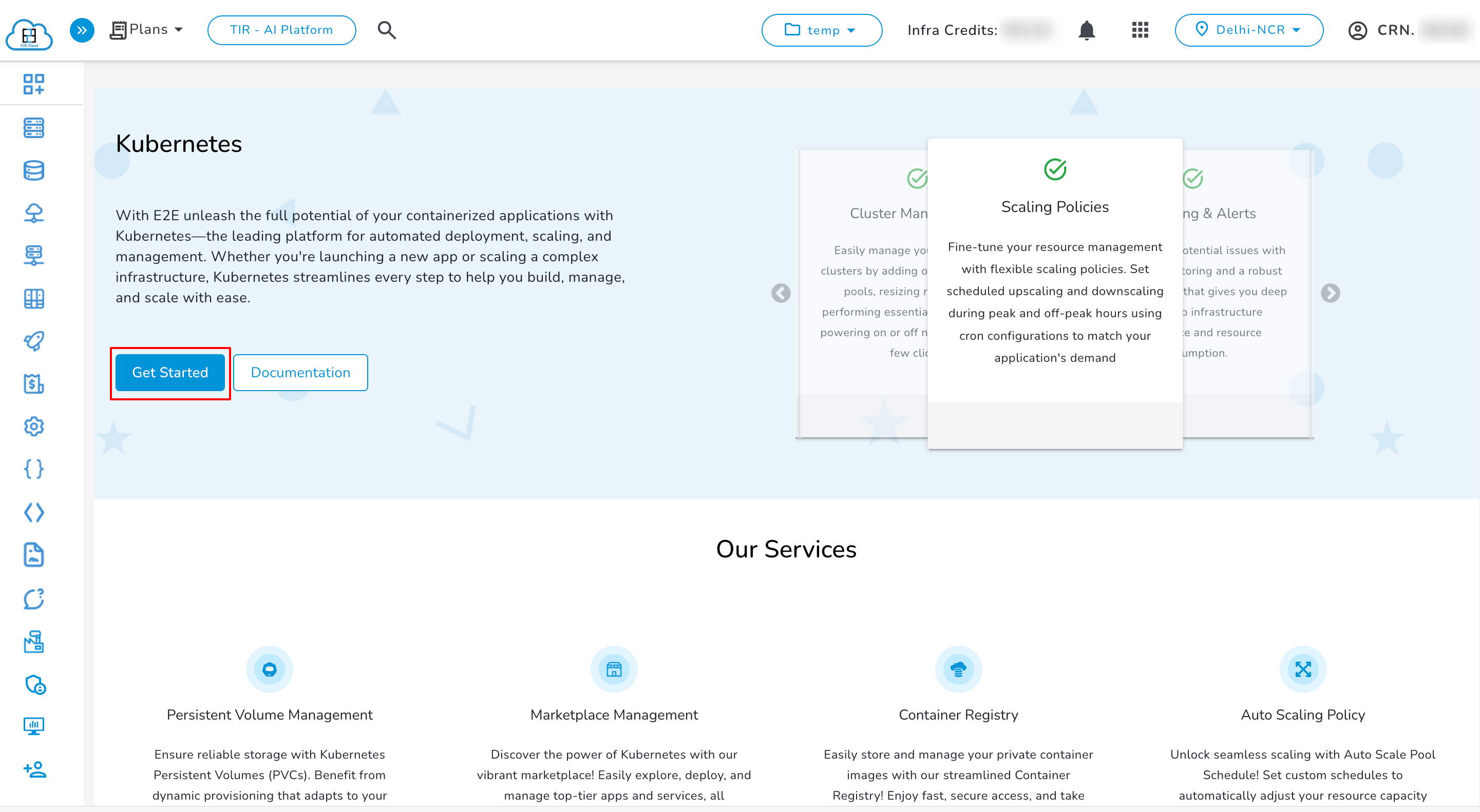

Create Kubernetes Service

In the top right section of the Kubernetes service dashboard, click the Create Kubernetes icon. This takes you to the cluster page, where you select the configuration and enter the details of your cluster.

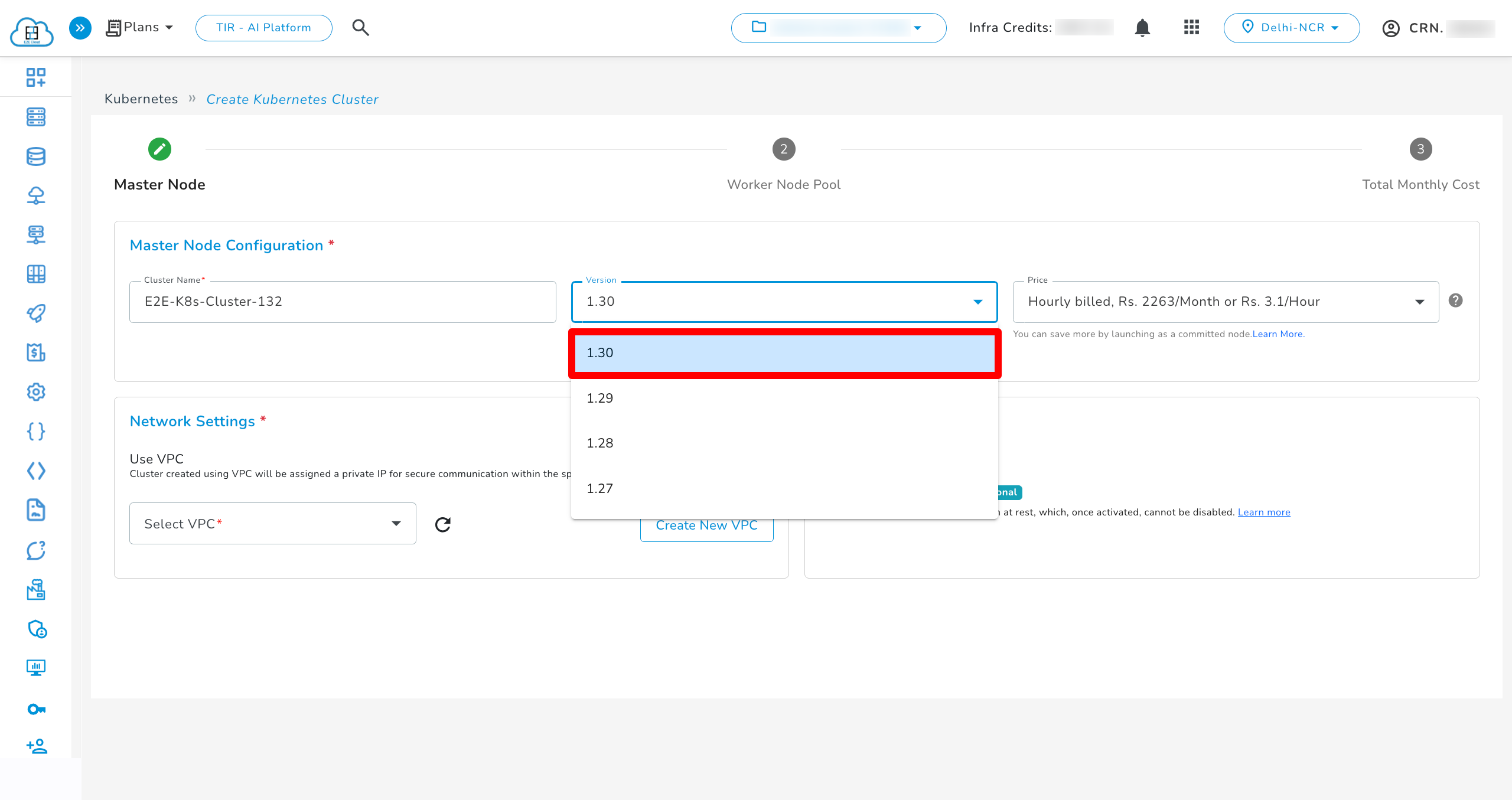

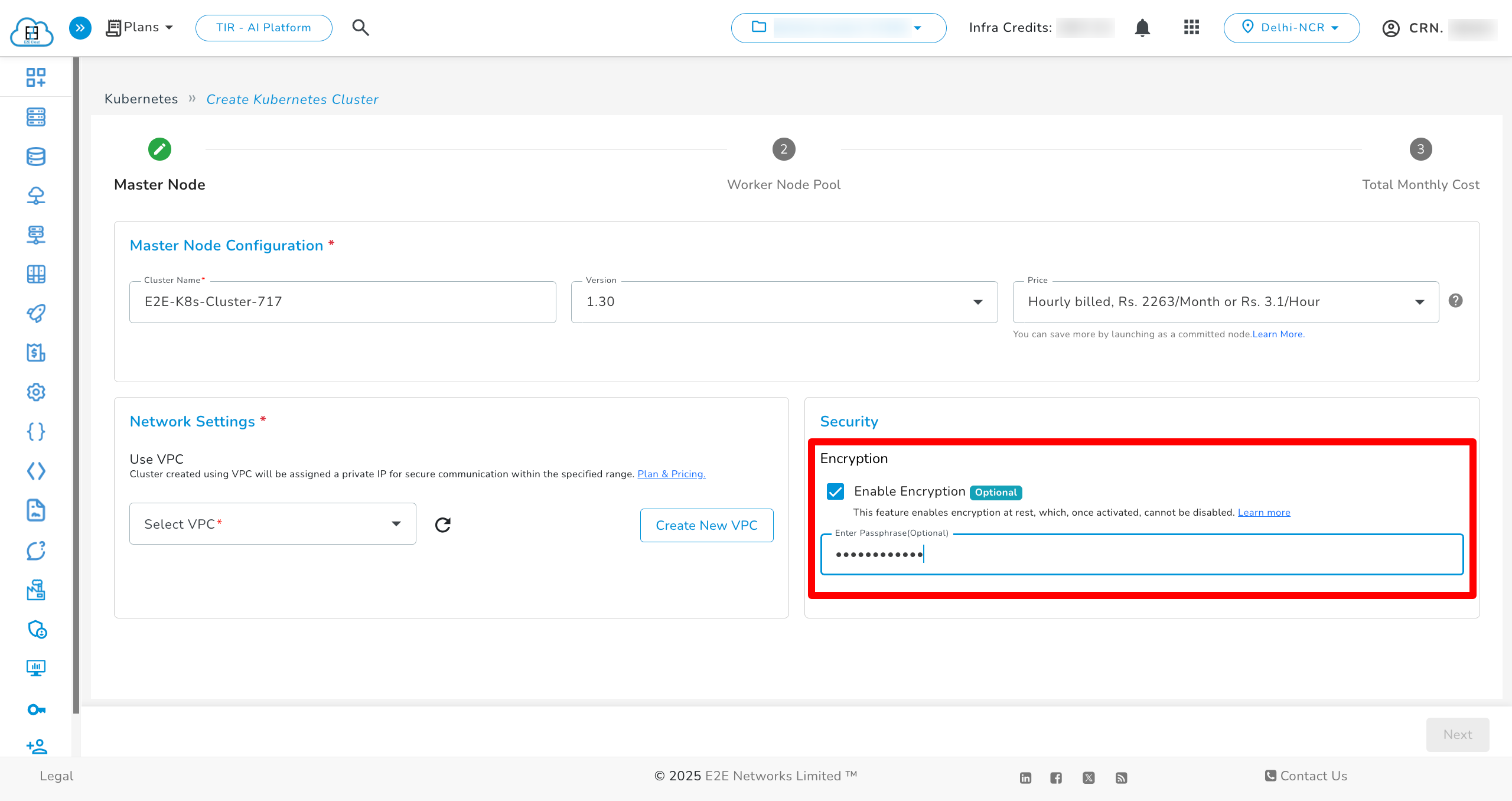

Kubernetes Configuration and Settings

After clicking Create Kubernetes, select the required master node configuration and network settings for your cluster, as shown below. Once done, click Next to add worker node pools.

- You can choose from multiple Kubernetes versions when configuring your cluster. Each version is labeled with its release number and support status. Make sure to select a version that aligns with your application requirements or compatibility needs.

- To encrypt the cluster, select the Enable Encryption checkbox. Entering a passphrase is optional.

What is a Worker Node Pool?

A Worker Node Pool is a collection of nodes with the same configuration (CPU, memory, disk) used to run workloads in your Kubernetes cluster. Each node pool can be scaled individually and allows you to optimize resource allocation for different workloads (e.g., compute-intensive apps vs memory-heavy apps).

You can create multiple pools to manage diverse workloads efficiently. Adding pools also enables granular scaling and maintenance flexibility without impacting the entire cluster.
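To send a workload to a specific pool (for example, memory-heavy apps to a pool of high-memory nodes), you can use a Kubernetes nodeSelector. This sketch assumes the pool's nodes carry a label you can select on; the key and value pool=memory-pool are hypothetical, so check the real labels with kubectl get nodes --show-labels before using it.

```shell
# Sketch: pin a Deployment to one worker node pool with a nodeSelector.
# ASSUMPTION: the label pool=memory-pool is hypothetical; replace it with a
# label that the nodes in your pool actually carry.
cat <<EOF > memory-app.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: memory-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: memory-app
  template:
    metadata:
      labels:
        app: memory-app
    spec:
      # Only nodes with this label are eligible to run the Pods.
      nodeSelector:
        pool: memory-pool
      containers:
        - name: app
          image: nginx:stable
EOF
# Apply with: kubectl apply -f memory-app.yaml
```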

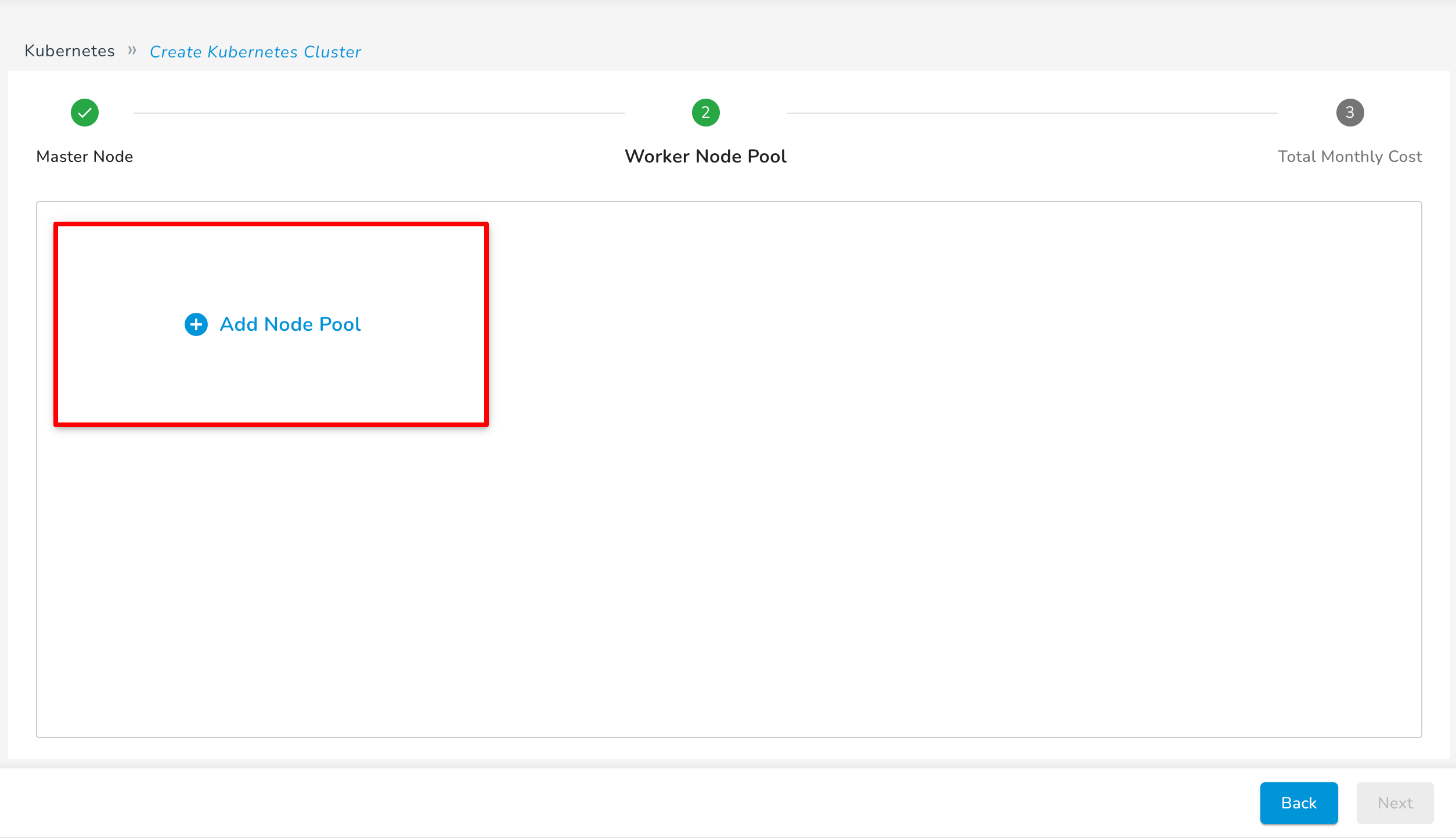

Add Worker Node Pool

On the worker node pool page, click "Add Node Pool".

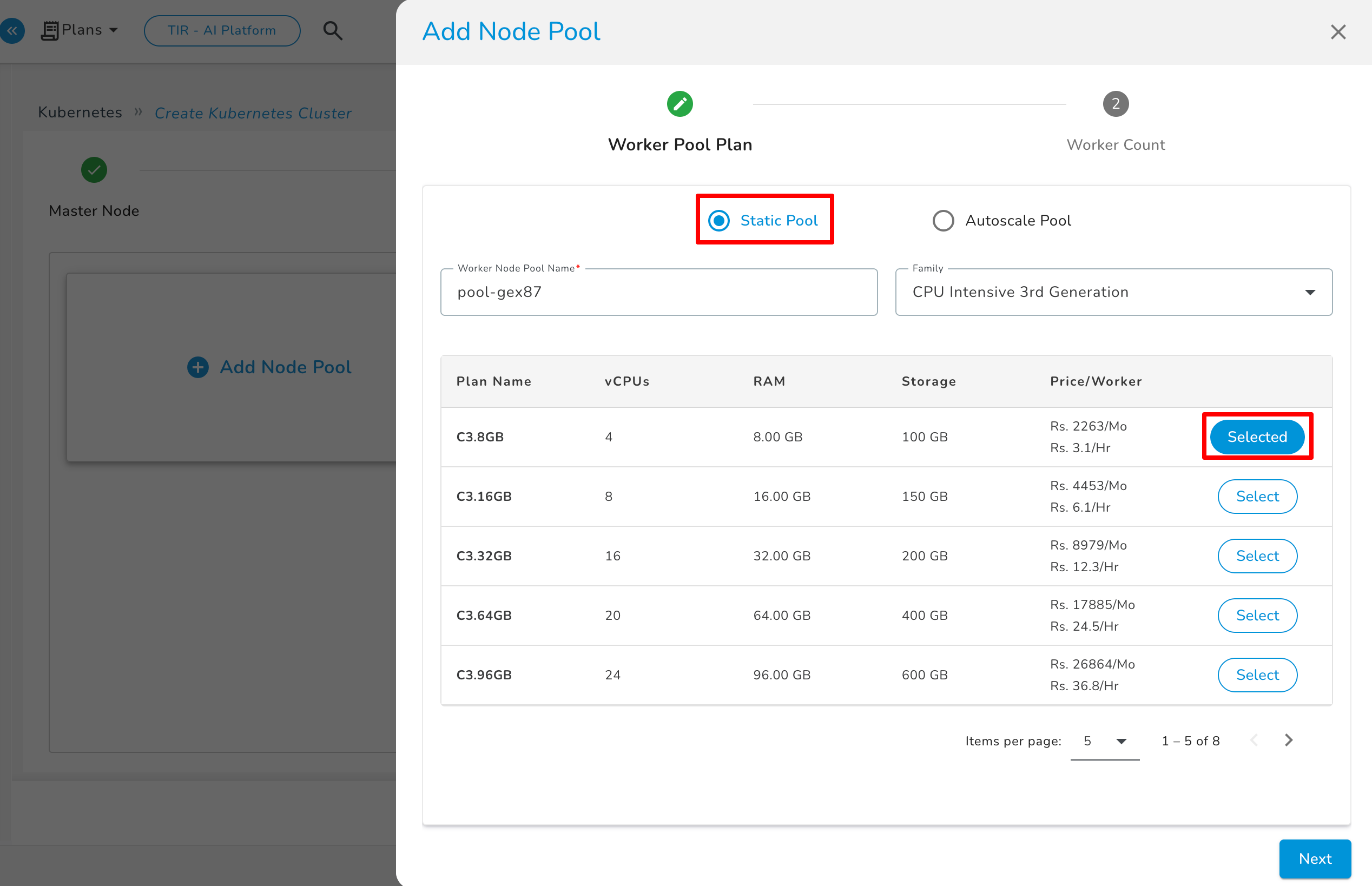

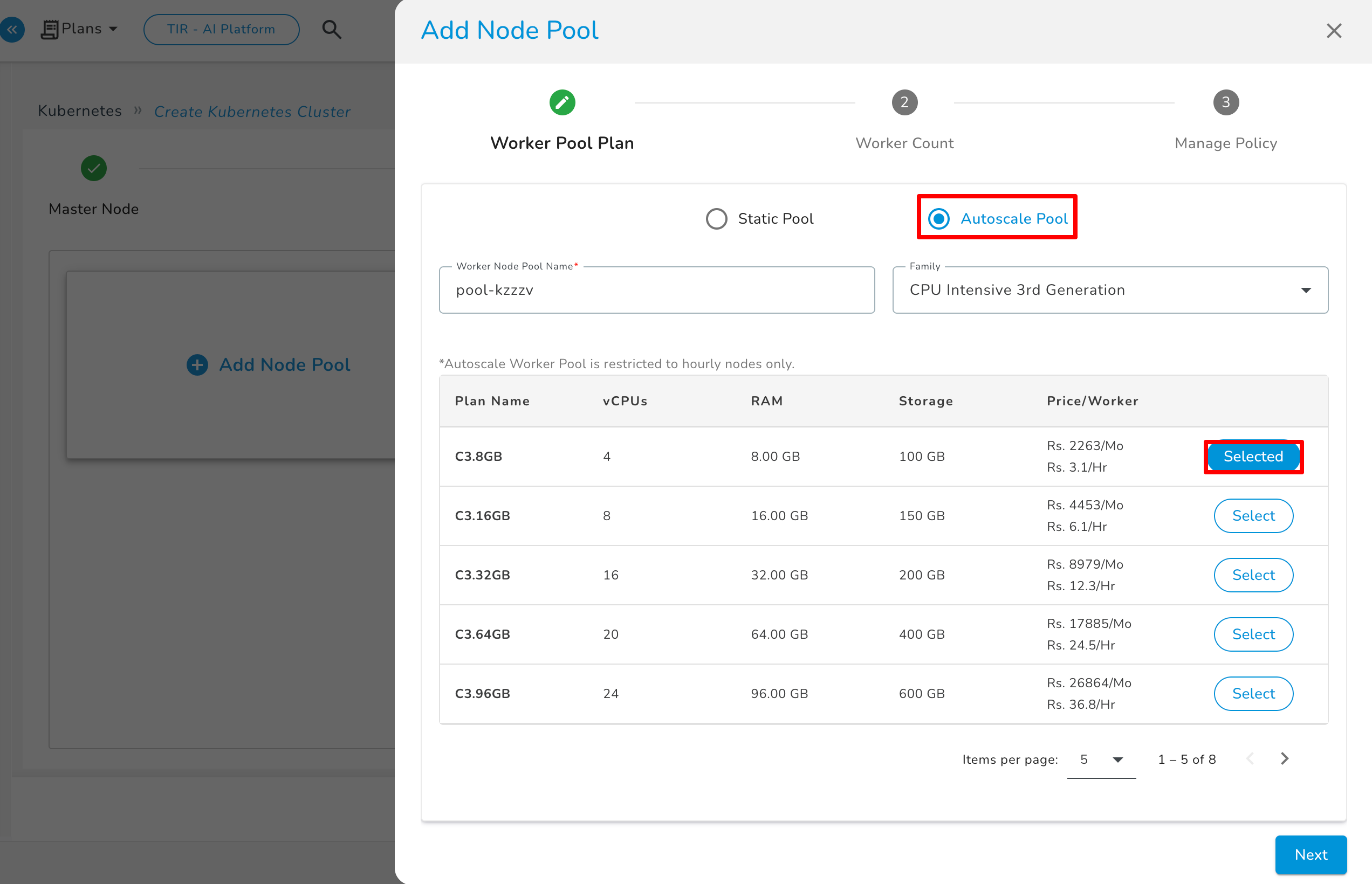

You can select either a Static Pool or an AutoScale Pool.

Static Pool

Select the worker node configuration and click Next to set the number of nodes in the pool.

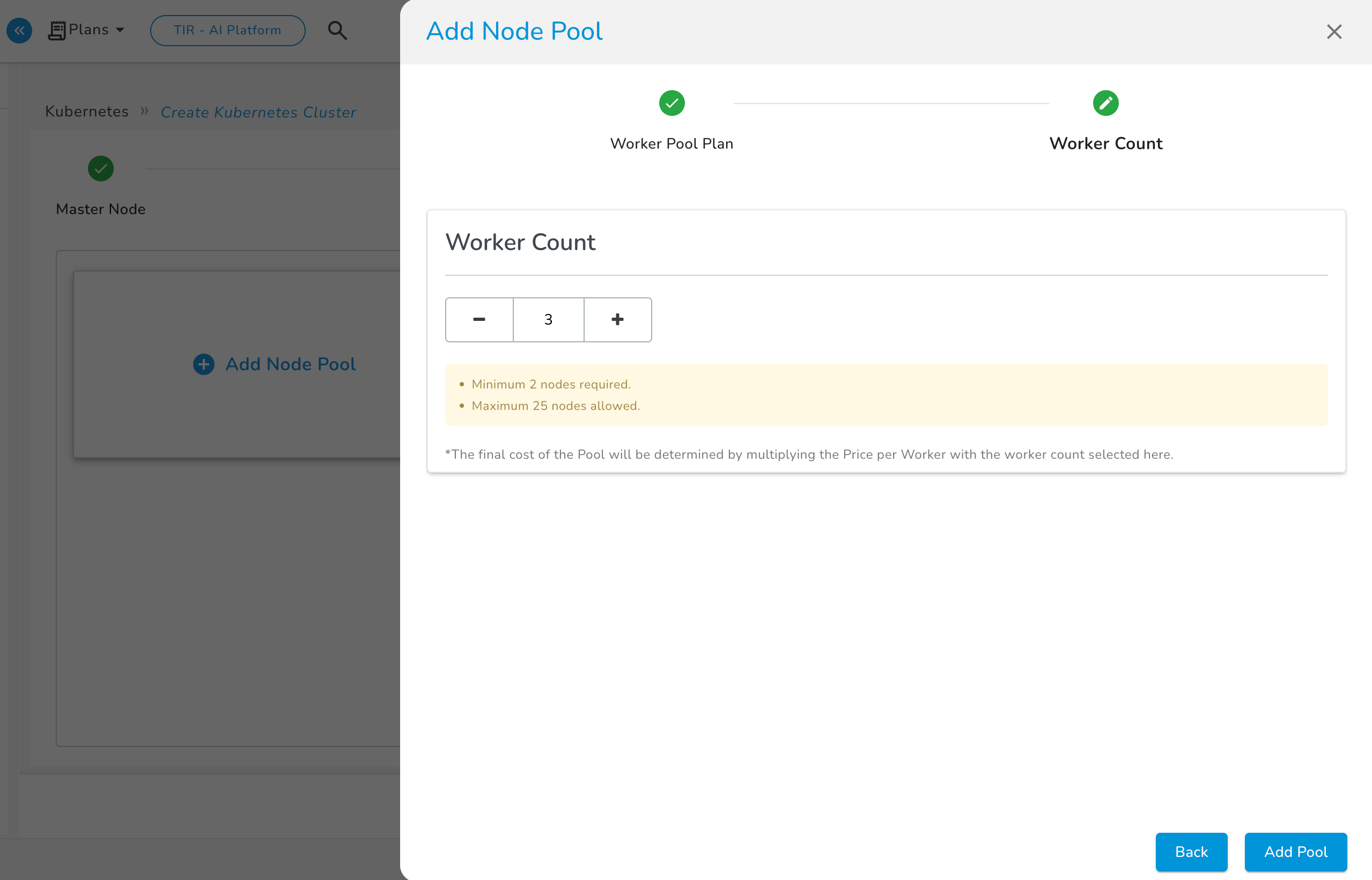

On the worker count page, set the desired number of worker nodes in your static pool.

AutoScale Pool

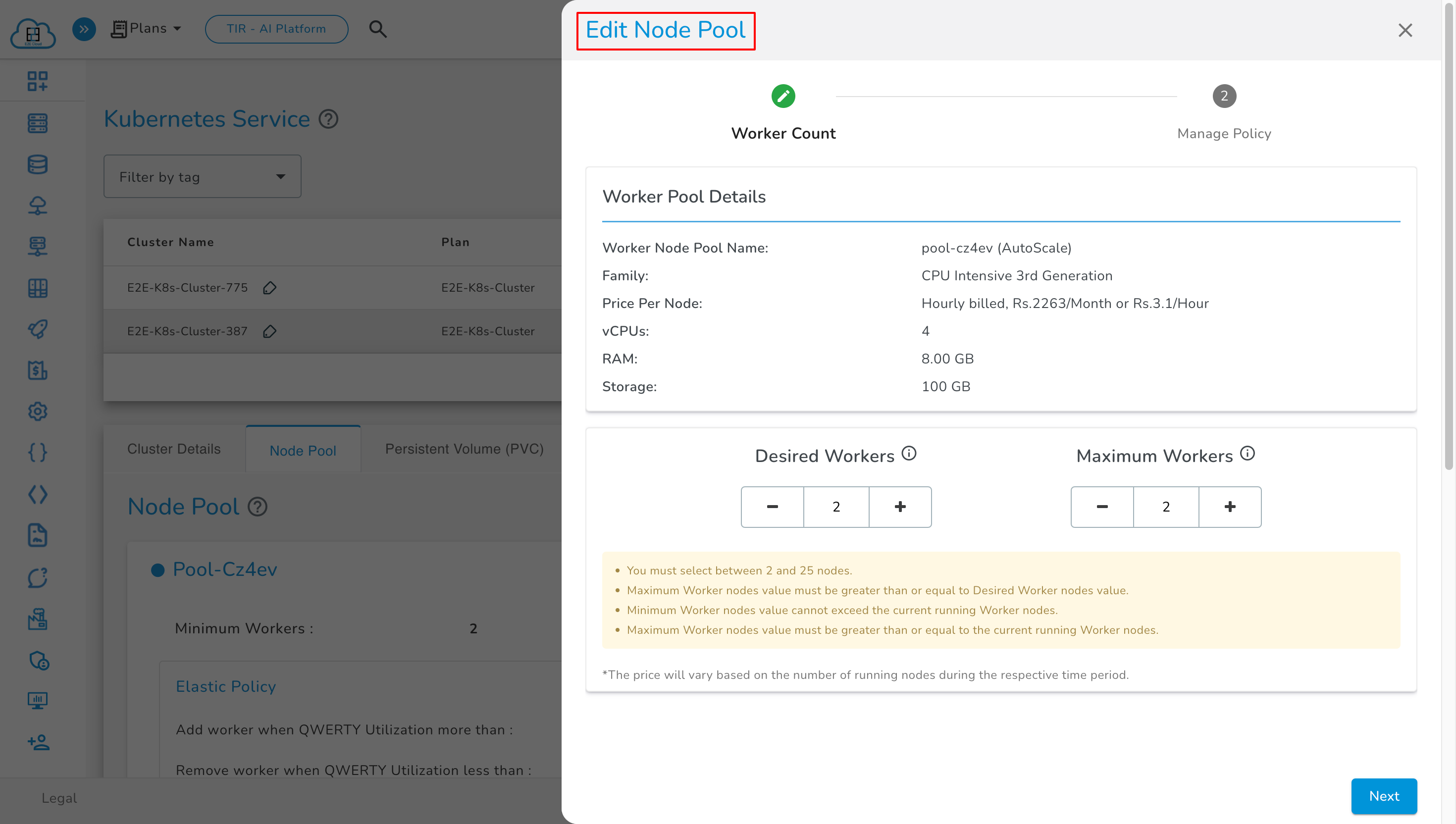

For an AutoScale Pool, select the worker node configuration and click Next.

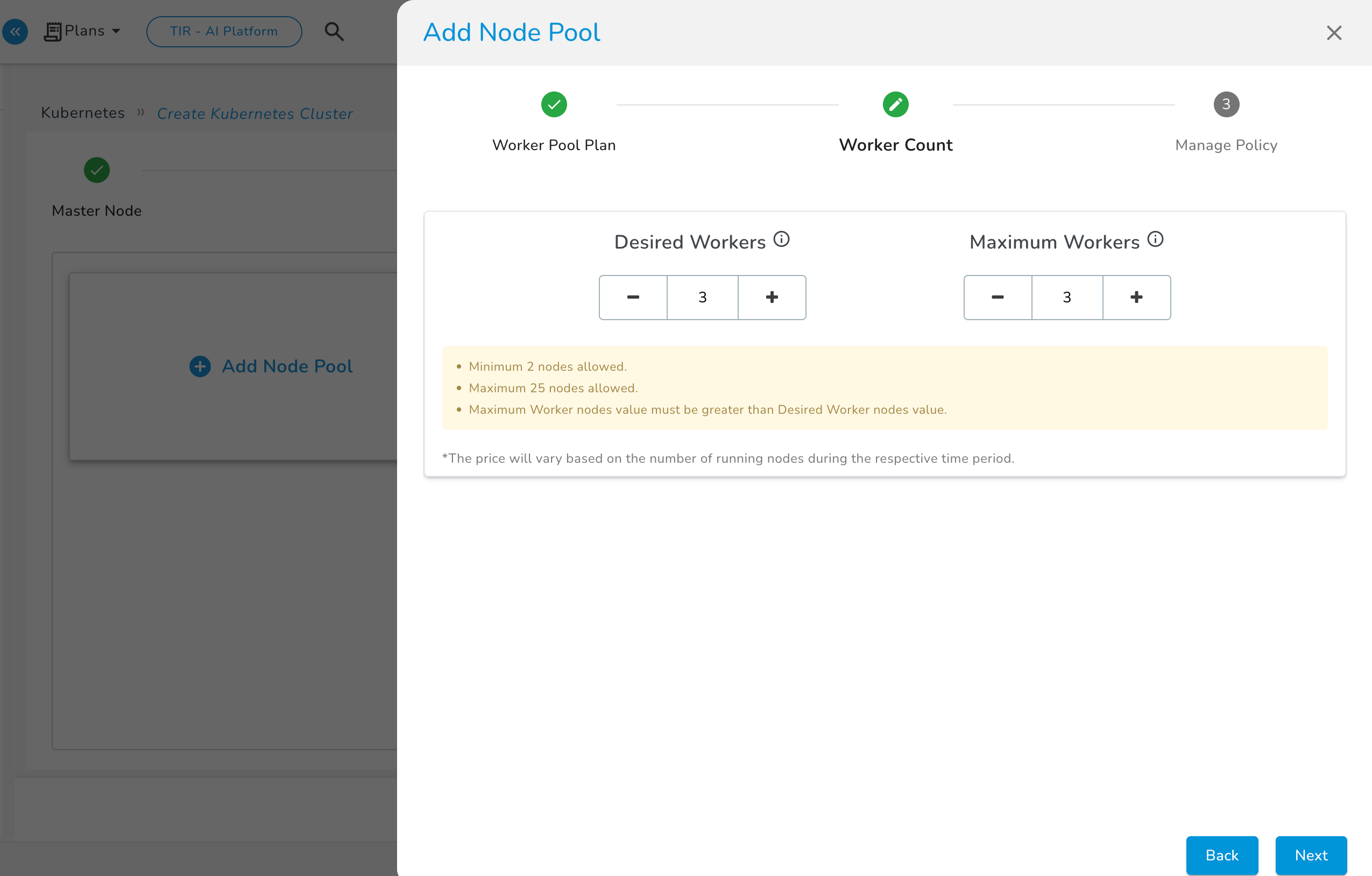

On the worker count page, set the desired and maximum number of worker nodes in your AutoScale Pool.

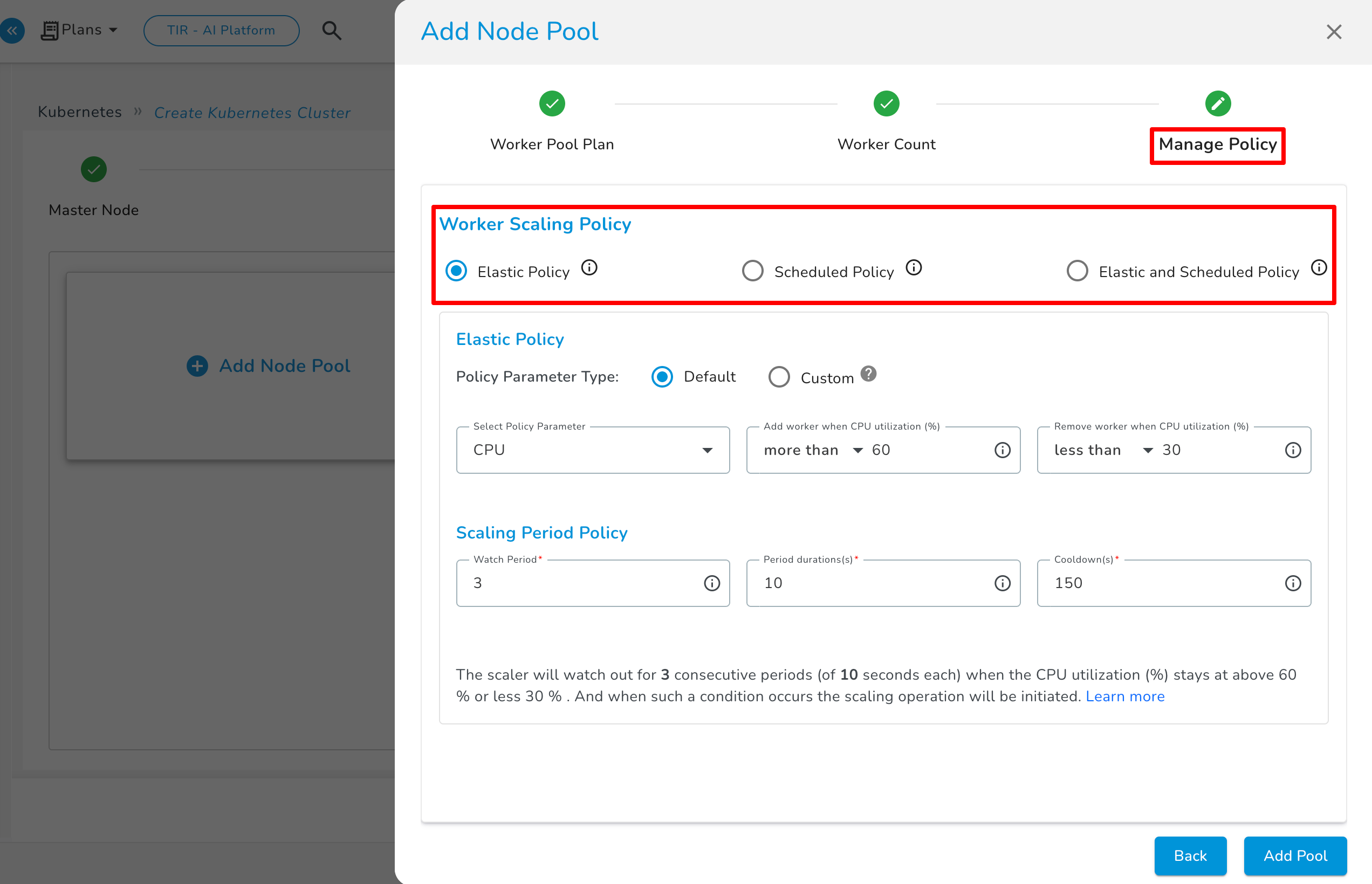

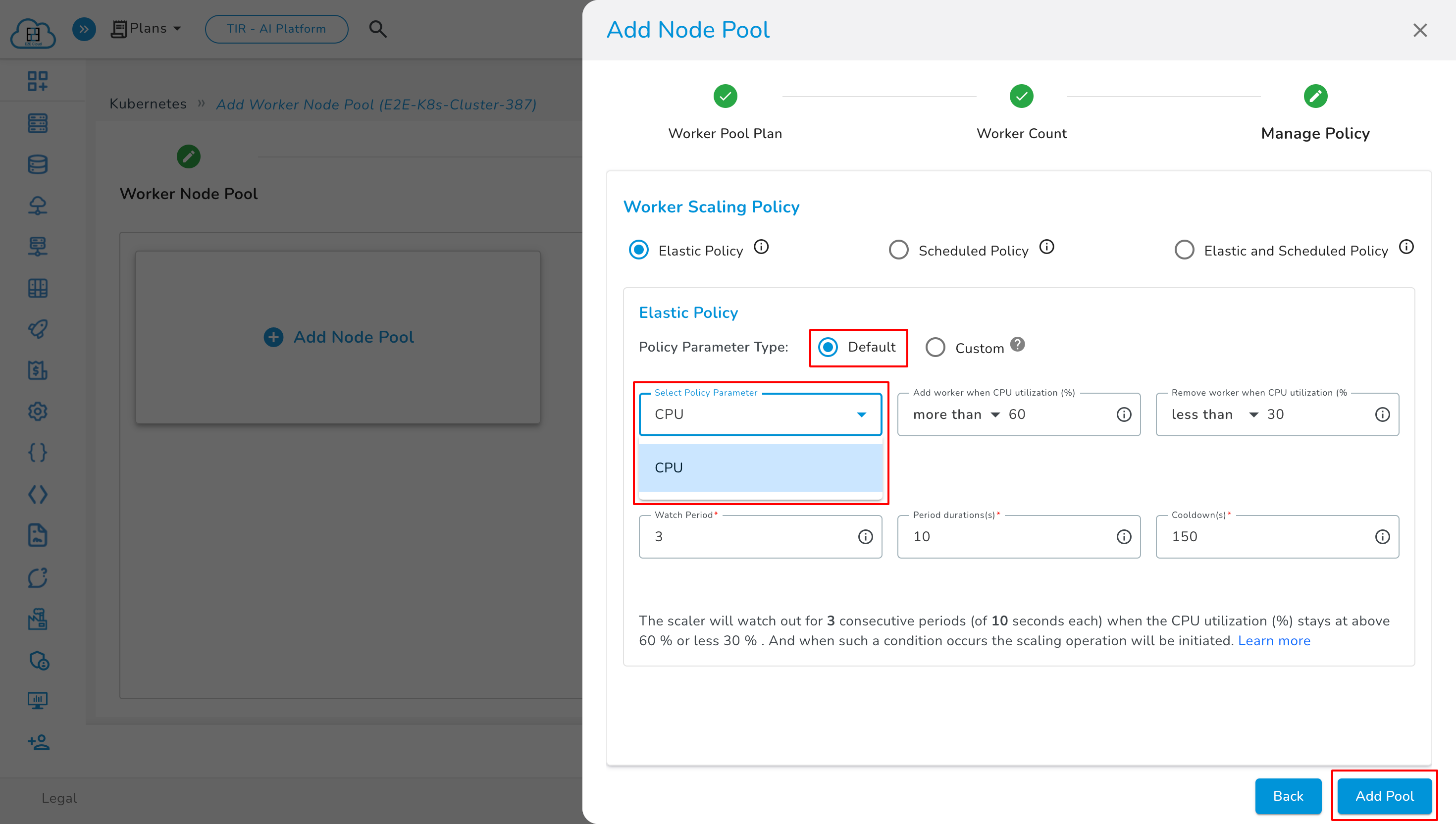

Click "Next" to proceed to the manage policy page. Define the scaling policy for the node pool here.

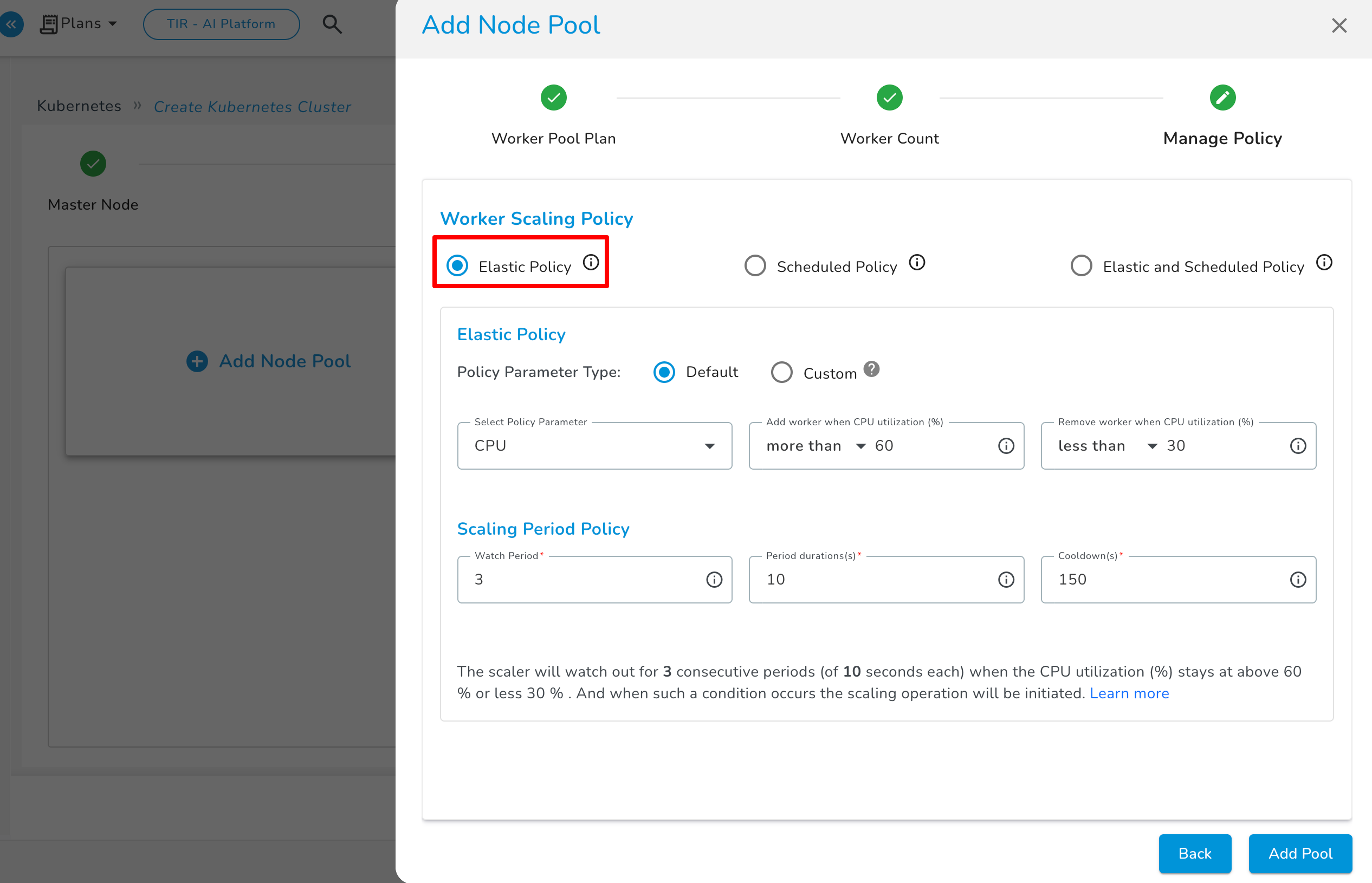

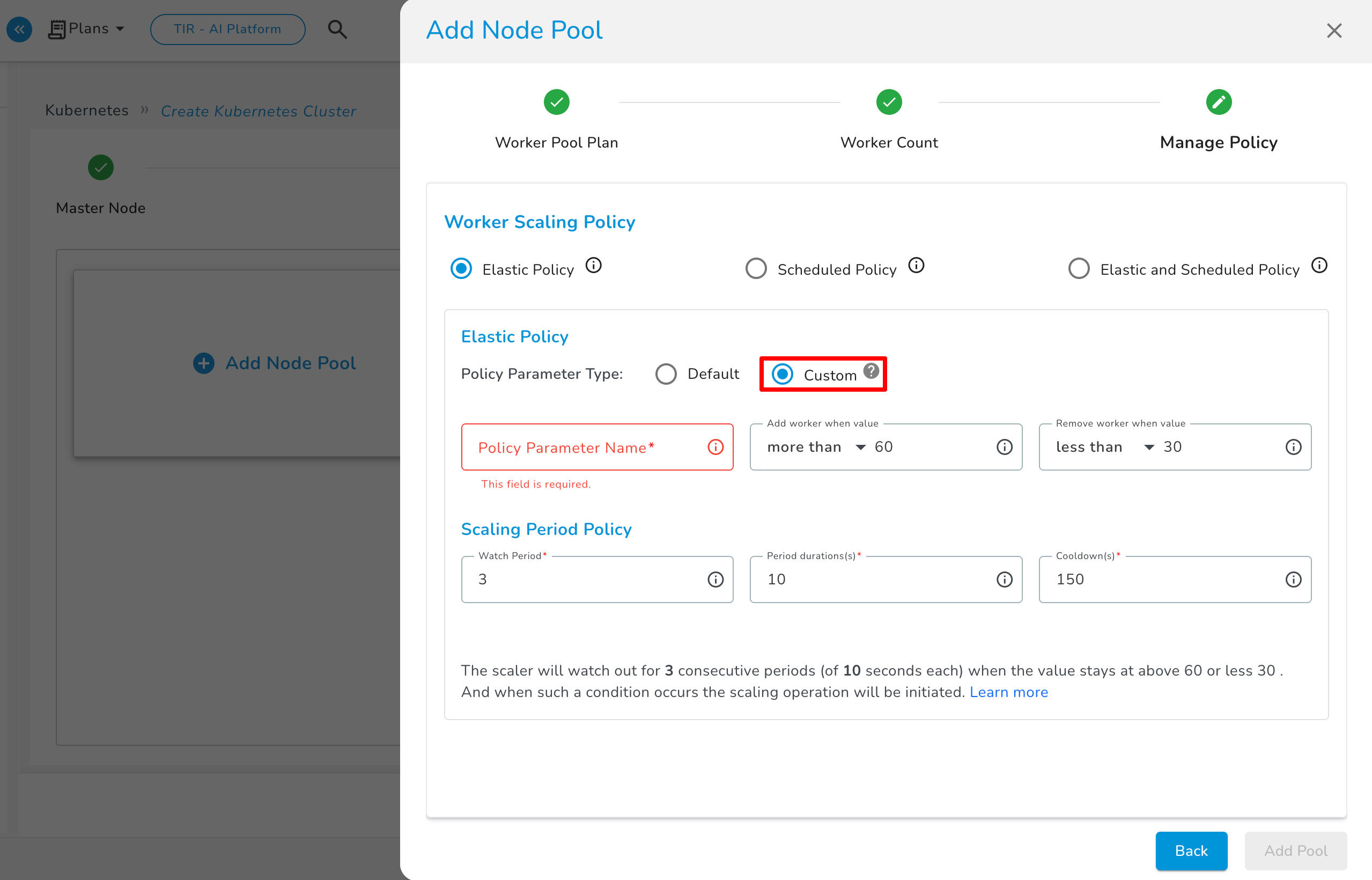

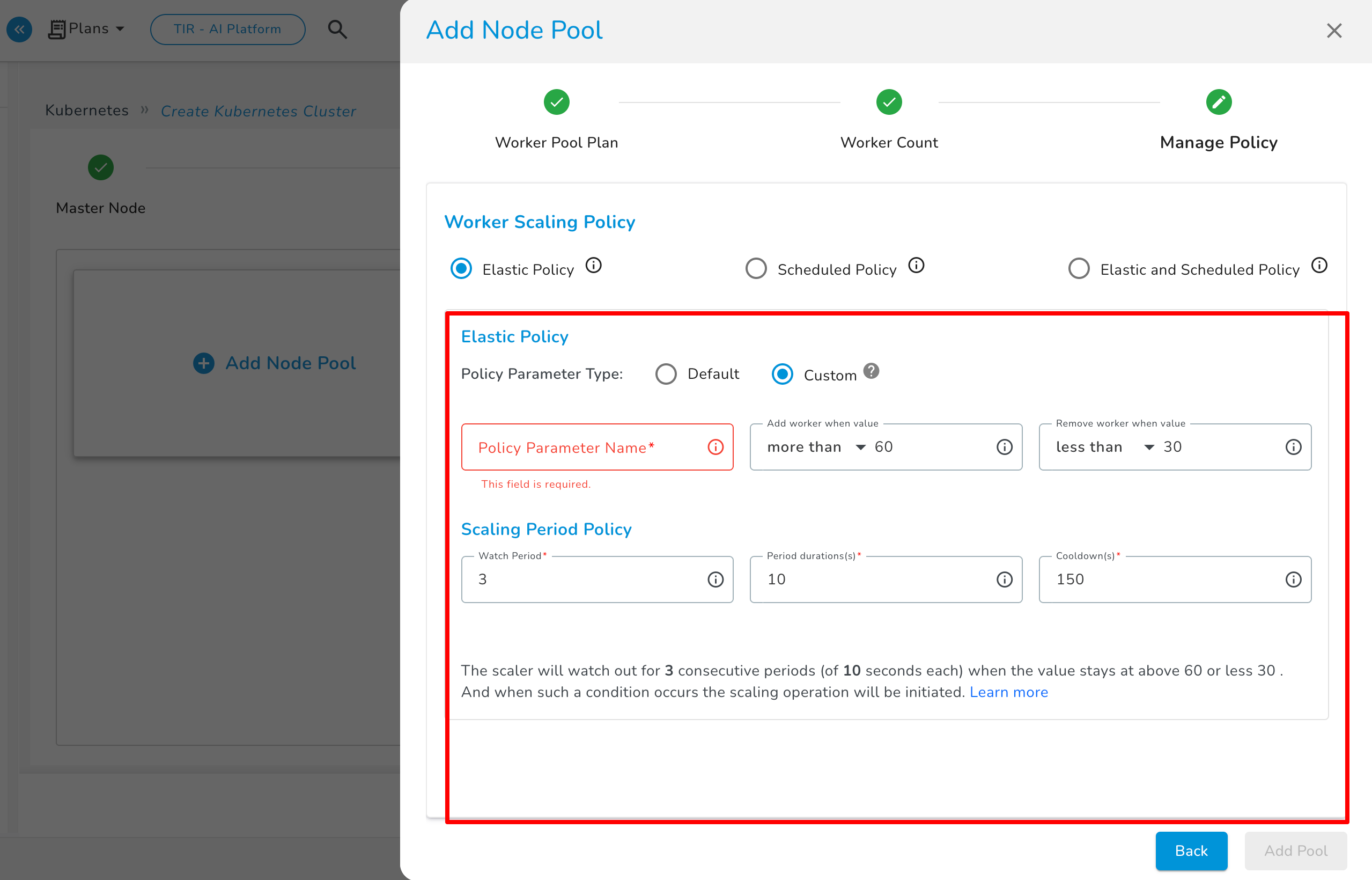

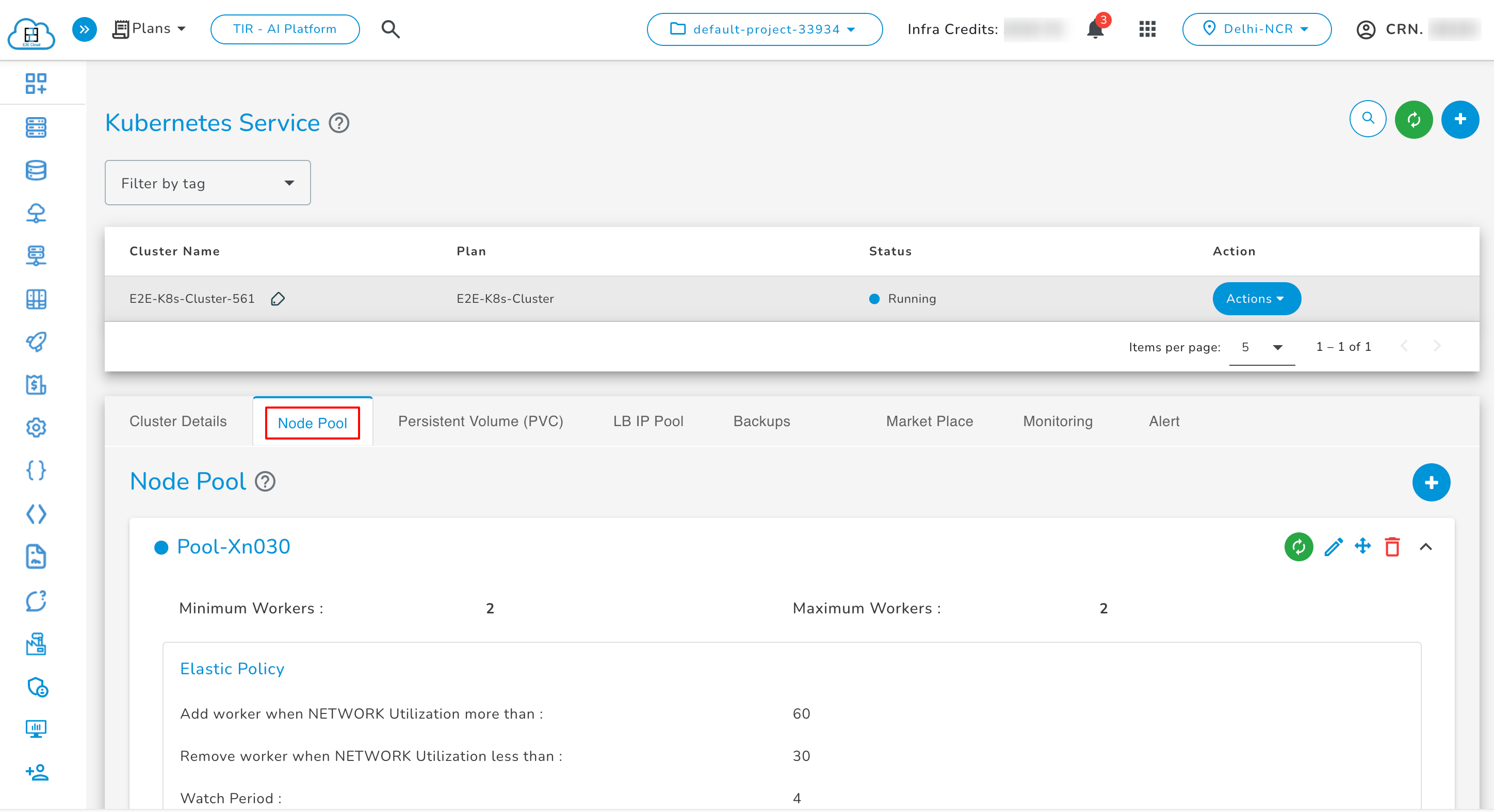

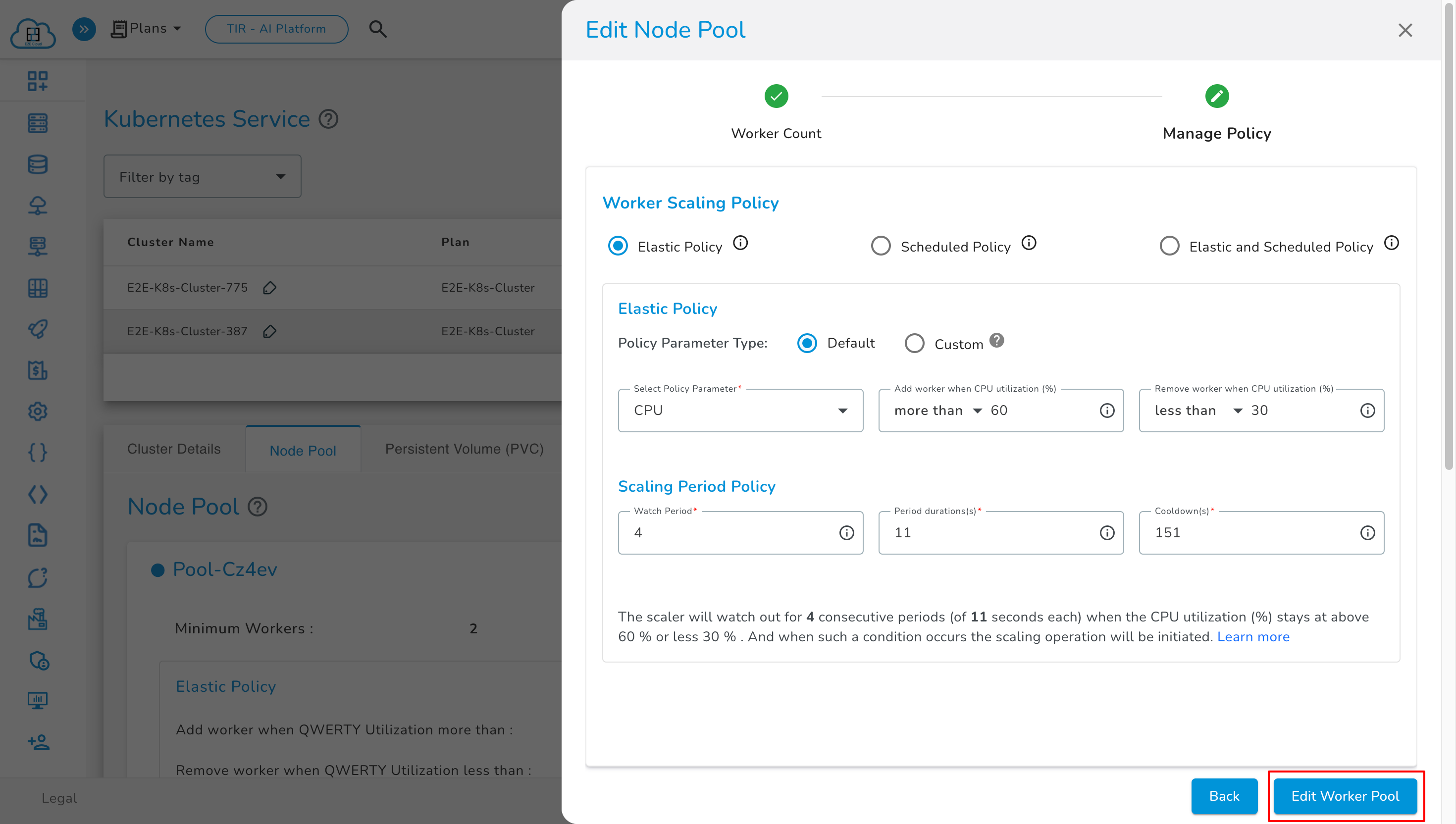

Elastic Policy

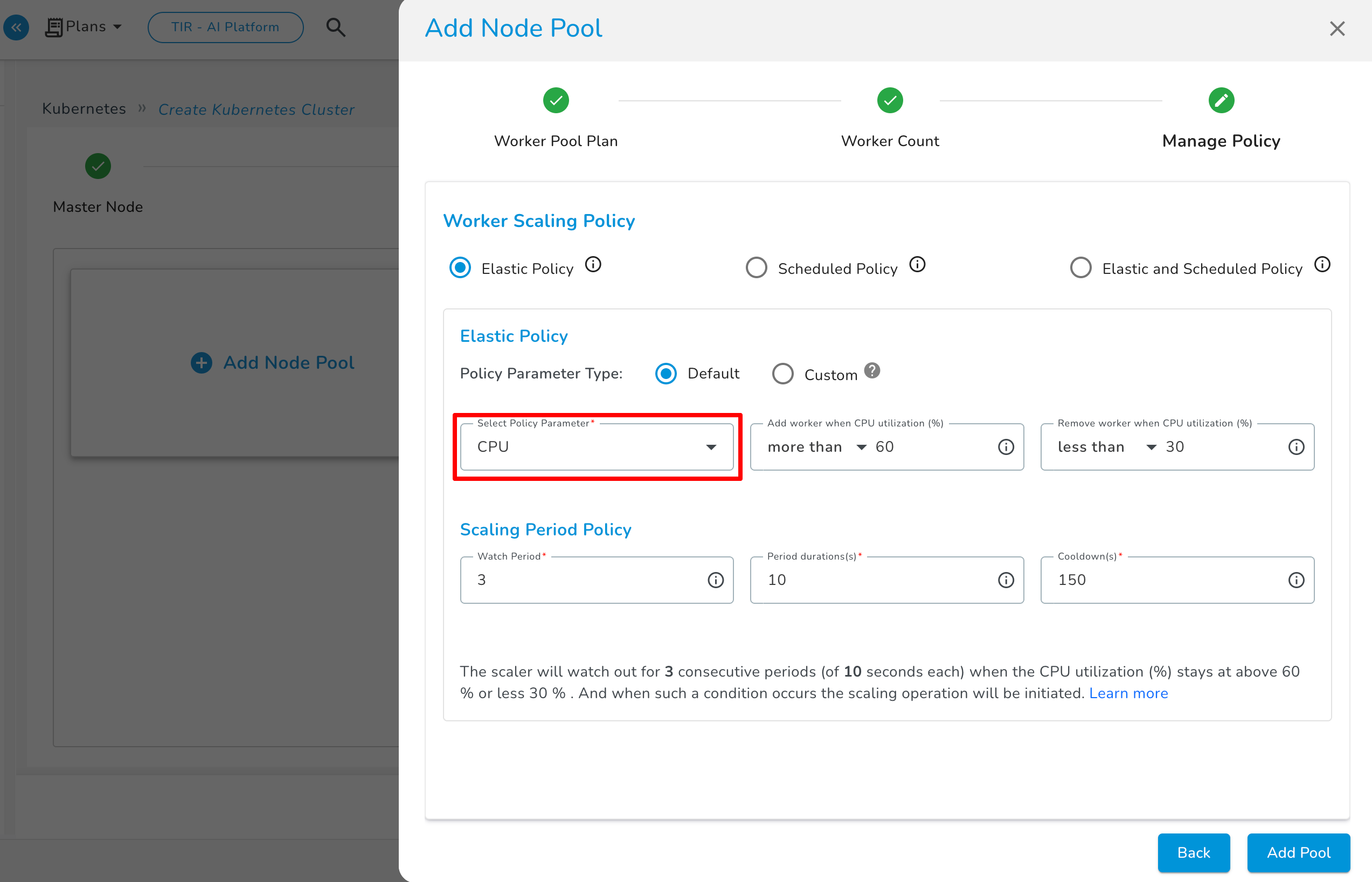

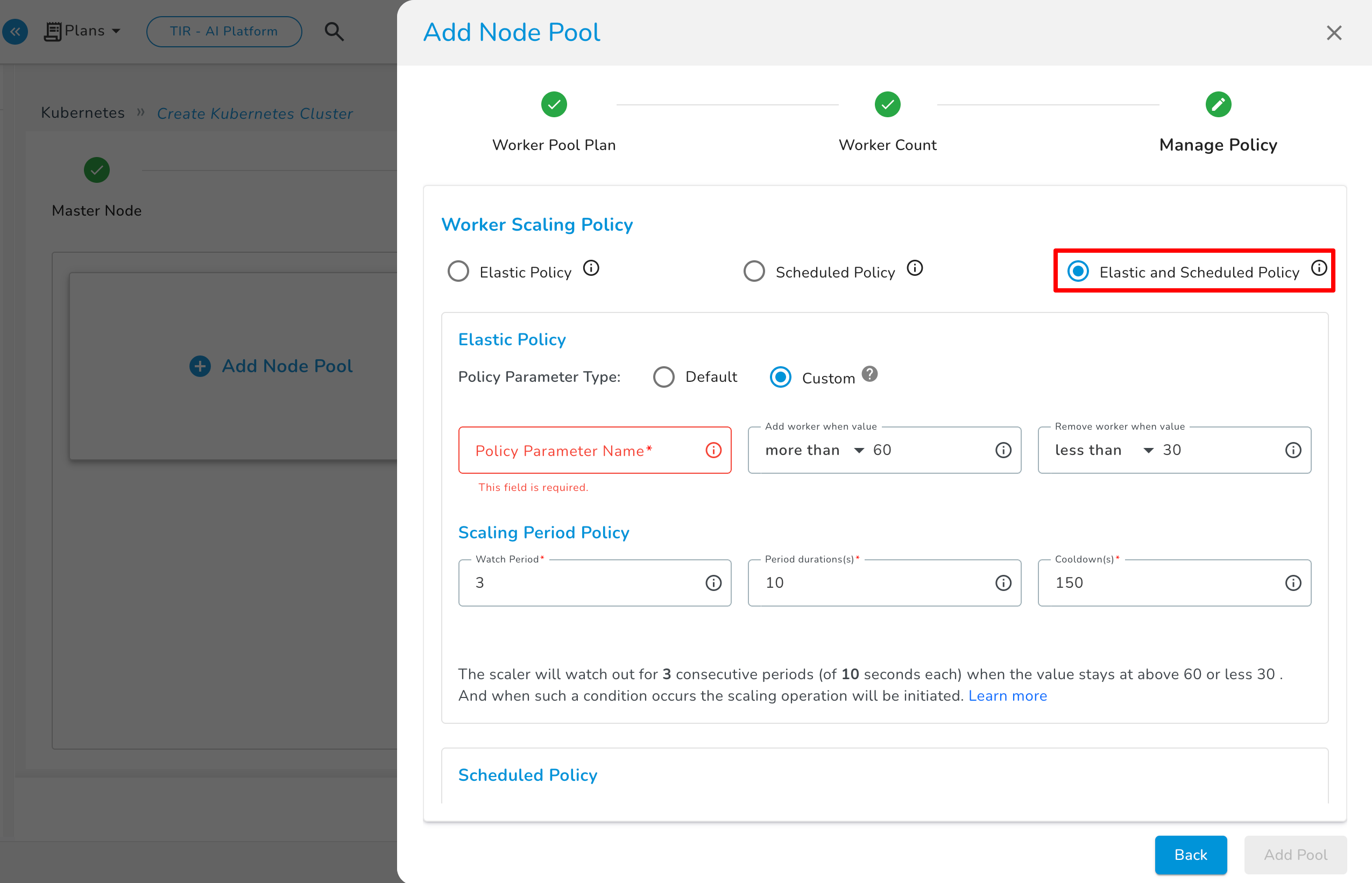

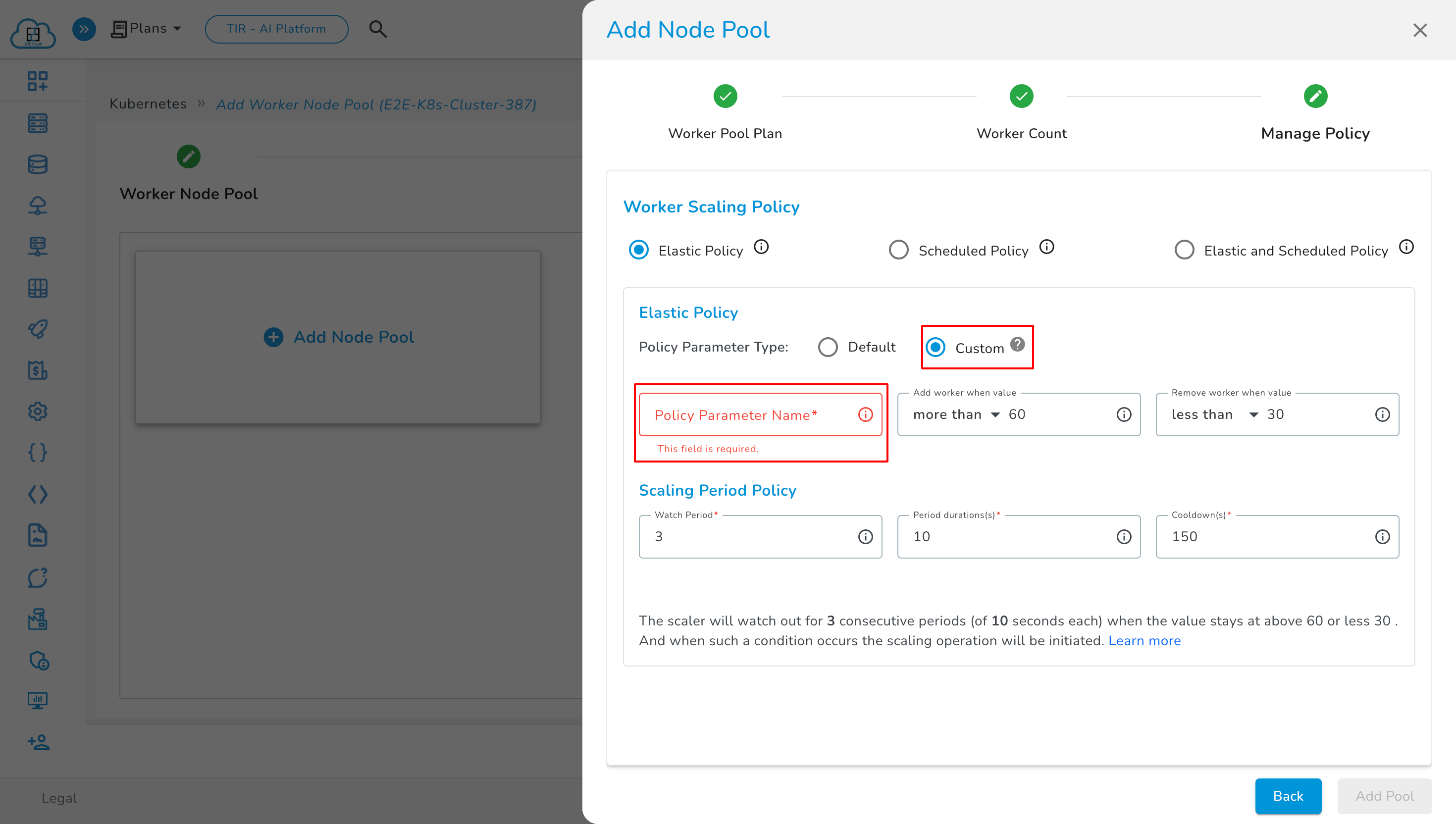

The Elastic Policy allows you to choose between two scaling policies: Default or Custom. If you choose Default, scaling will be based on CPU or Memory utilization. If you choose Custom, you can specify a custom attribute to determine scaling.

Default: With the Default policy, you can choose between two policy parameters:

- CPU: This policy scales the number of worker nodes based on CPU utilization. When CPU utilization reaches the threshold you set, nodes are added according to your policy; when CPU utilization decreases, nodes are removed.

- MEMORY: This policy scales the number of worker nodes based on memory utilization. When memory utilization reaches the threshold you set, nodes are added according to your policy; when memory utilization decreases, nodes are removed.
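The threshold logic described for the CPU and MEMORY parameters can be pictured with a small shell function. This is a conceptual sketch, not how the E2E autoscaler is implemented: it assumes integer percentages, separate scale-up and scale-down thresholds, a one-node step per decision, and a minimum node count, none of which are specified on this page.

```shell
# Conceptual sketch only: how a threshold-based elastic policy might pick the
# next node count. NOT the E2E autoscaler's implementation.
# Arguments (integers; names are illustrative):
#   $1 current utilization (%)   $2 scale-up threshold (%)
#   $3 scale-down threshold (%)  $4 current node count
#   $5 minimum node count        $6 maximum node count
next_node_count() {
  local util=$1 up=$2 down=$3 nodes=$4 min=$5 max=$6
  if [ "$util" -ge "$up" ] && [ "$nodes" -lt "$max" ]; then
    echo $((nodes + 1))   # above the upper threshold: add one node
  elif [ "$util" -le "$down" ] && [ "$nodes" -gt "$min" ]; then
    echo $((nodes - 1))   # below the lower threshold: remove one node
  else
    echo "$nodes"         # inside the band, or already at a limit
  fi
}
```

For example, next_node_count 85 80 30 3 2 5 prints 4, and next_node_count 20 80 30 3 2 5 prints 2.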

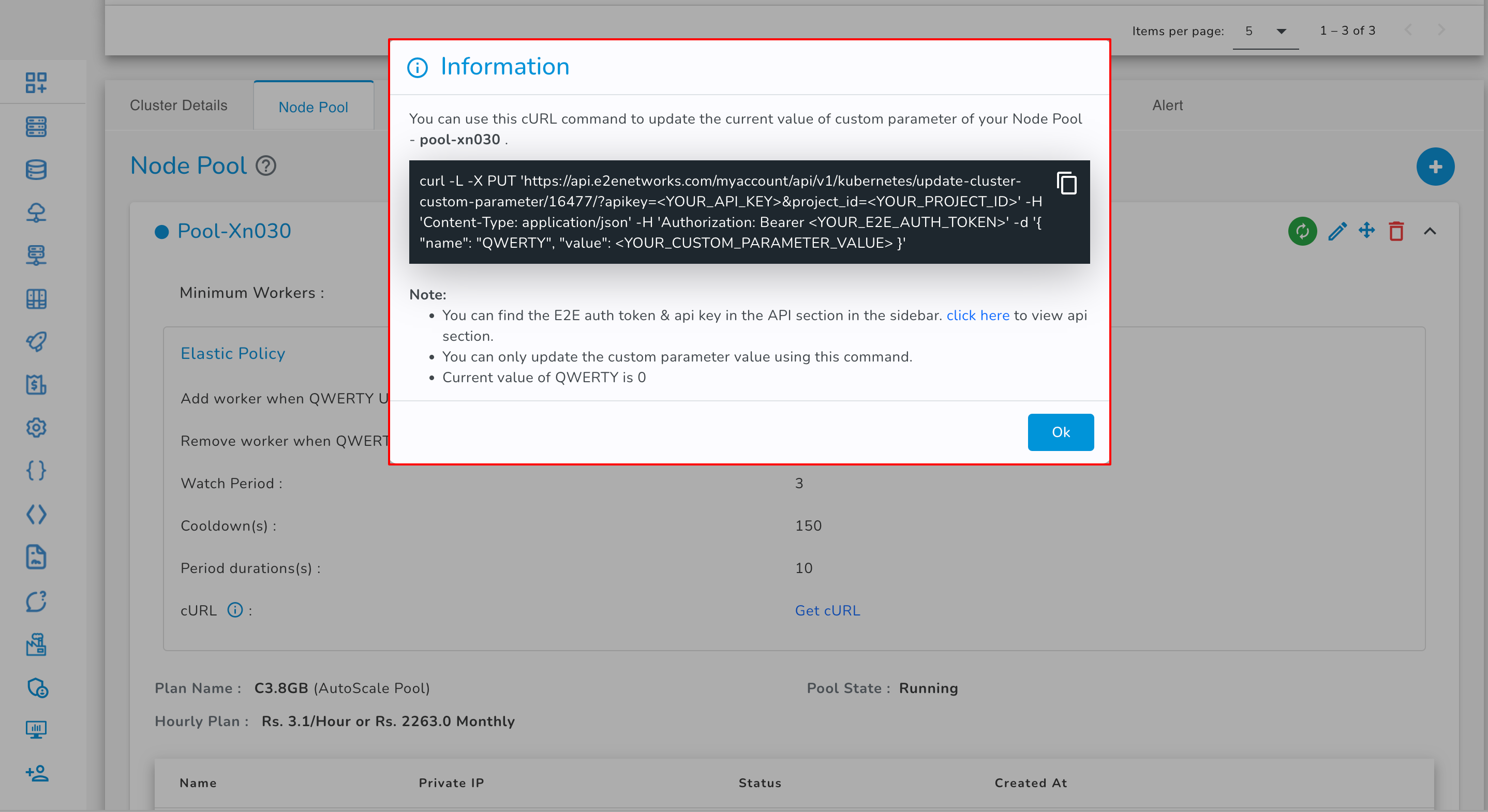

Custom: Once an auto-scaling configuration with custom parameters is created, you'll receive a curl command for updating the custom parameter's value. You can use this command in scripts, hooks, cron jobs, and similar automation. When the value of the custom parameter reaches a specific threshold, the number of nodes increases; when the value decreases, the number of nodes decreases.

The default value of the custom parameter is set to 0.

Manage Custom Policy

The custom policy feature of the Kubernetes AutoScale Pool lets you define your own custom attribute, which the auto-scaling service uses to make scaling decisions. By updating this attribute's value, you can scale your node pool up or down. Once the Kubernetes cluster is launched, you will receive a curl command for updating the attribute according to your use case.

Policy Parameter Name: This field is where you enter the name of the custom attribute you want to monitor. Use descriptive names related to the aspect of your service being monitored, such as “NETTX” for Network traffic or “DISKWRIOPS” for disk write operations.

Node Utilization Section: Specify the values that will trigger a scale-up (increase in cardinality) or scale-down (decrease in cardinality) operation based on your preferences.

Scaling Period Policy: Define the watch period, duration of each period, and cooldown period.
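The watch period and cooldown settings add time to the scaling decision. As a rough sketch of one common interpretation (an assumption, not E2E's documented behavior), a scale-up fires only if every sample in the last N watch periods breached the threshold and the cooldown since the previous scaling action has passed. The function name and argument order are illustrative.

```shell
# Conceptual sketch, not E2E's algorithm.
# $1 threshold, $2 required consecutive watch periods,
# $3 seconds since the last scaling action, $4 cooldown in seconds,
# remaining args: one metric sample per period, oldest first.
# Returns 0 (scale up) or 1 (do nothing).
should_scale_up() {
  local threshold=$1 periods=$2 since_last=$3 cooldown=$4
  shift 4
  # Still cooling down after the previous action.
  [ "$since_last" -ge "$cooldown" ] || return 1
  # Not enough samples yet to cover the watch window.
  [ "$#" -ge "$periods" ] || return 1
  # Keep only the most recent $periods samples.
  shift $(( $# - periods ))
  local s
  for s in "$@"; do
    [ "$s" -ge "$threshold" ] || return 1
  done
  return 0
}
```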

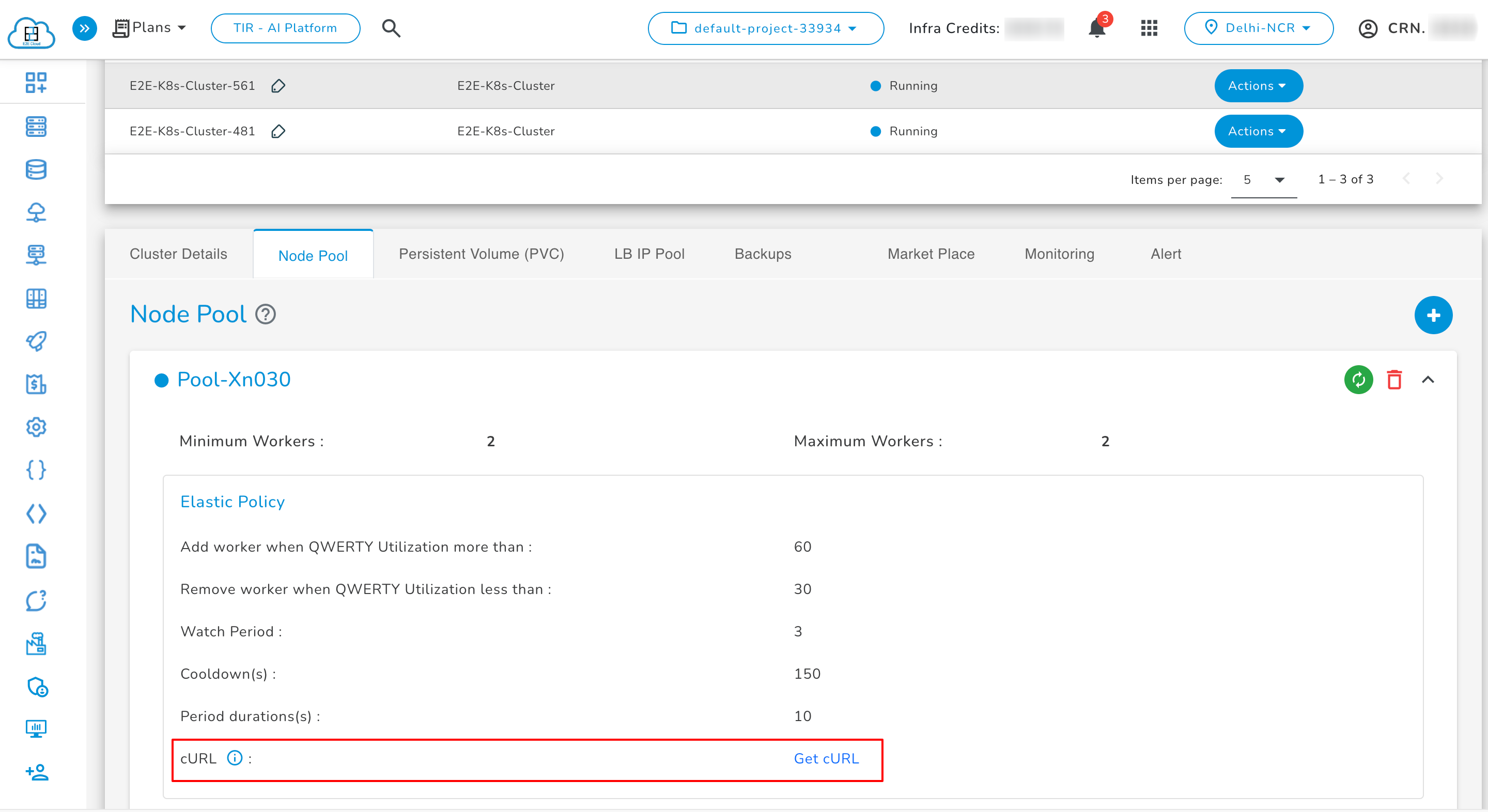

How to Get the curl Command

You can obtain a curl command by clicking on 'Get Curl'.

After you finish filling in the fields, click the 'Create Cluster' button. When the cluster is up and running, you'll get a curl command. You can run it in Postman by entering your API Key and token, and then changing the custom parameter's value in Postman's Body section.

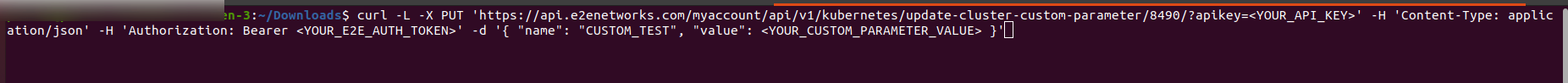

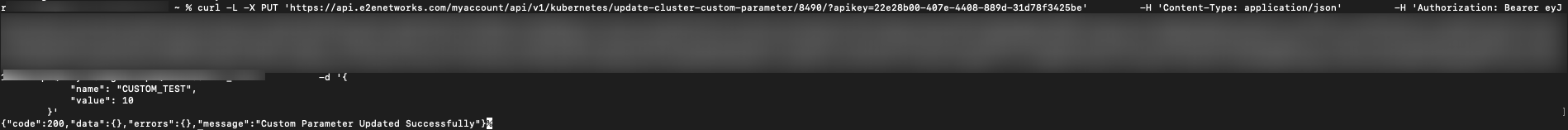

How to Change the Custom Parameter Value with curl from the CLI

Paste the curl command you received into a terminal, fill in your API Key and Bearer token, and set the custom parameter to the value you want.
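The curl command you get from 'Get Curl' can be reused from a script or cron job. The sketch below assumes you saved that command to a file with your API Key and Bearer token filled in and the custom parameter's value replaced by the placeholder __VALUE__; the file name, the placeholder, and the CURL_TEMPLATE and DRY_RUN variables are hypothetical conventions for this example, not part of the E2E API.

```shell
# Sketch under assumptions: CURL_TEMPLATE points to a file holding the curl
# command from 'Get Curl', with the value replaced by __VALUE__ (hypothetical).
CURL_TEMPLATE=${CURL_TEMPLATE:-./custom-param-curl.txt}

push_custom_value() {
  local value=$1 cmd
  # Accept only digits and dots; the parameter's default value is 0.
  case "$value" in
    ''|*[!0-9.]*) echo "invalid value: $value" >&2; return 2 ;;
  esac
  # Substitute the placeholder in the saved command.
  cmd=$(sed "s/__VALUE__/$value/g" "$CURL_TEMPLATE") || return 1
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$cmd"   # preview the command without running it
  else
    bash -c "$cmd"         # run the saved curl command
  fi
}
```

A cron job could then call push_custom_value with whatever value your own script computes, such as the length of a work queue.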

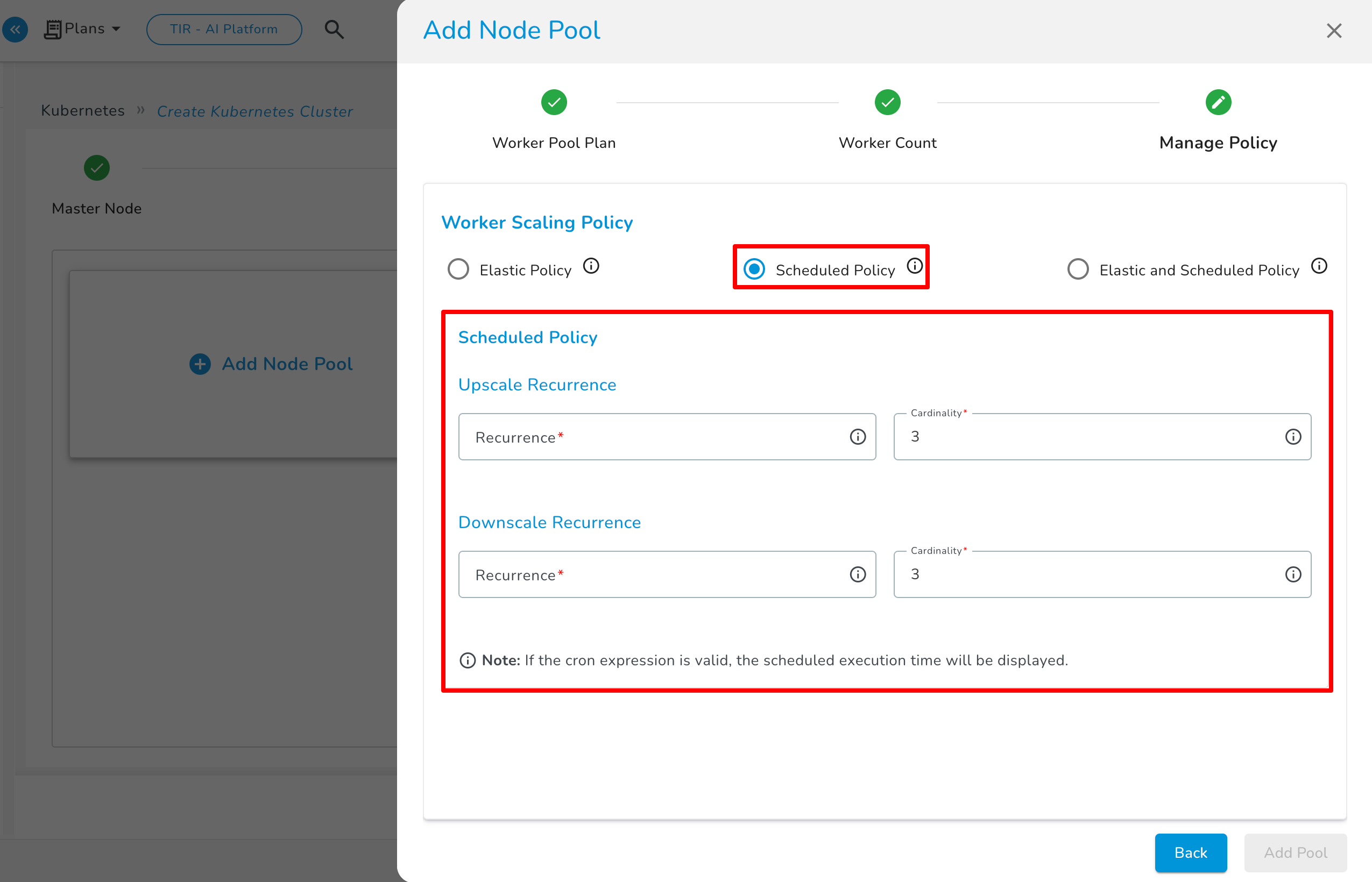

Scheduled Policy

The Scheduled Policy of an AutoScale Pool lets you adjust the pool's capacity automatically on a predetermined schedule. For example, you can use a scheduled policy to increase the number of worker nodes during peak traffic hours and decrease it during off-peak hours.

- Recurrence: Refers to the ability to schedule scaling actions on a recurring basis. This is useful for applications with predictable traffic patterns, such as a website that receives more traffic on weekends or a web application that is busiest during peak business hours.

- Upscale and Downscale Recurrence refer to increasing and decreasing the number of worker nodes in the AutoScale Pool, respectively, on a recurring basis such as every day, week, or month.

- Upscale Recurrence: Specify the cardinality of nodes at a specific time by setting the cron field. Ensure that the value is lower than the maximum number of nodes you previously set.

- Downscale Recurrence: Specify the cardinality of nodes at a specific time by setting the cron field. Ensure that the value is lower than the cardinality you set for Upscale Recurrence.
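Before entering a schedule, it can help to sanity-check it. The function below is an illustrative sketch: it assumes the cron settings accept standard five-field cron expressions (minute, hour, day of month, month, day of week) and applies the two cardinality rules above as written. The function name and argument order are hypothetical.

```shell
# Sketch: validate a scheduled policy before entering it in MyAccount.
# $1 upscale cron, $2 upscale node count, $3 downscale cron,
# $4 downscale node count, $5 pool maximum. Prints "ok" or an error.
check_schedule() {
  local up_cron=$1 up_nodes=$2 down_cron=$3 down_nodes=$4 max_nodes=$5
  local expr fields
  for expr in "$up_cron" "$down_cron"; do
    # awk splits on whitespace, so NF is the number of cron fields.
    fields=$(printf '%s\n' "$expr" | awk '{print NF}')
    if [ "$fields" -ne 5 ]; then
      echo "expected 5 cron fields, got $fields: $expr"
      return 1
    fi
  done
  if [ "$up_nodes" -ge "$max_nodes" ]; then
    echo "upscale count must be lower than the pool maximum ($max_nodes)"
    return 1
  fi
  if [ "$down_nodes" -ge "$up_nodes" ]; then
    echo "downscale count must be lower than the upscale count ($up_nodes)"
    return 1
  fi
  echo "ok"
}
```

For example, check_schedule '0 9 * * 1-5' 4 '0 20 * * 1-5' 2 5 prints ok: scale up to 4 nodes at 09:00 and down to 2 nodes at 20:00 on weekdays, in a pool whose maximum is 5.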

To use a scheduled policy, select Schedule Policy instead of Elastic Policy, set the upscale and downscale recurrence, and click the Create Scale button.

Elastic and Scheduled Policy

To use both policies for your Kubernetes service, choose the Elastic and Scheduled Policy option, configure the parameters, and proceed with creating the cluster.

Deploy Kubernetes

After filling in all the details successfully, click on the Create Cluster button. It will take a few minutes to set up the scale group, and you will be taken to the ‘Kubernetes Service’ page.

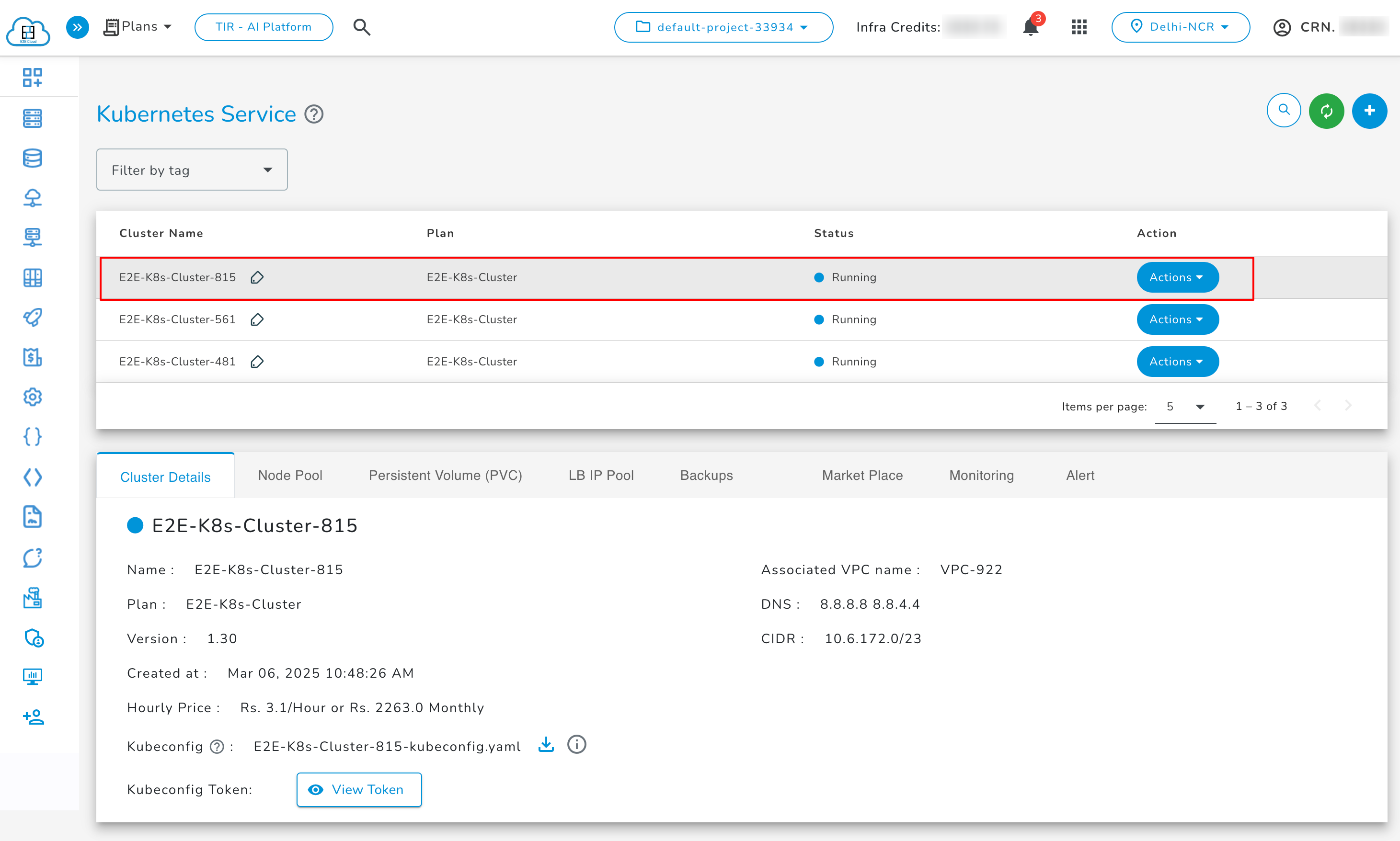

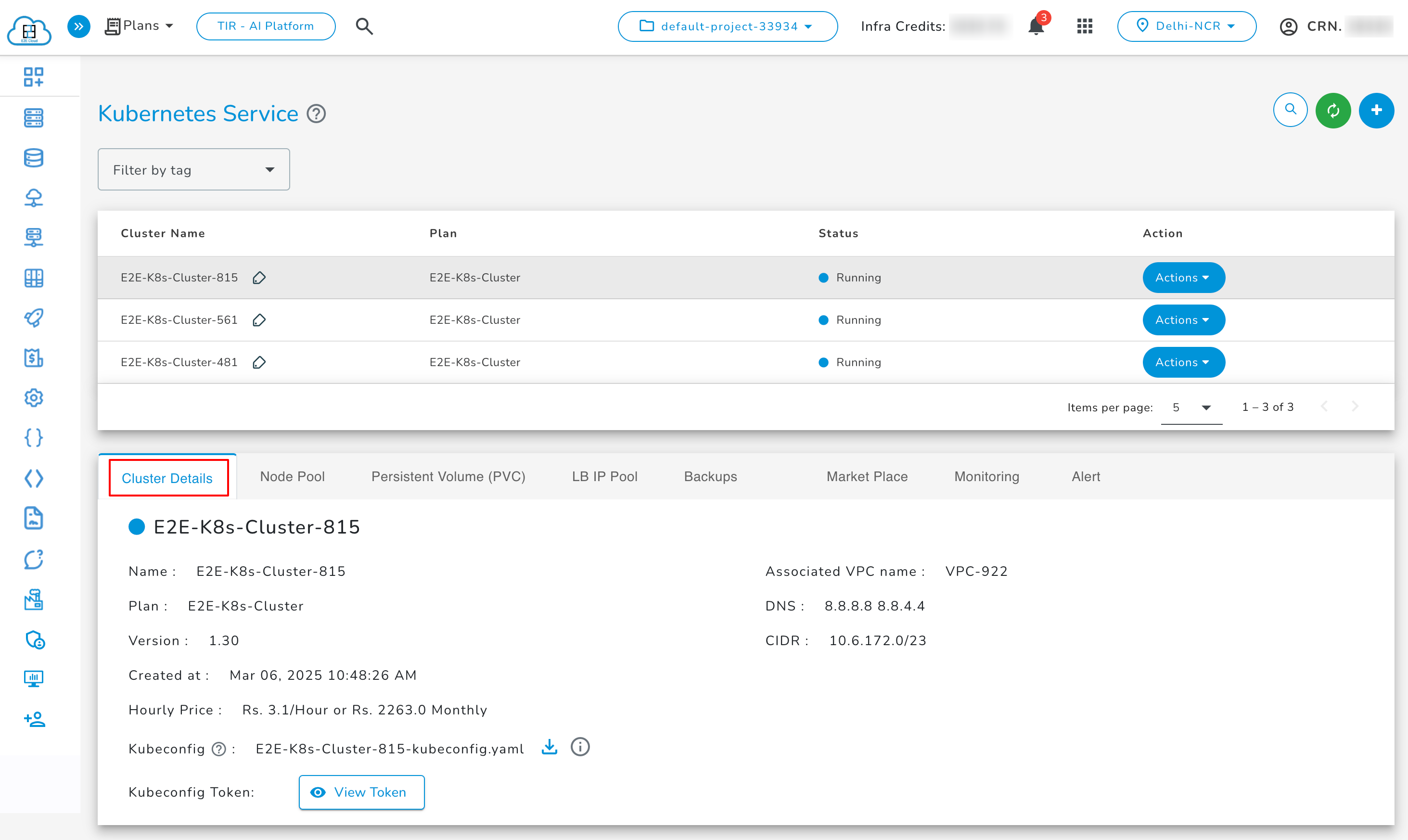

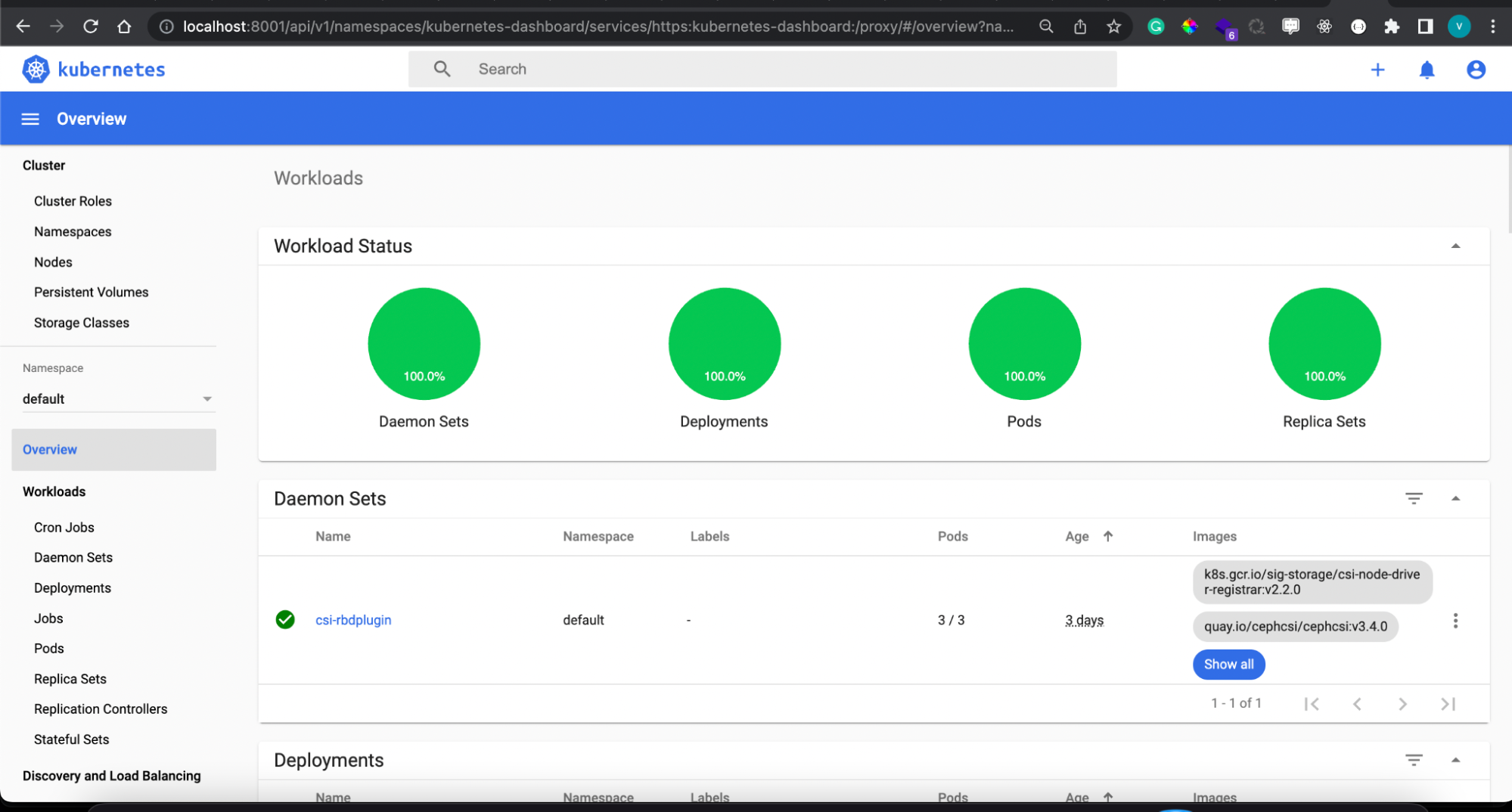

Kubernetes Service

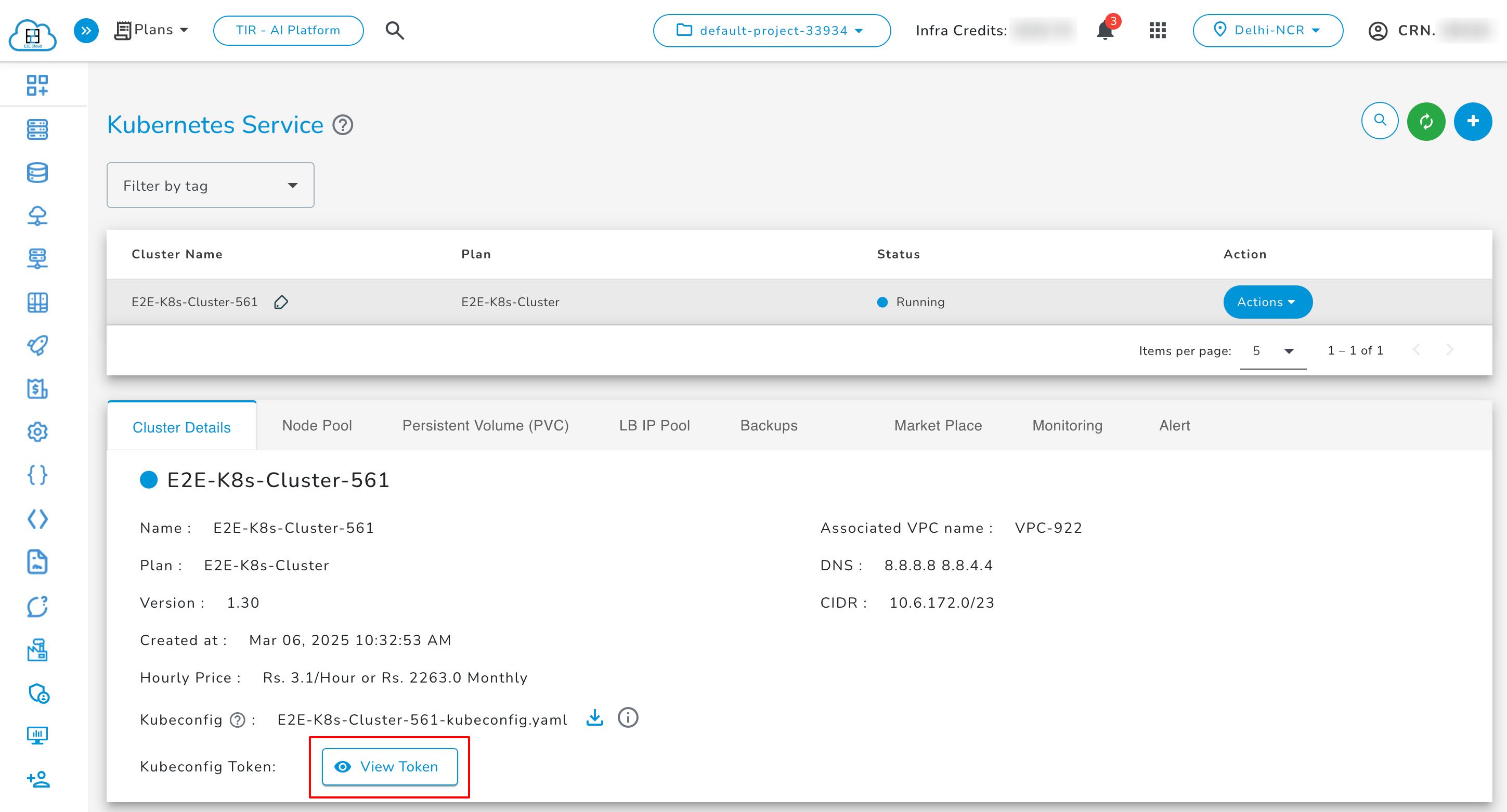

Cluster Details

This section shows the basic details of your Kubernetes cluster, such as its name and Kubernetes version.

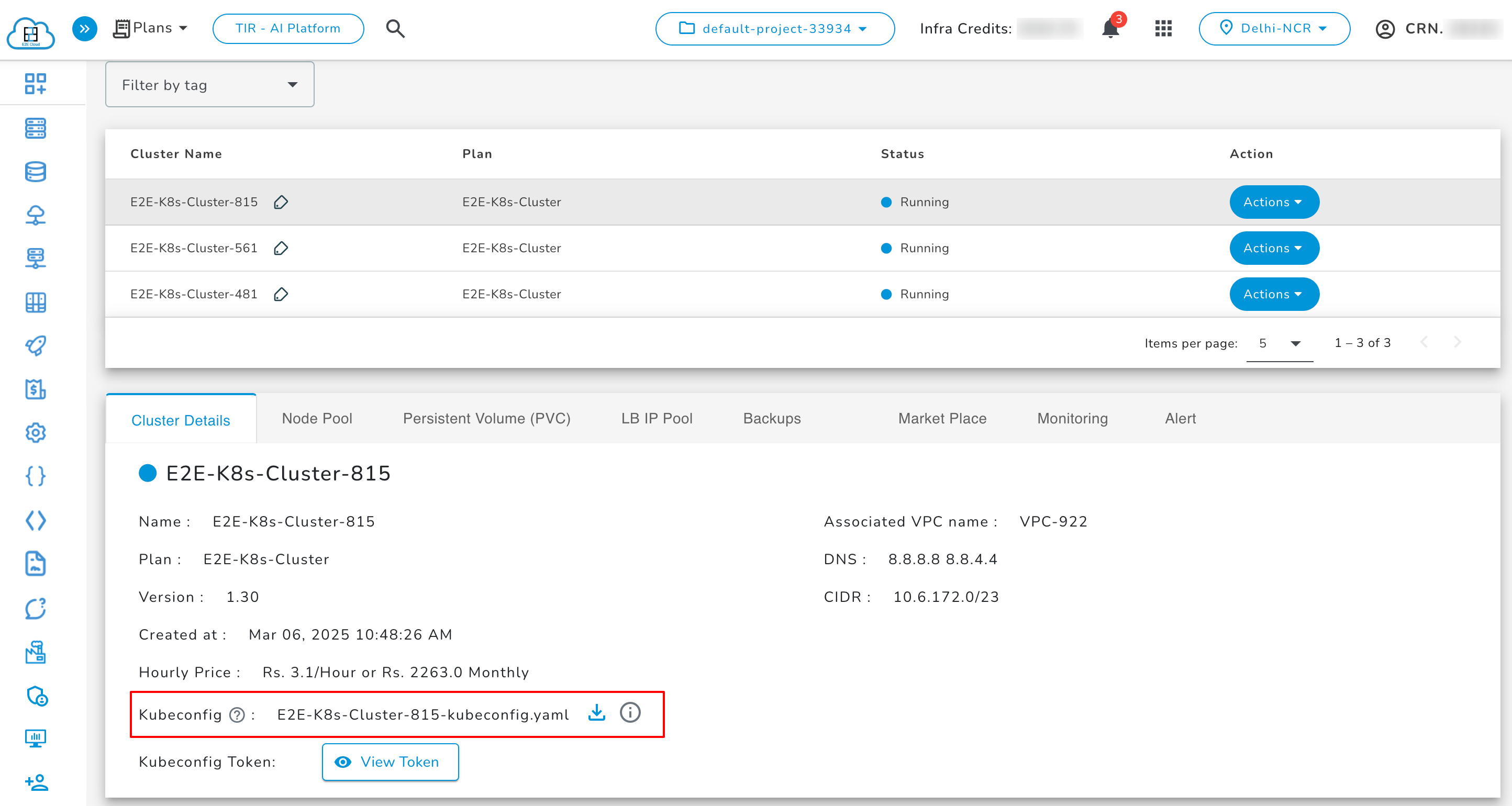

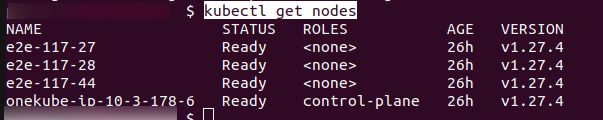

How To Download Kubeconfig.yaml File

- After downloading the kubeconfig file, make sure kubectl is installed on your system.

- To install kubectl, follow this doc.

- Run kubectl --kubeconfig="download_file_name" proxy.

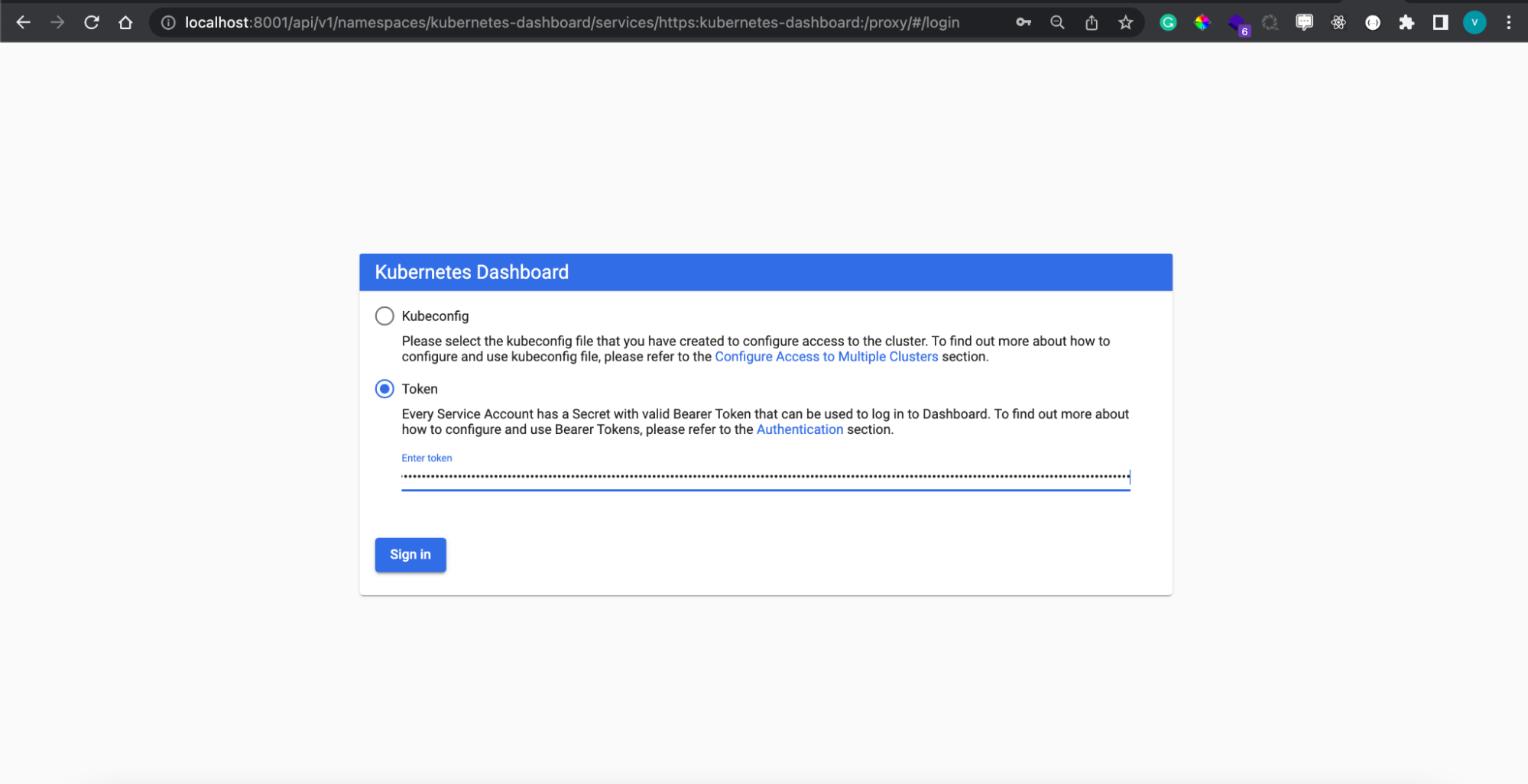

- Open the following URL in your browser:

- Copy the kubeconfig token from the option below:

- Paste the kubeconfig token to access the Kubernetes dashboard.
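If you would rather read the token from the downloaded file than copy it by hand, a short awk helper can extract it. This assumes the kubeconfig authenticates with a bearer token stored as a token: entry under users[].user; check your file first. The function name is illustrative.

```shell
# Sketch, assuming the kubeconfig stores a bearer token as "token: <value>"
# under users[].user. Prints the first token found, without quotes.
kubeconfig_token() {
  local file=${1:?usage: kubeconfig_token <kubeconfig file>}
  awk '$1 == "token:" { v = $2; gsub(/"/, "", v); print v; exit }' "$file"
}
# A kubectl-based alternative that reads the first user entry:
#   kubectl config view --kubeconfig="$file" --raw -o jsonpath='{.users[0].user.token}'
```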

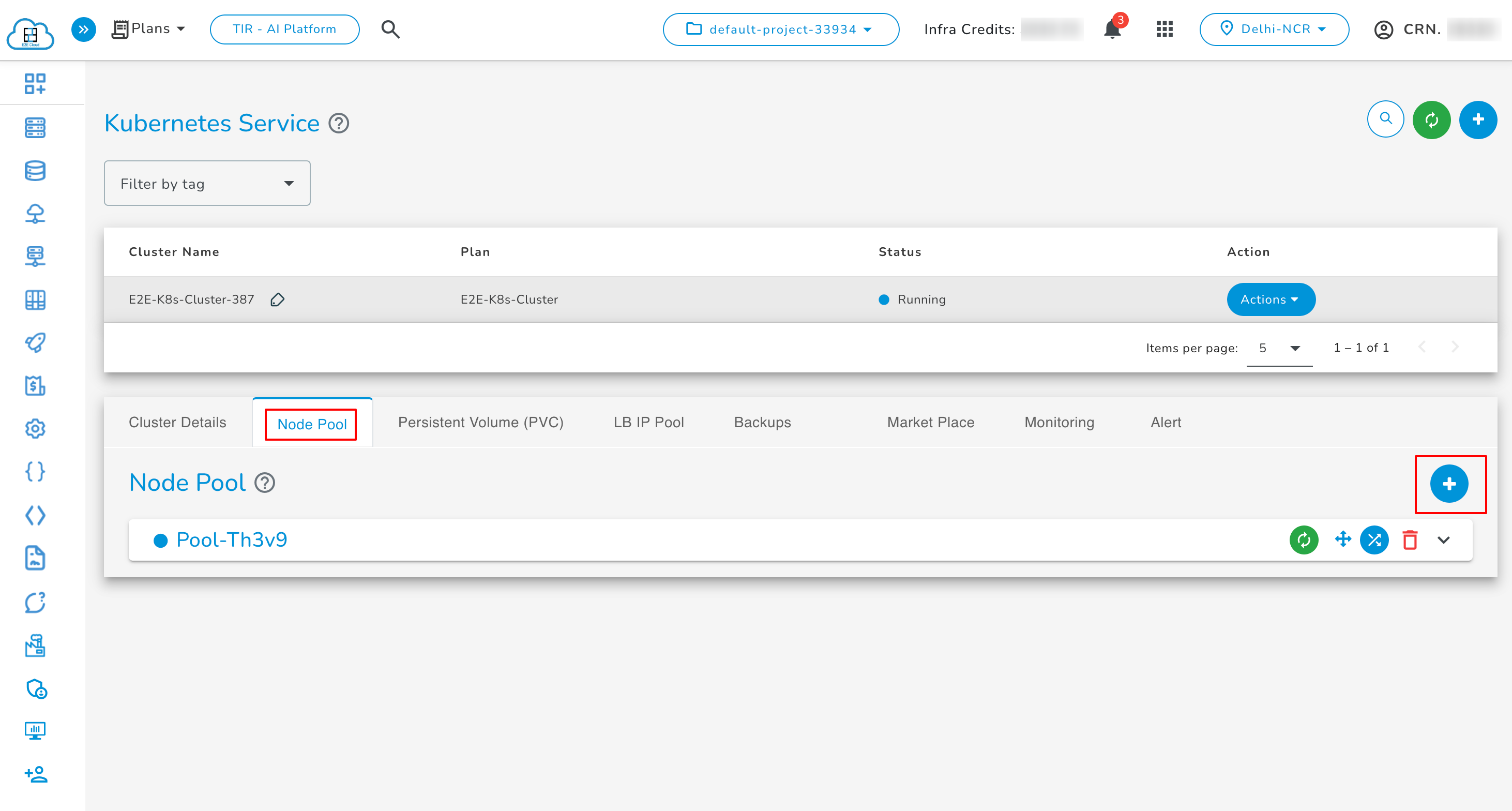

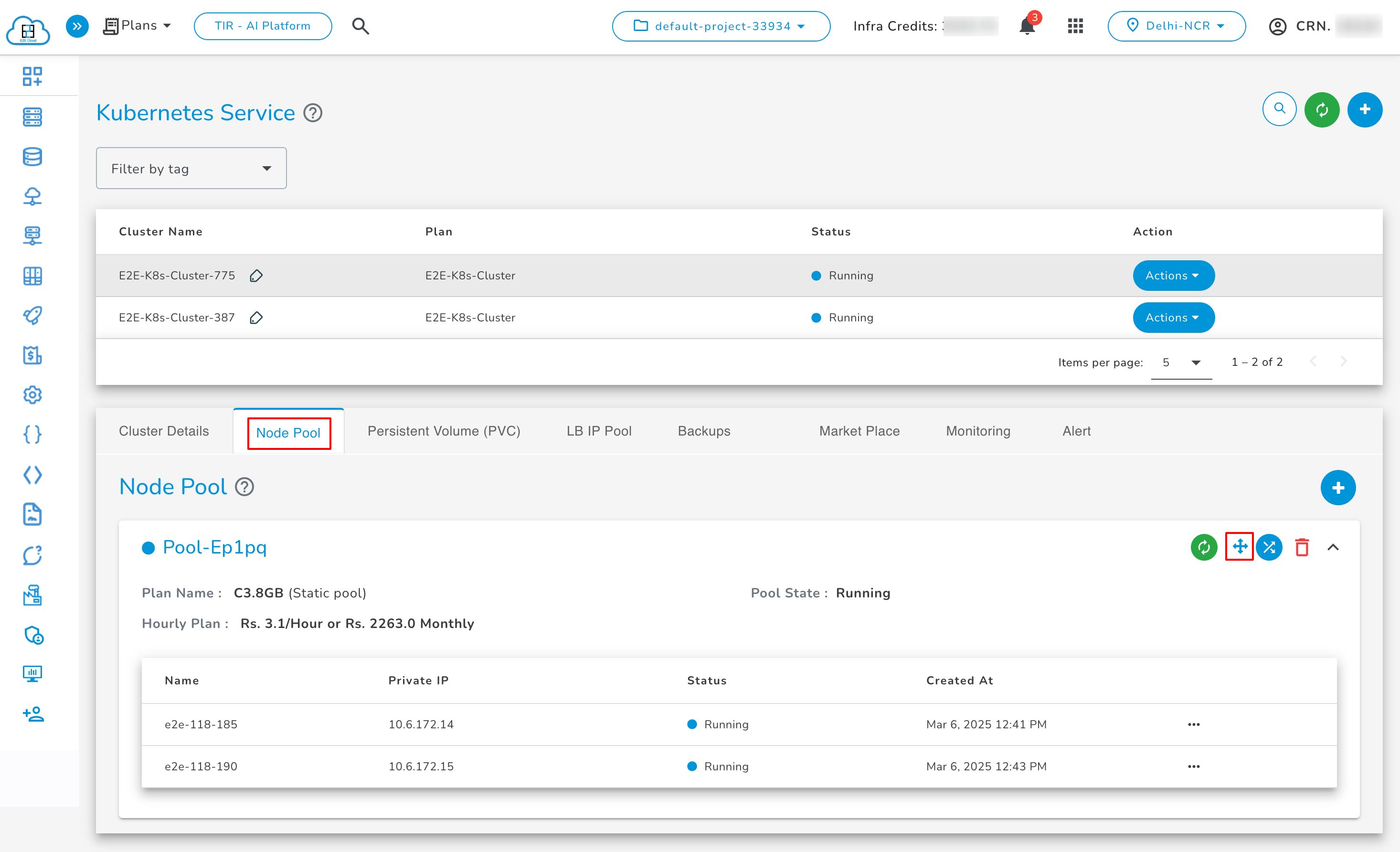

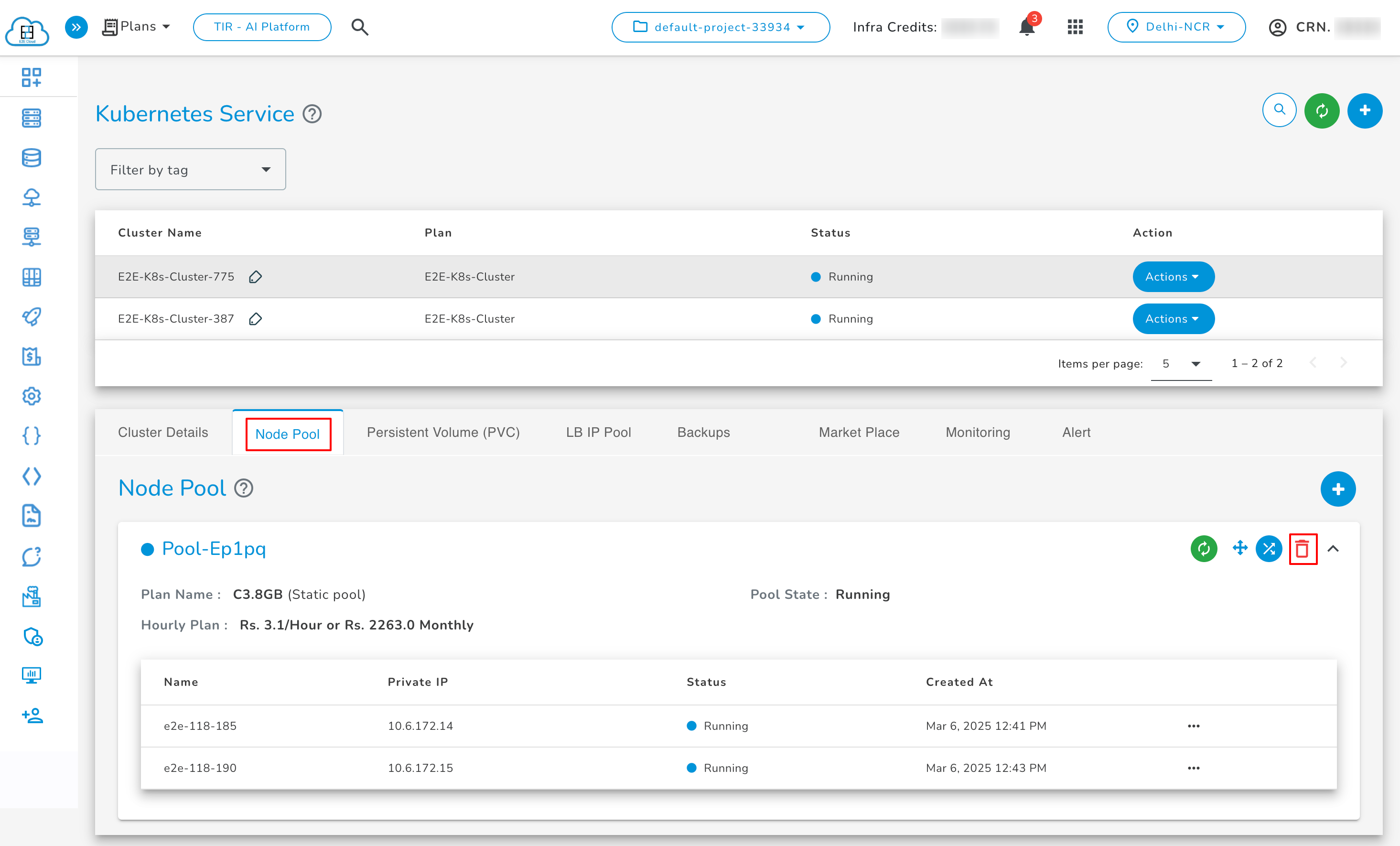

Node Pool

The Node Pool Details tab provides information about the Worker nodes. Users can also add, edit, resize, power on/off, reboot, and delete the worker nodes.

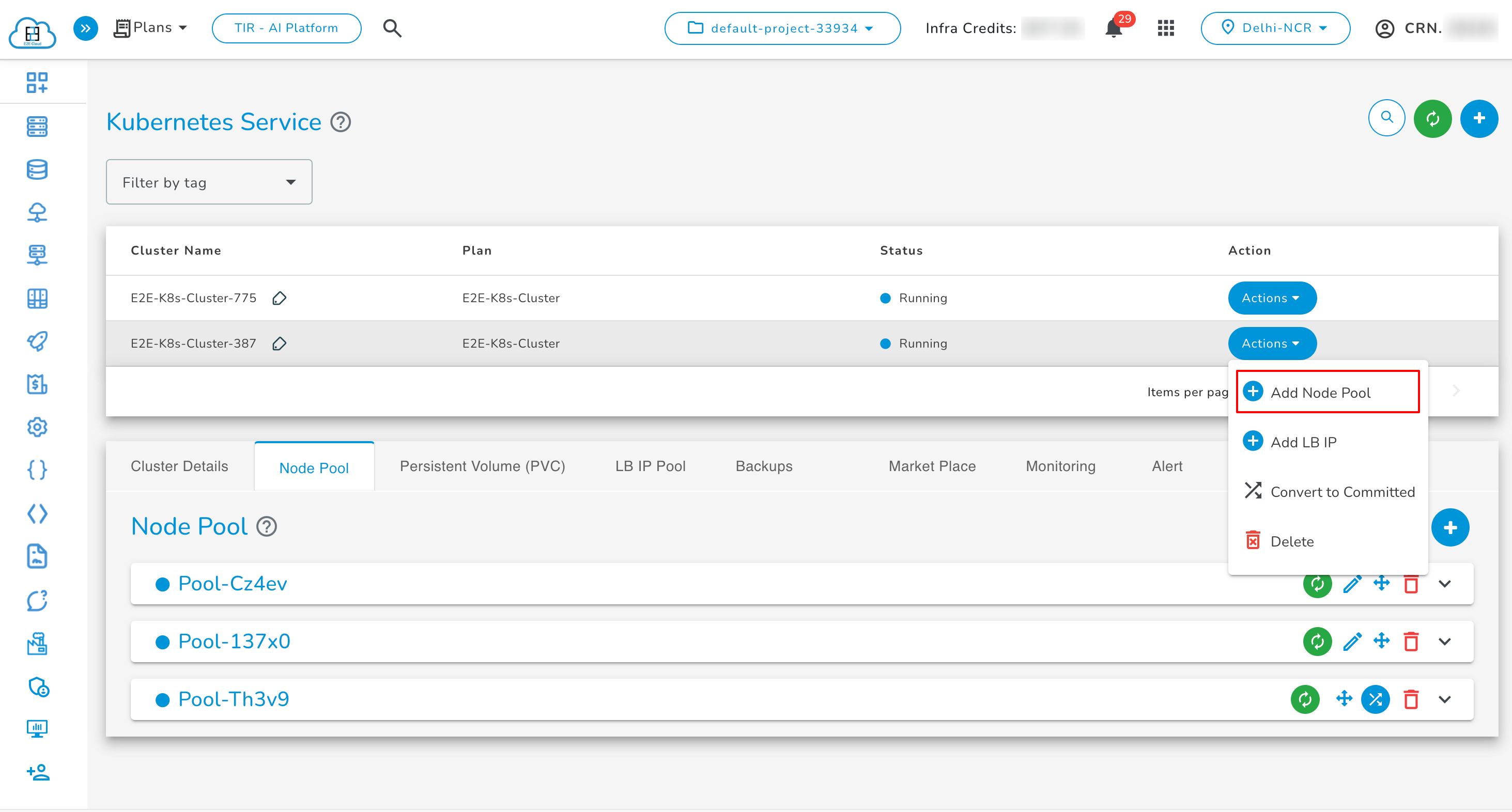

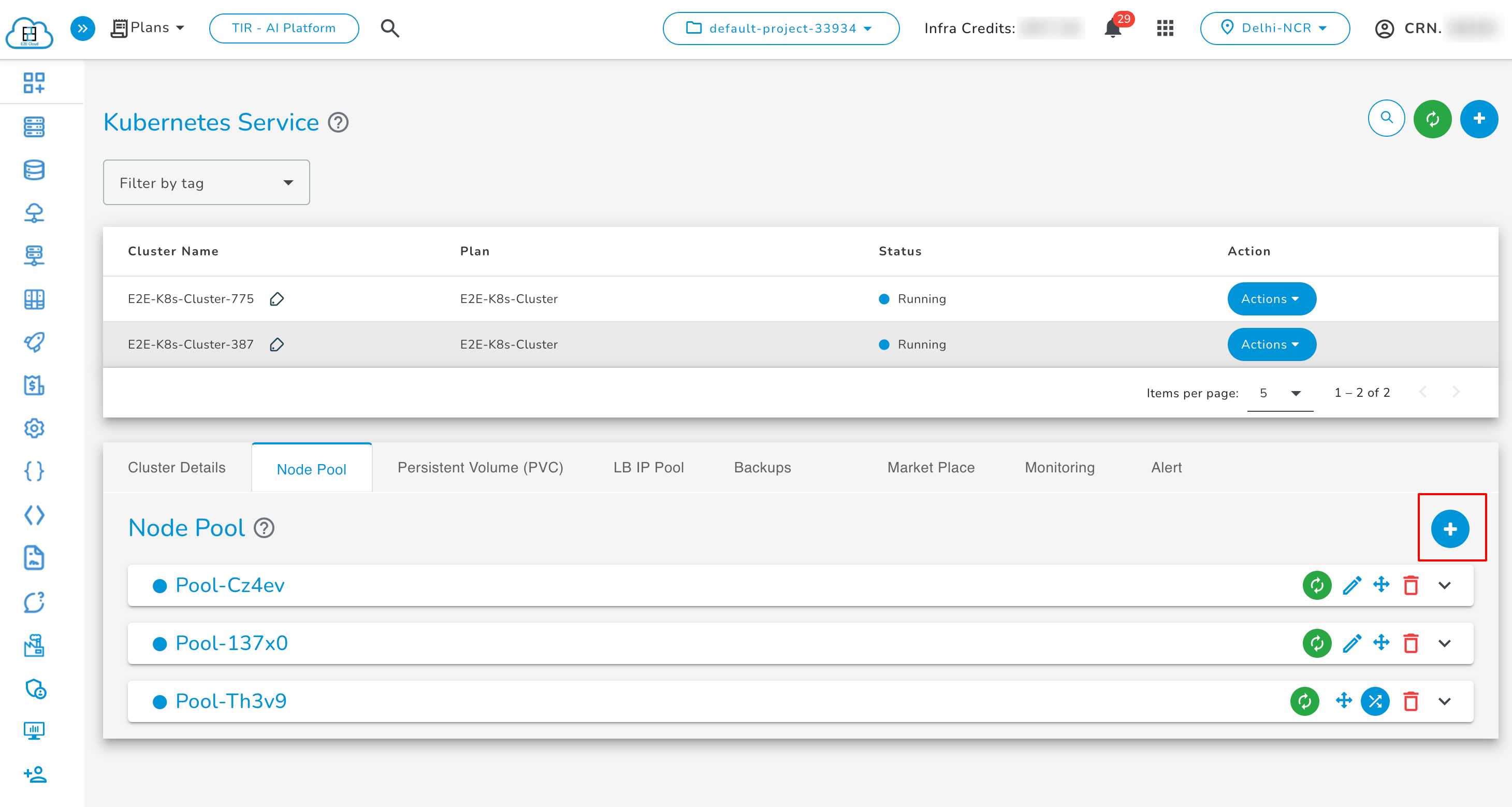

To Add Node Pool

To add a node pool, click on the Add node icon.

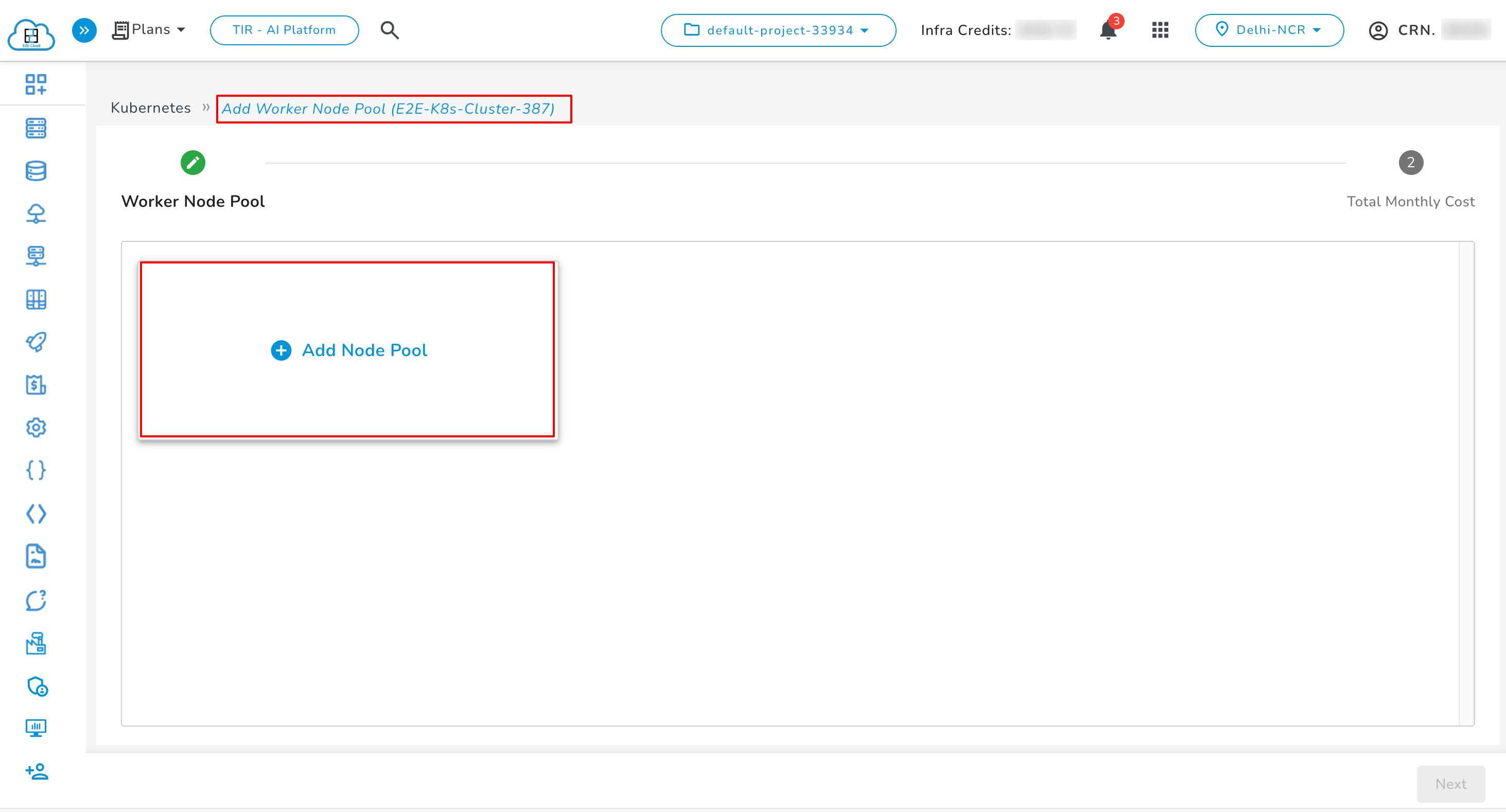

After clicking on the icon, you can add a node pool by clicking the "Add node pool" button.

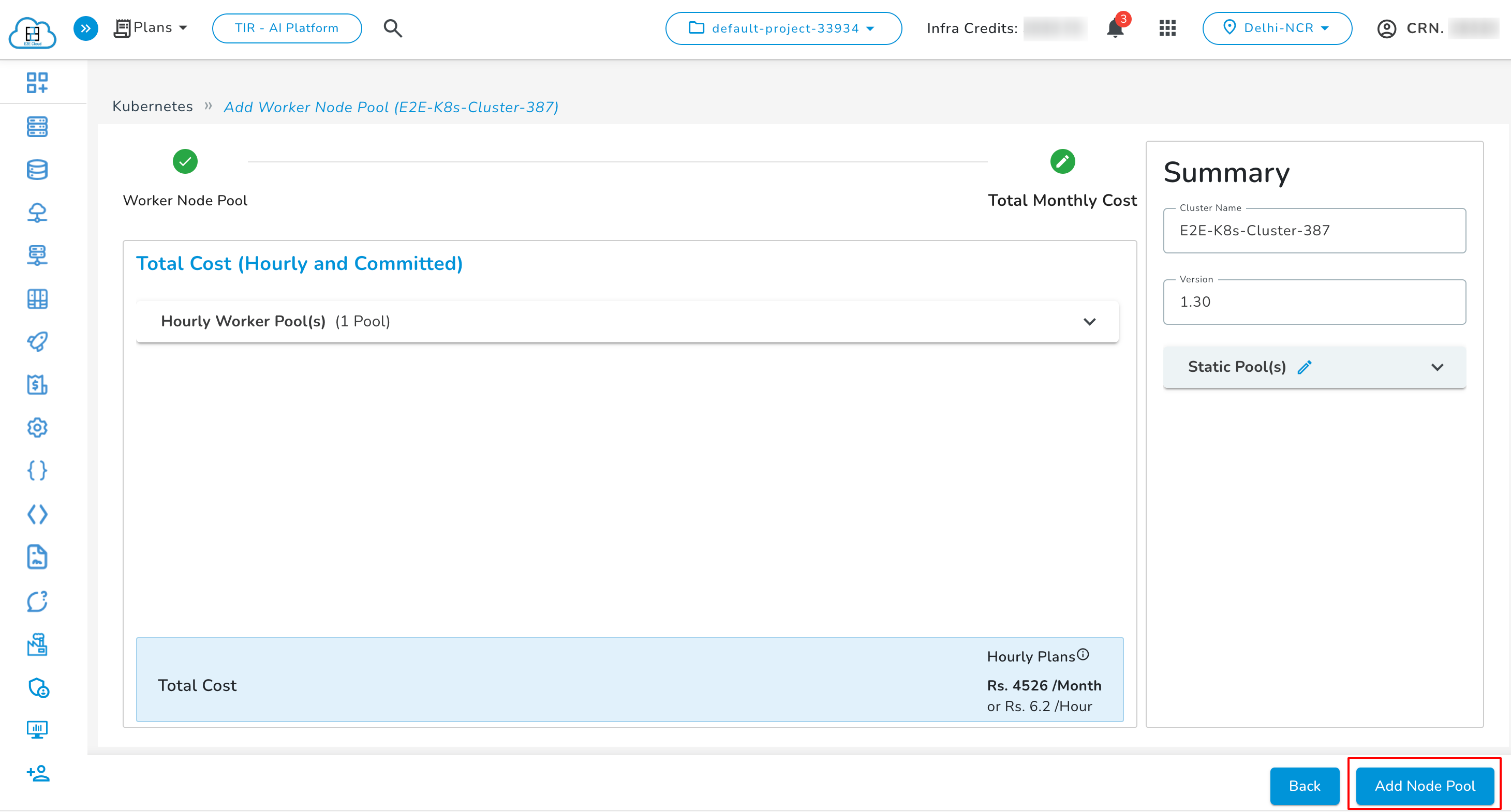

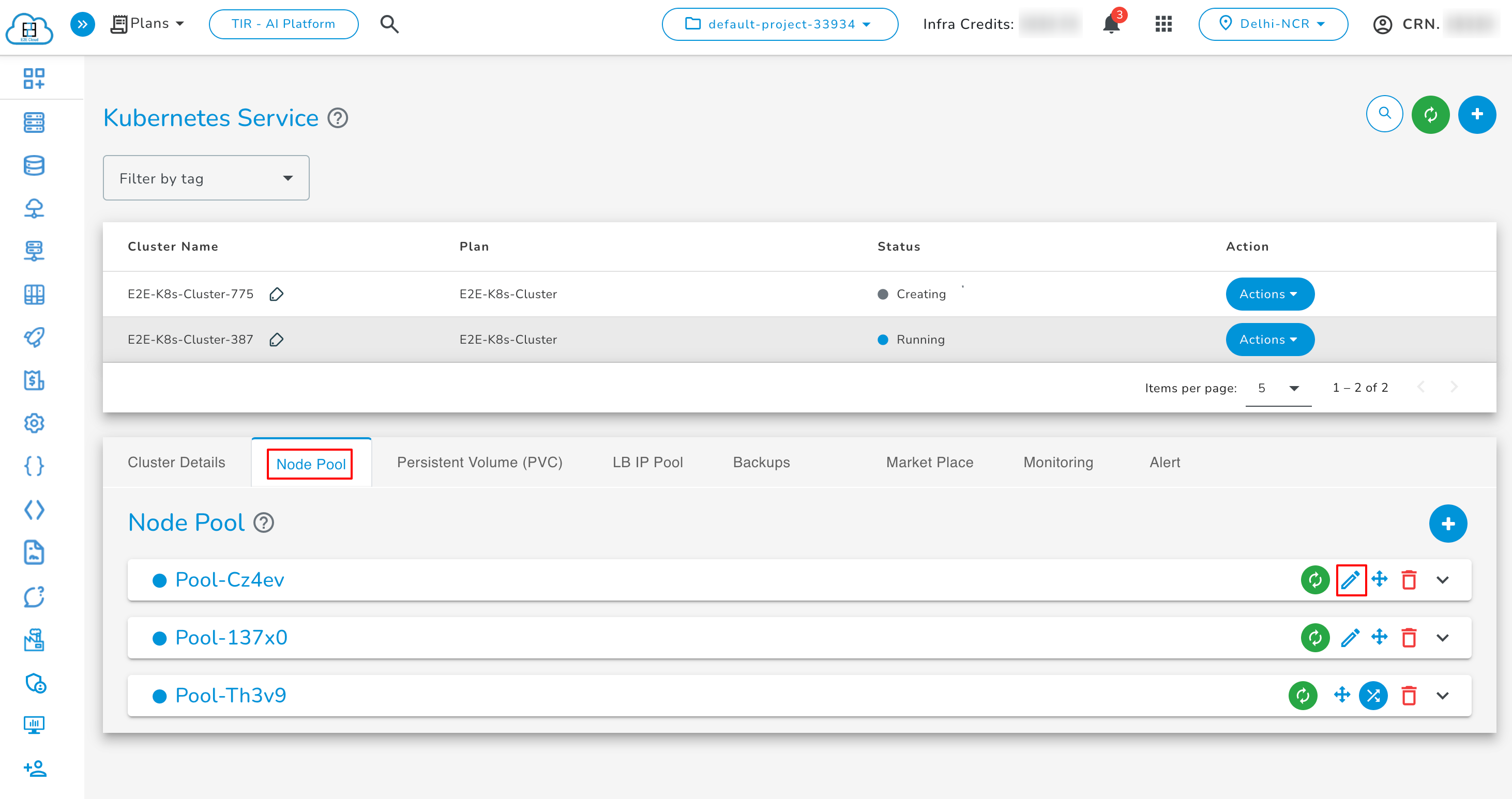

After you add a new node pool, it appears at the top of the Node Pools list, so the most recently added pool is the easiest to find. The "Add Node Pool" button is at the bottom of the list.

To Edit Node Pool

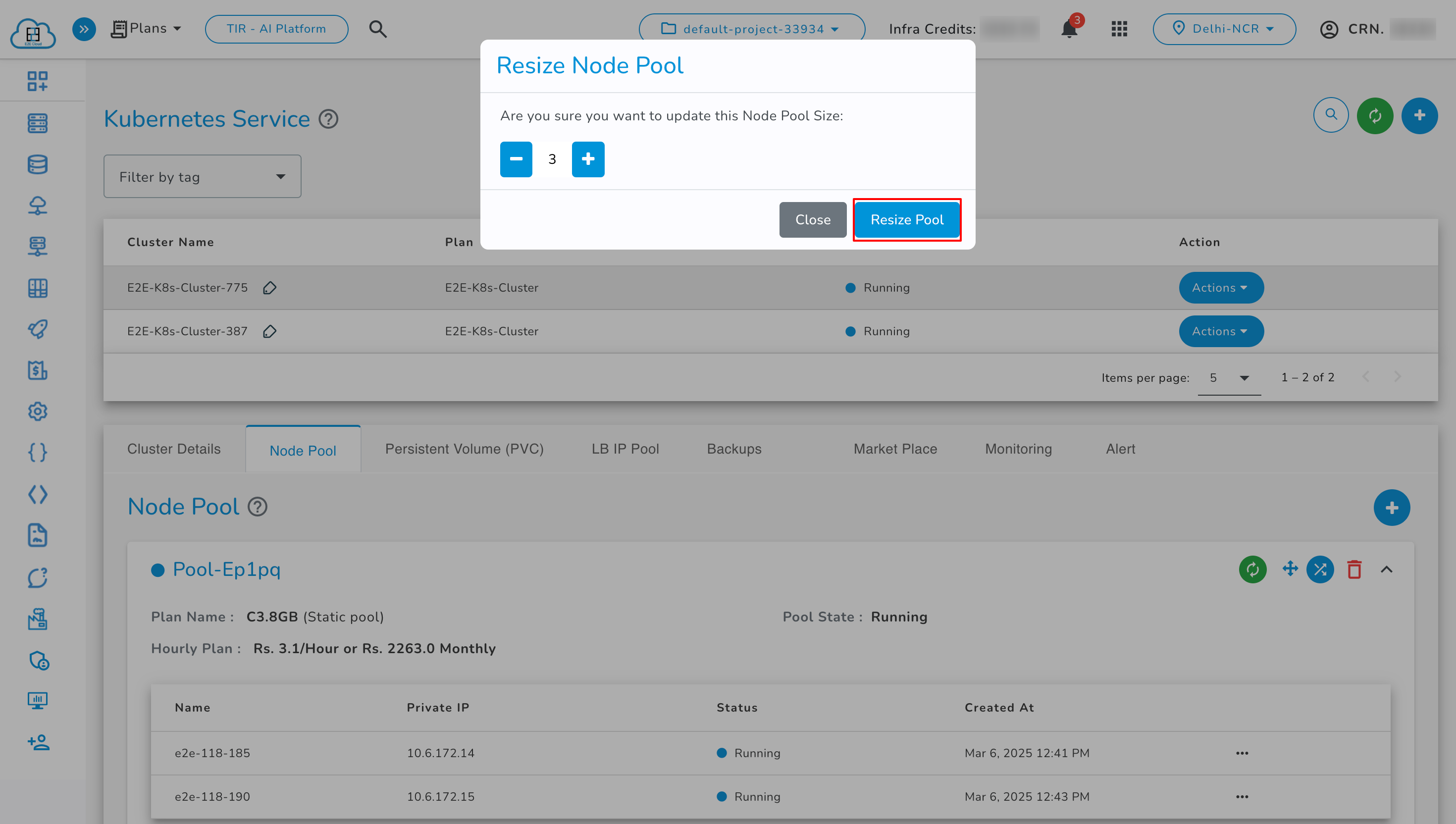

To Resize Node Pool

You can also resize a worker node pool by clicking the Resize button.

To Delete Node Pool

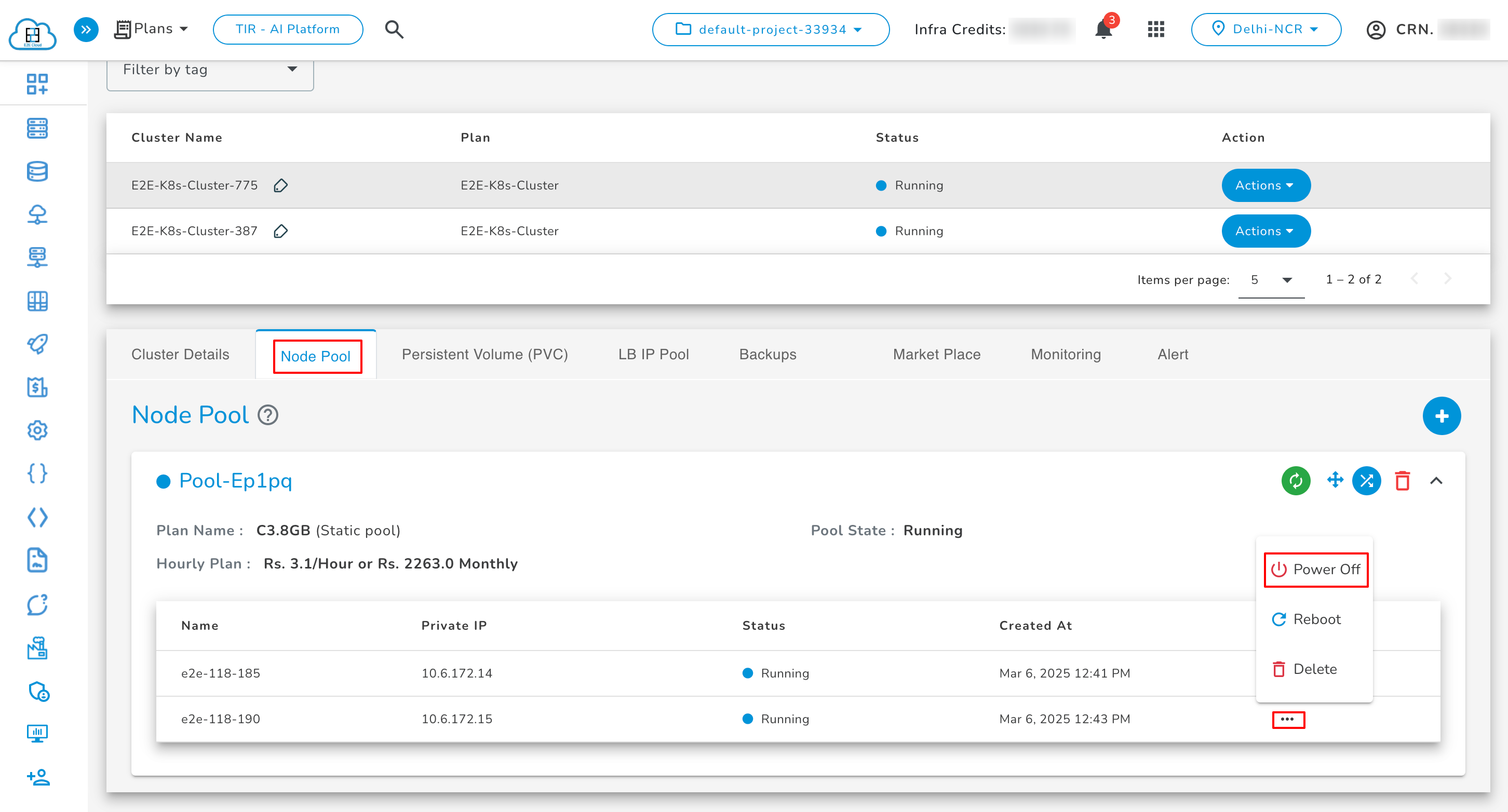

To Power Off a Worker Node

When a user clicks the three dots icon associated with a worker node, they will find a list of available actions. Simply select the 'Power Off' option from this list to power off the node.

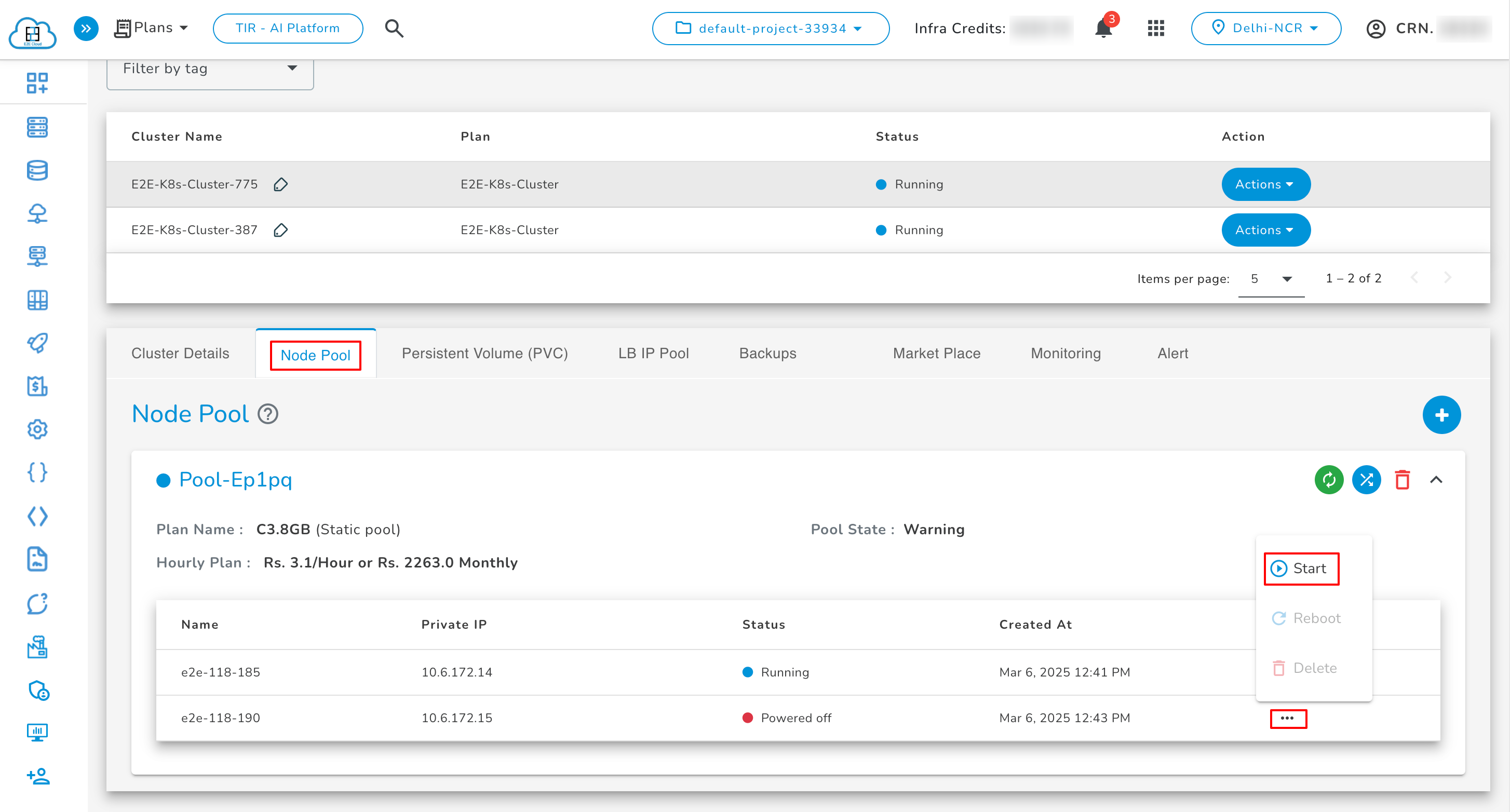

To Power On a Worker Node

To bring the powered-off node back online, locate the three dots icon associated with it. You'll notice that only the "start" action is available, while the "reboot" and "delete" actions are unavailable. Simply select "start" to bring the node to a running state.

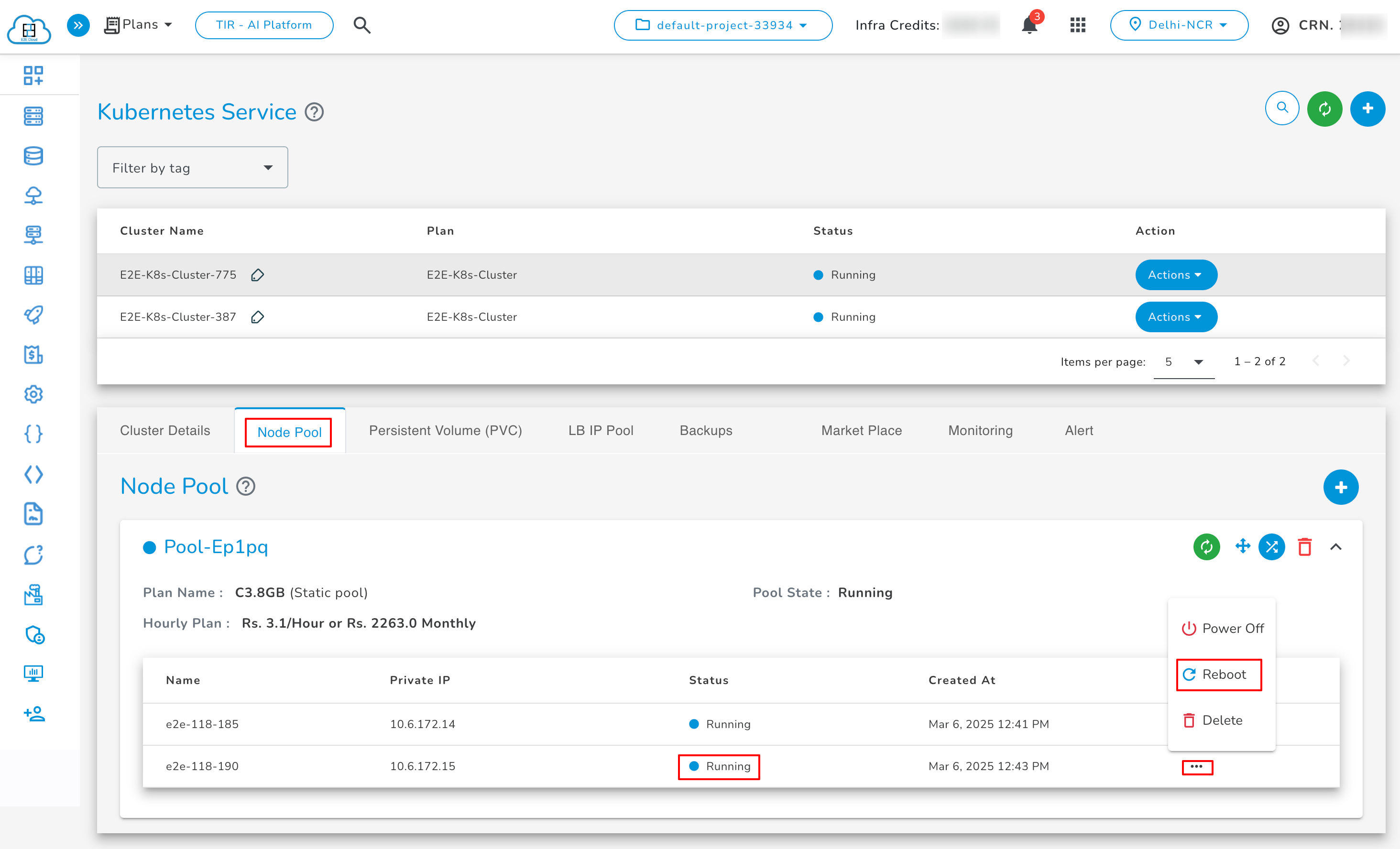

To Reboot a Worker Node

To reboot a running node, locate the three dots icon associated with the node. Then, select the "Reboot" option from the menu that appears. This will initiate the reboot process for the node.
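Powering off or rebooting a node from MyAccount interrupts whatever is running on it. A common Kubernetes practice, sketched here as a suggestion rather than an E2E requirement, is to drain the node first with kubectl drain and uncordon it once it is running again. The --delete-emptydir-data flag needs kubectl 1.20 or later (older releases call it --delete-local-data); the function names and the KUBECTL override are illustrative.

```shell
# Sketch: prepare a worker node for reboot or power-off, then restore it.
# KUBECTL defaults to kubectl; set KUBECTL=echo to preview the commands.
prepare_node_for_maintenance() {
  local node=${1:?usage: prepare_node_for_maintenance <node-name>}
  # Cordon the node and evict its pods. DaemonSet pods are skipped, and
  # pods using emptyDir volumes lose that scratch data.
  ${KUBECTL:-kubectl} drain "$node" --ignore-daemonsets --delete-emptydir-data
}

finish_node_maintenance() {
  local node=${1:?usage: finish_node_maintenance <node-name>}
  # Allow new pods to be scheduled on the node again.
  ${KUBECTL:-kubectl} uncordon "$node"
}
```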

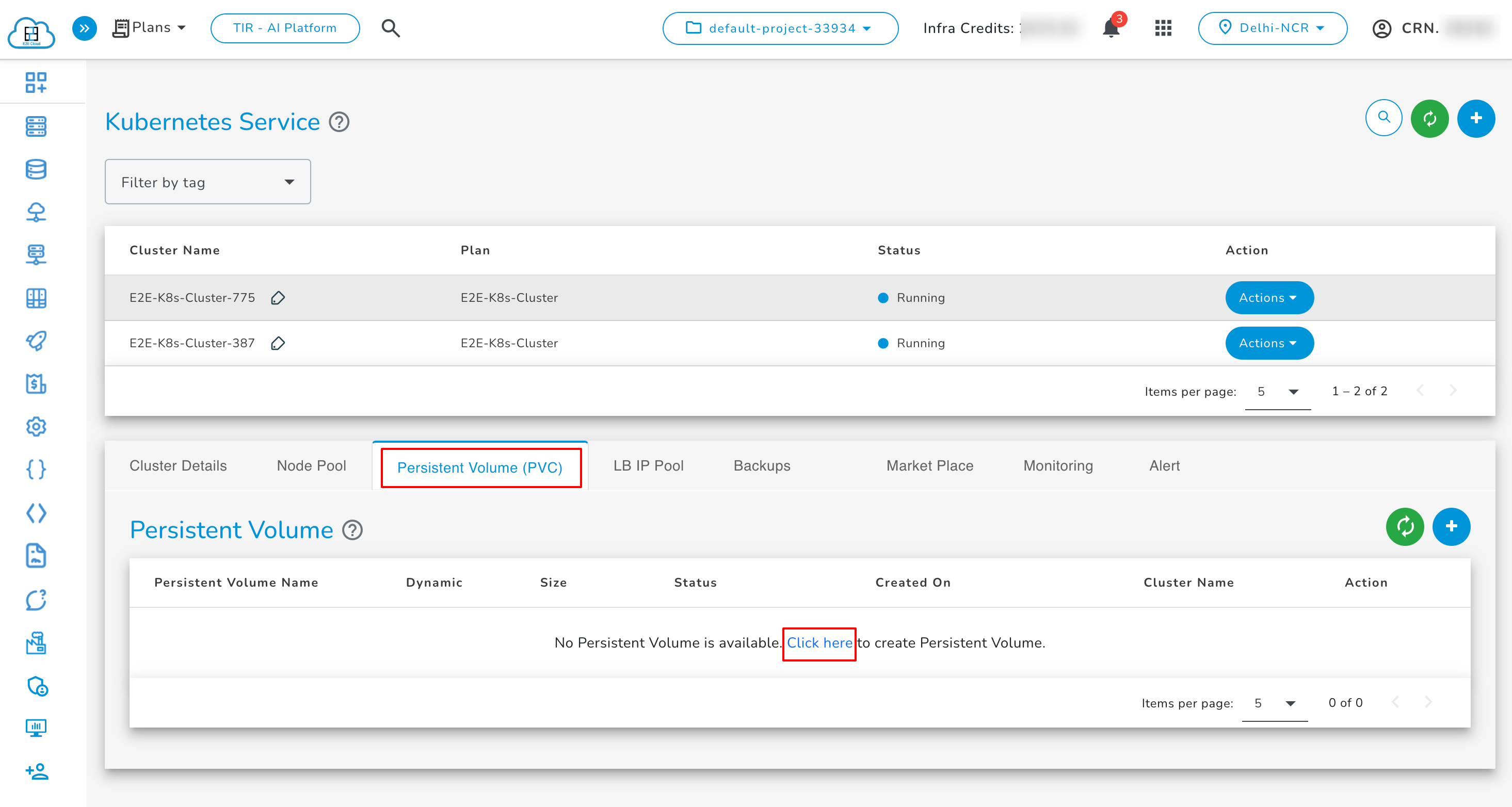

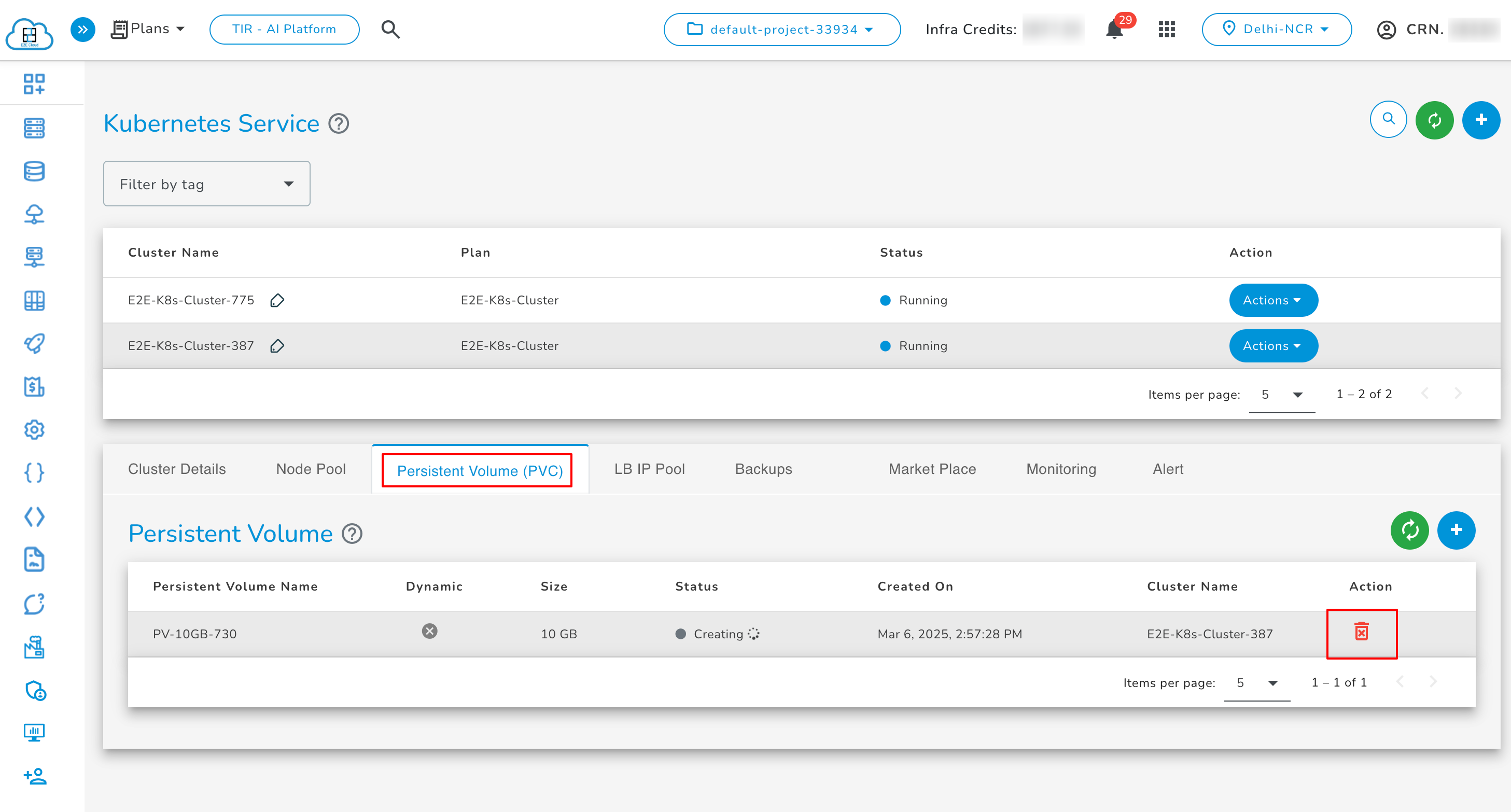

Persistent Volume (PVC)

To check PVC, run the command shown below.

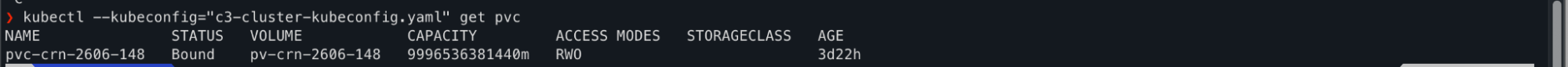

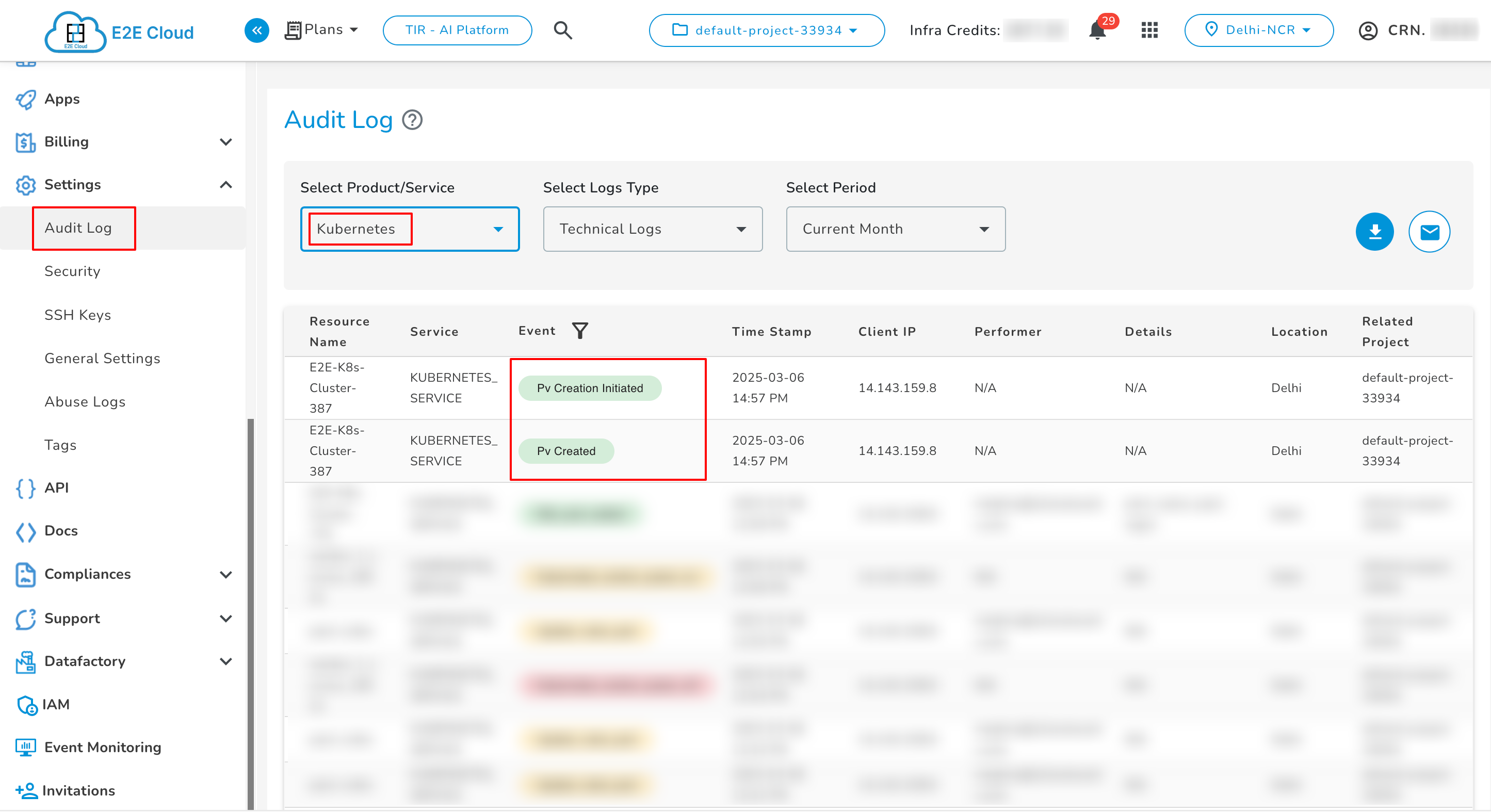

Create Persistent Volume

In the top right section of the Manage Persistent Volume page, click the “Add persistent volume” button. This opens the Add persistent volume page, where you click the Create button.

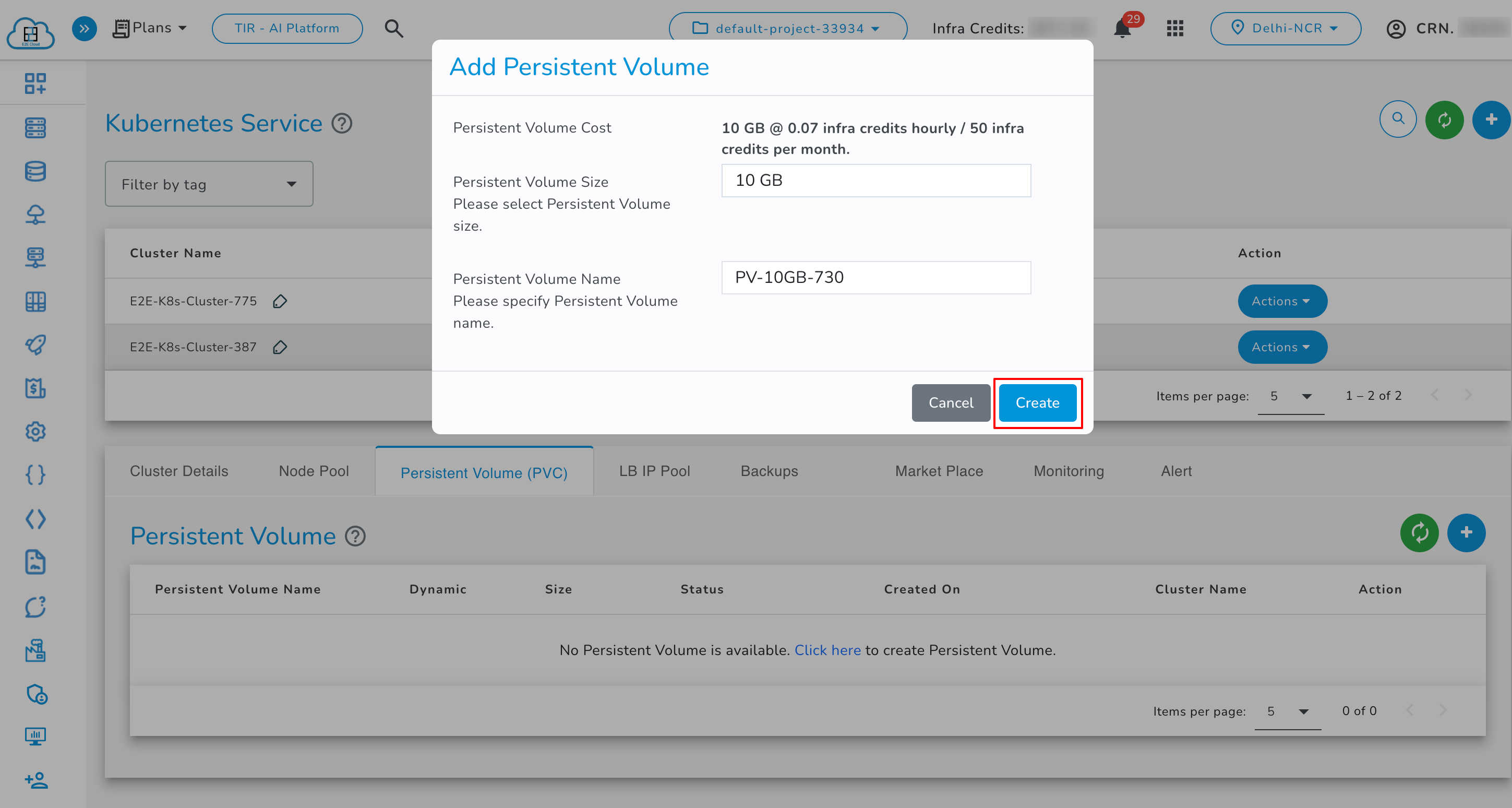

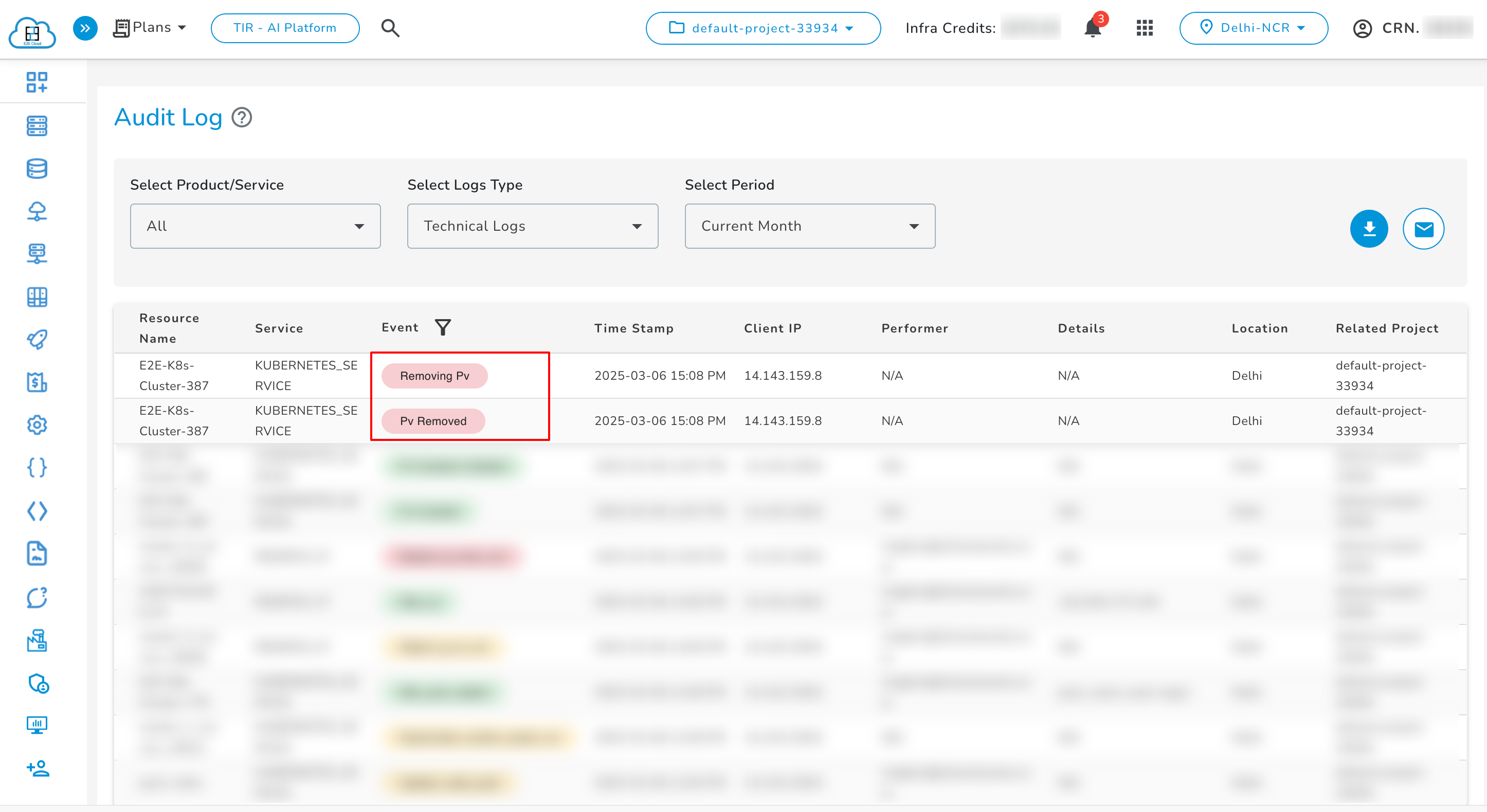

You can check the audit logs for details about the creation of the persistent volume.

Delete Persistent Volume

To delete your persistent volume, click on the delete option. Please note that once you have deleted your persistent volume, you will not be able to recover your data.

You can check the audit logs for details about the deletion of the persistent volume.

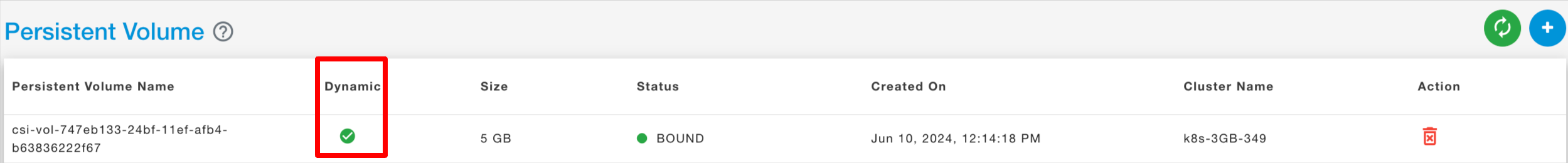

Dynamic Persistent Volume (PVC)

Note: Sizes shown in the UI and on the bill are in GB (10^9 bytes), whereas Kubernetes uses GiB (2^30 bytes) when it creates the volume. You cannot decrease the size of an existing volume, because the Kubernetes API does not allow it.
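The GB/GiB difference is easy to quantify: 1 GB is 10^9 bytes and 1 GiB is 2^30 (1,073,741,824) bytes, so 1 GiB is about 1.074 GB. The helpers below are a small awk-based sketch of the conversion in both directions, rounded to two decimals; the function names are illustrative.

```shell
# Convert between GiB (2^30 bytes, used by Kubernetes) and GB (10^9 bytes).
gib_to_gb() { awk -v g="$1" 'BEGIN { printf "%.2f\n", g * 1073741824 / 1000000000 }'; }
gb_to_gib() { awk -v g="$1" 'BEGIN { printf "%.2f\n", g * 1000000000 / 1073741824 }'; }
```

For example, gib_to_gb 4 prints 4.29, so a 4Gi claim is roughly 4.29 GB, and gb_to_gib 100 prints 93.13.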

Dynamic volume provisioning allows storage volumes to be created on-demand.

Note: To create a dynamic PersistentVolumeClaim, at least one PersistentVolume should be created in your Kubernetes cluster from the MyAccount Kubernetes PersistentVolume section.

Create a Storage Class

To enable dynamic provisioning, a cluster administrator needs to pre-create one or more StorageClass objects for users. StorageClass objects define which provisioner should be used and what parameters should be passed to that provisioner when dynamic provisioning is invoked.

The following manifest creates a storage class "csi-rbd-sc" that provisions Ceph RBD block volumes through the rbd.csi.ceph.com CSI driver.

```shell
# Write the StorageClass manifest to csi-rbd-sc.yaml
cat <<EOF > csi-rbd-sc.yaml
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: 4335747c-13e4-11ed-a27e-b49691c56840
  pool: k8spv
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:
  - discard
EOF

# Create the StorageClass in the cluster
kubectl apply -f csi-rbd-sc.yaml
```

Create a Persistent Volume Claim

Users request dynamically provisioned storage by including a storage class in their PersistentVolumeClaim. For example, to select the "csi-rbd-sc" storage class, a user would create the following PersistentVolumeClaim:

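```shell
# Write a 4Gi claim that uses the csi-rbd-sc storage class
cat << EOF > pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 4Gi
  storageClassName: csi-rbd-sc
EOF

# Create the claim; a matching volume is provisioned automatically
kubectl apply -f pvc.yaml
```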

This claim causes a persistent volume to be provisioned automatically from the csi-rbd-sc storage class. Because the storage class sets reclaimPolicy: Delete, the volume is destroyed when the claim is deleted.
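To use the claim, reference it from a Pod's volumes. This is a minimal sketch: the Pod name pvc-demo, the nginx:stable image, and the mount path are illustrative choices. Because the storage class sets allowVolumeExpansion: true, the trailing comment shows one way to grow the claim later (growing only, since shrinking is not possible).

```shell
# Sketch: a Pod that mounts the rbd-pvc claim (names are illustrative).
cat <<EOF > pvc-demo-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pvc-demo
spec:
  containers:
    - name: app
      image: nginx:stable
      volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: rbd-pvc
EOF
# Apply with: kubectl apply -f pvc-demo-pod.yaml
# Later, to grow the claim (sizes can only increase):
#   kubectl patch pvc rbd-pvc -p '{"spec":{"resources":{"requests":{"storage":"8Gi"}}}}'
```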

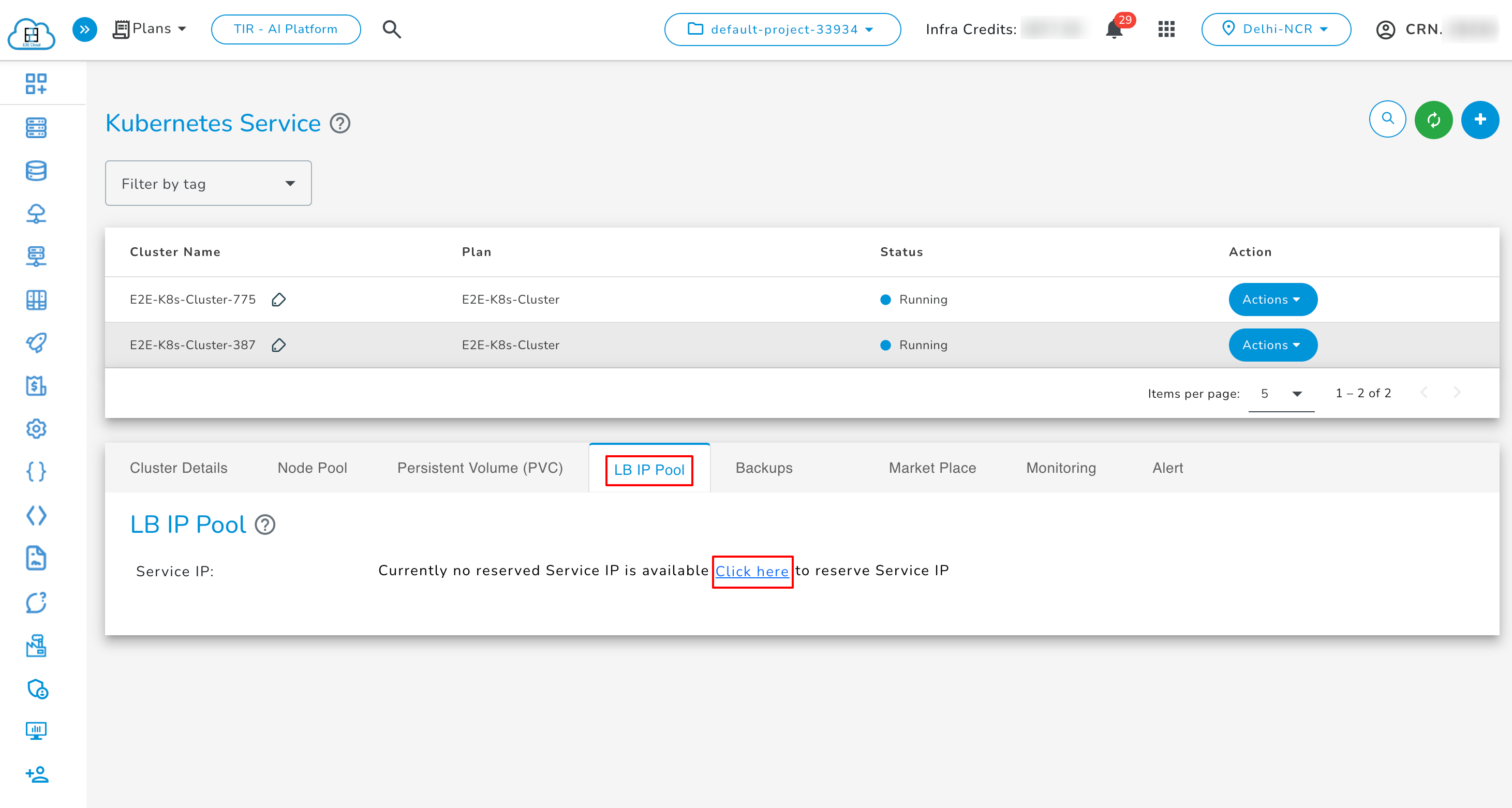

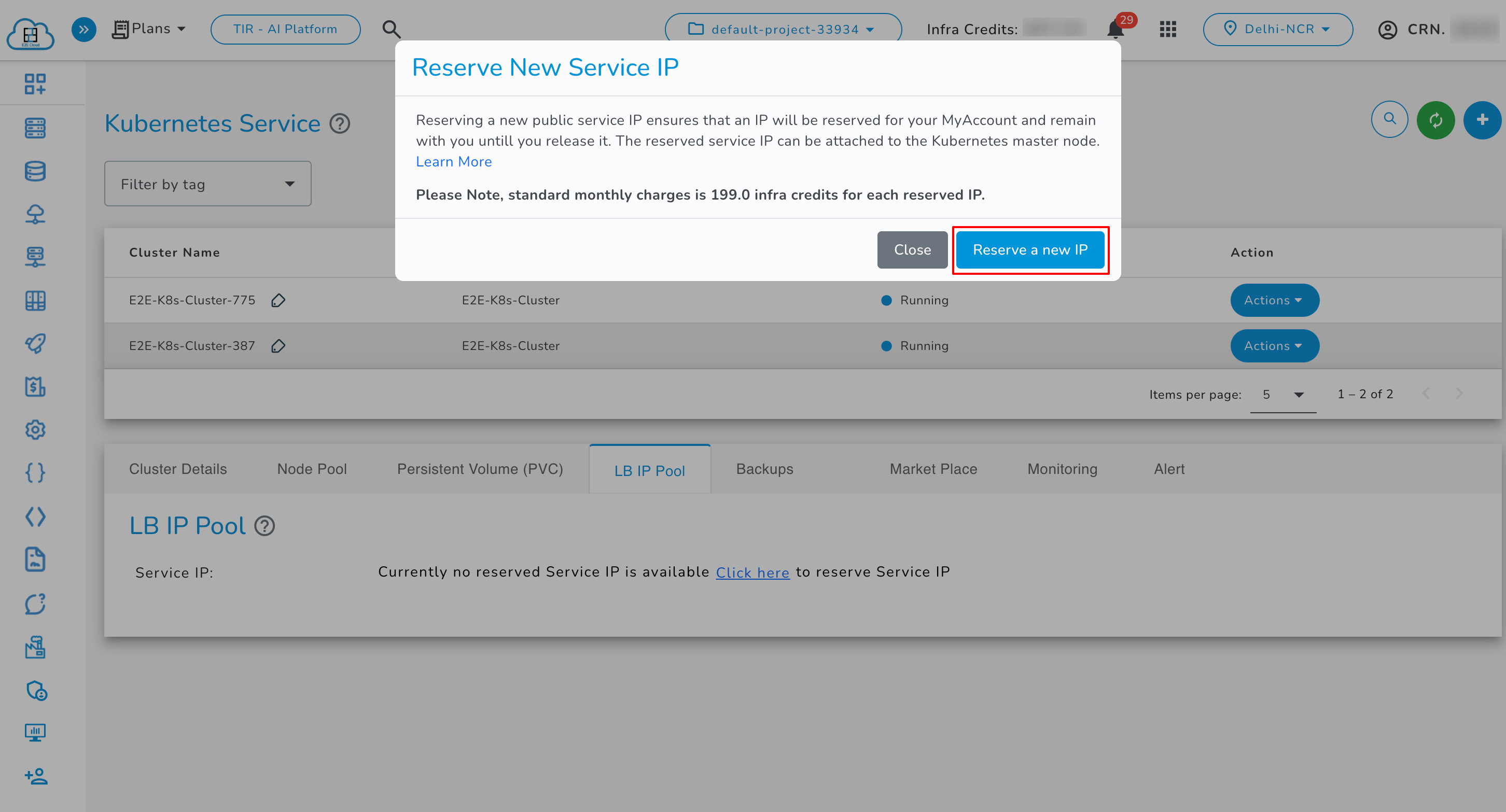

Public IPv4 Address

A public IP address is an IPv4 address that’s reachable from the Internet. You can use public addresses for communication between your Kubernetes cluster and the Internet. You can keep the default assigned public IP reserved for your account until you release it. To allocate public IP addresses to workloads in your cluster, use a Service of type LoadBalancer.

Private IPv4 Address

A private IPv4 address is an IP address that’s not reachable over the Internet. You can use private IPv4 addresses for communication between instances in the same VPC. When you launch an E2E Kubernetes cluster, we allocate a private IPv4 address to it.

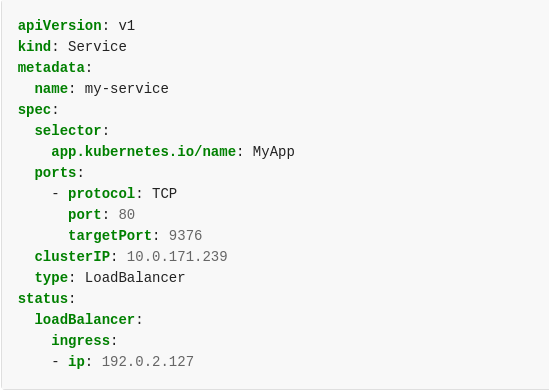

LoadBalancer

Our Kubernetes appliance natively integrates MetalLB, a load balancer for bare-metal Kubernetes clusters.

MetalLB hooks into your Kubernetes cluster and provides a network load-balancer implementation. It has two features that work together to provide this service: address allocation and external announcement.

With MetalLB, you can expose the service on a load-balanced IP (External/Service IP), which will float across the Kubernetes nodes in case some node fails. This differs from a simple External IP assignment.

As long as you ensure that the network traffic is routed to one of the Kubernetes nodes on this load-balanced IP, your service should be accessible from the outside.

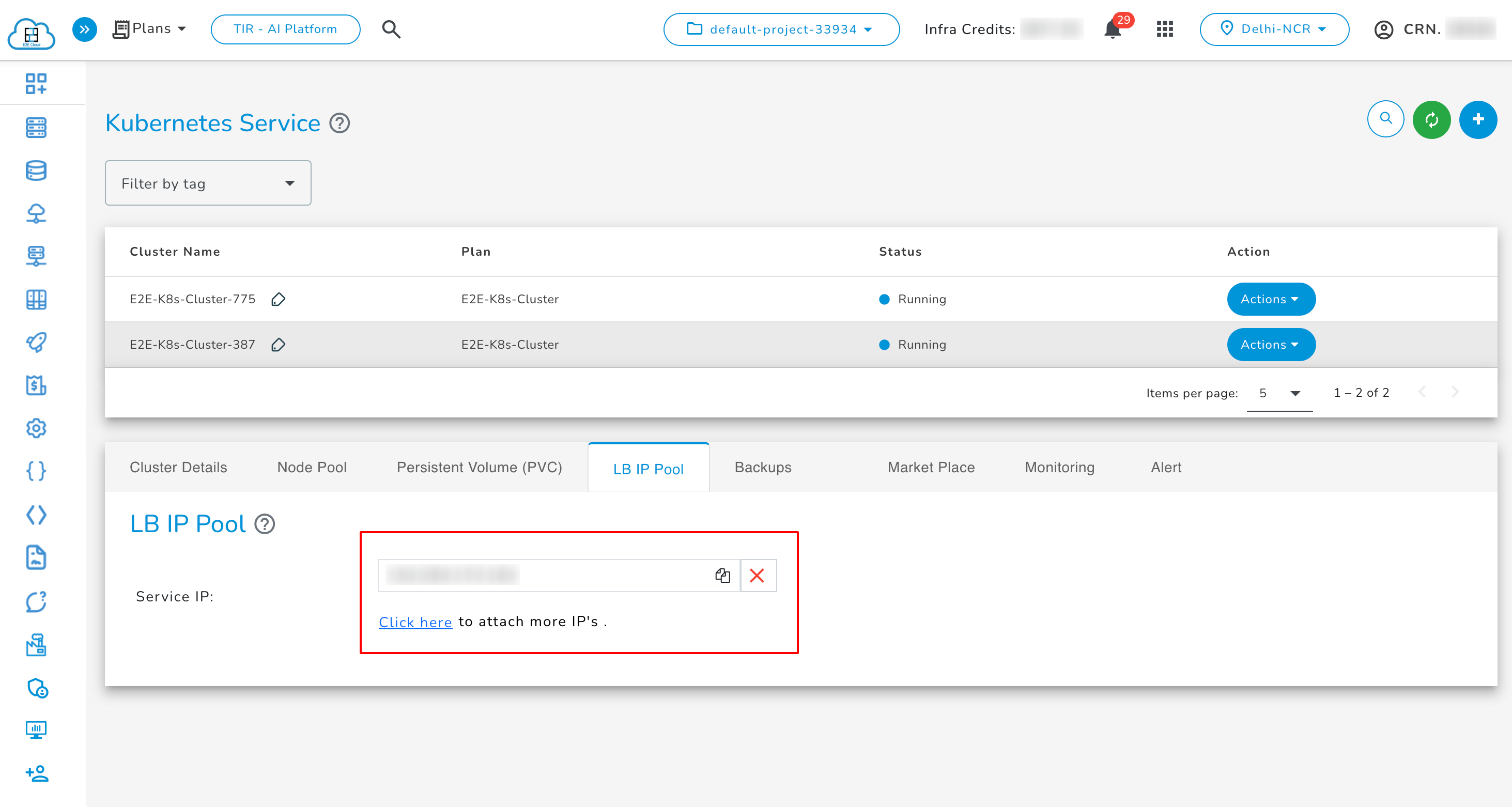

We provide pools of IP addresses that MetalLB will use for allocation. Users have the option to configure Service IP.

With Service IP, customers can reserve IPs that are then used to configure pools of IP addresses automatically in the Kubernetes cluster. MetalLB handles assigning and unassigning individual addresses as services come and go, but it only ever hands out IPs that are part of its configured pools.
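A Service of type LoadBalancer is how a workload asks MetalLB for an address from its pool. This is a minimal sketch: the Service name, the selector label app=node-hello, and targetPort 8080 are assumptions for illustration, so match them to your own Pods' labels and container port.

```shell
# Sketch: expose Pods labelled app=node-hello (hypothetical label) on port 80.
# MetalLB assigns the external IP from its configured pool.
cat <<EOF > node-hello-lb.yaml
apiVersion: v1
kind: Service
metadata:
  name: node-hello-lb
spec:
  type: LoadBalancer
  selector:
    app: node-hello
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
EOF
# kubectl apply -f node-hello-lb.yaml
# kubectl get service node-hello-lb   # EXTERNAL-IP shows the assigned address
```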

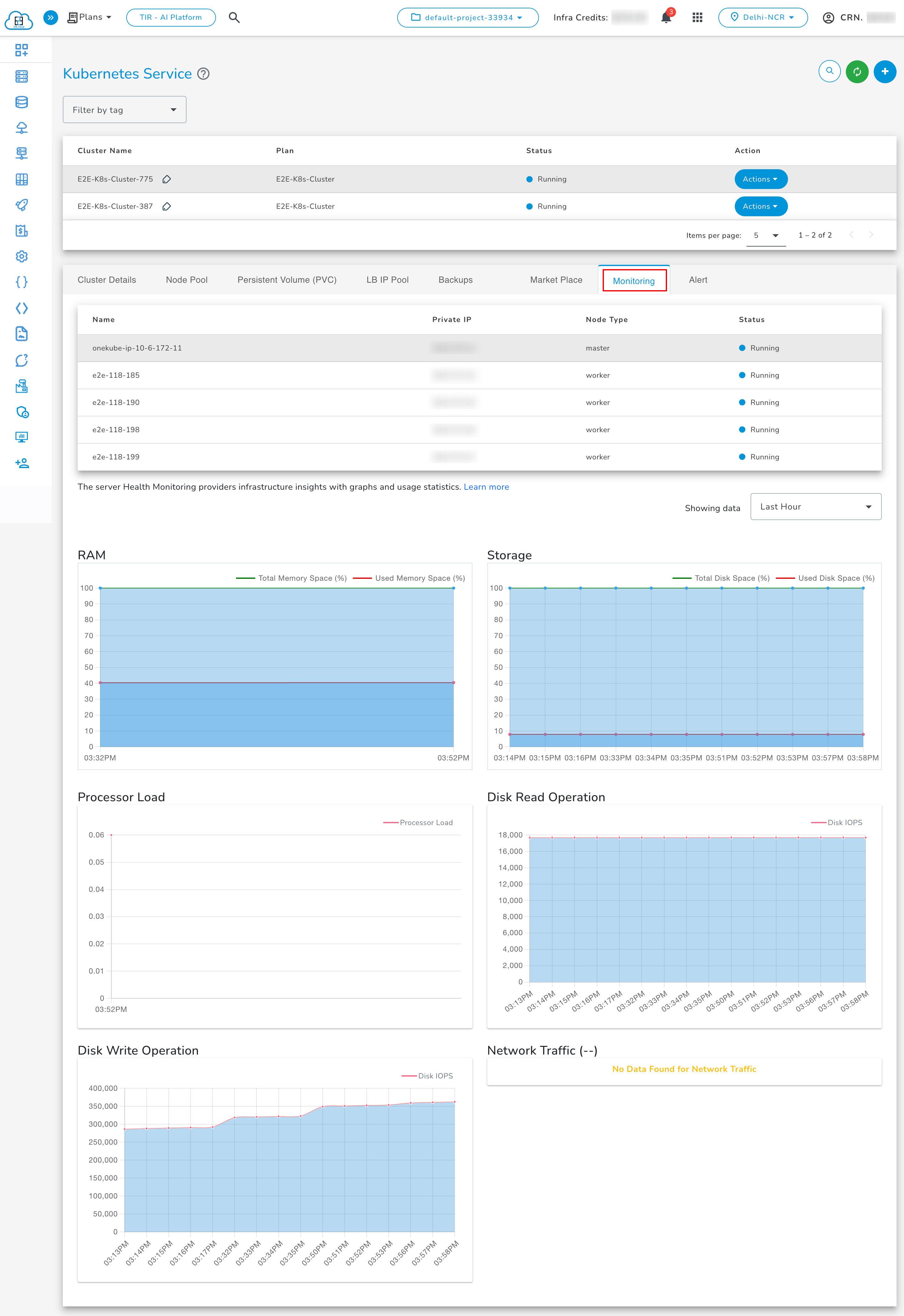

Monitoring Graphs

Monitoring of server health is a free service that provides insights into resource usage across your infrastructure. There are several different display metrics to help you track the operational health of your infrastructure. Select the worker node for which you want to check the metrics.

- Click on the ‘Monitoring’ tab to check the CPU Performance, Disk Read/Write operations, and Network Traffic Statistics.

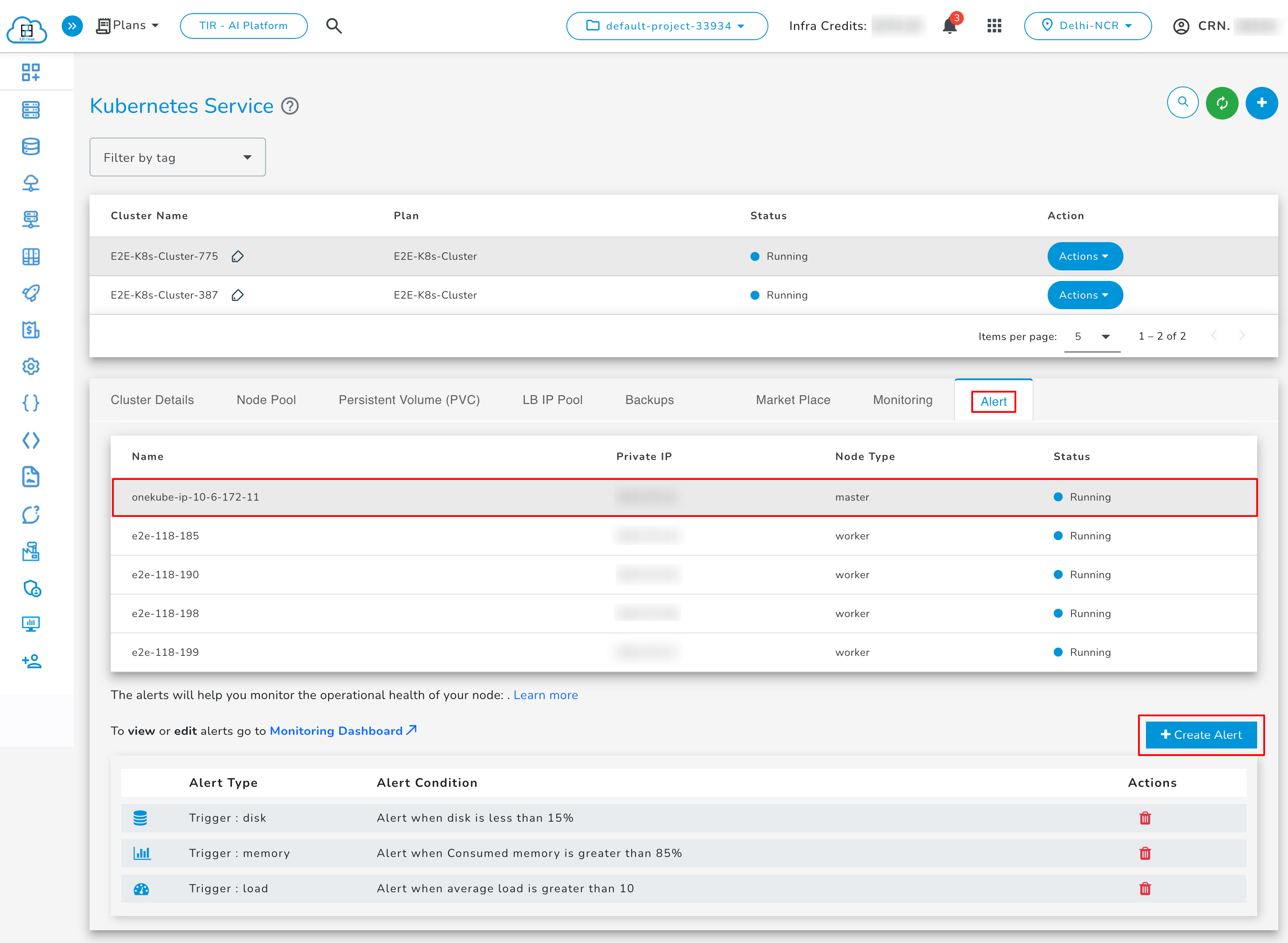

Alerts

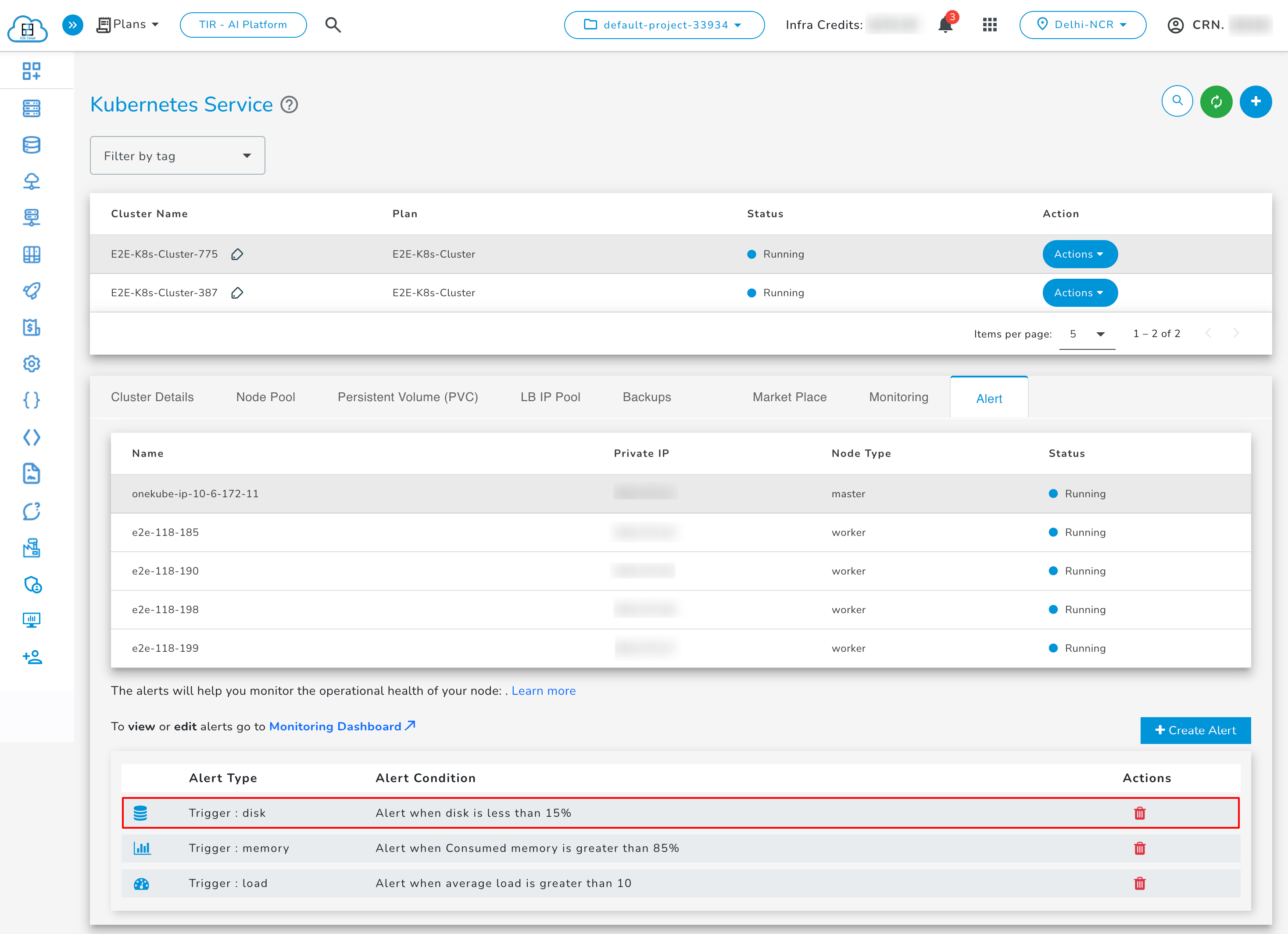

Setup Monitoring Alert for Worker Node

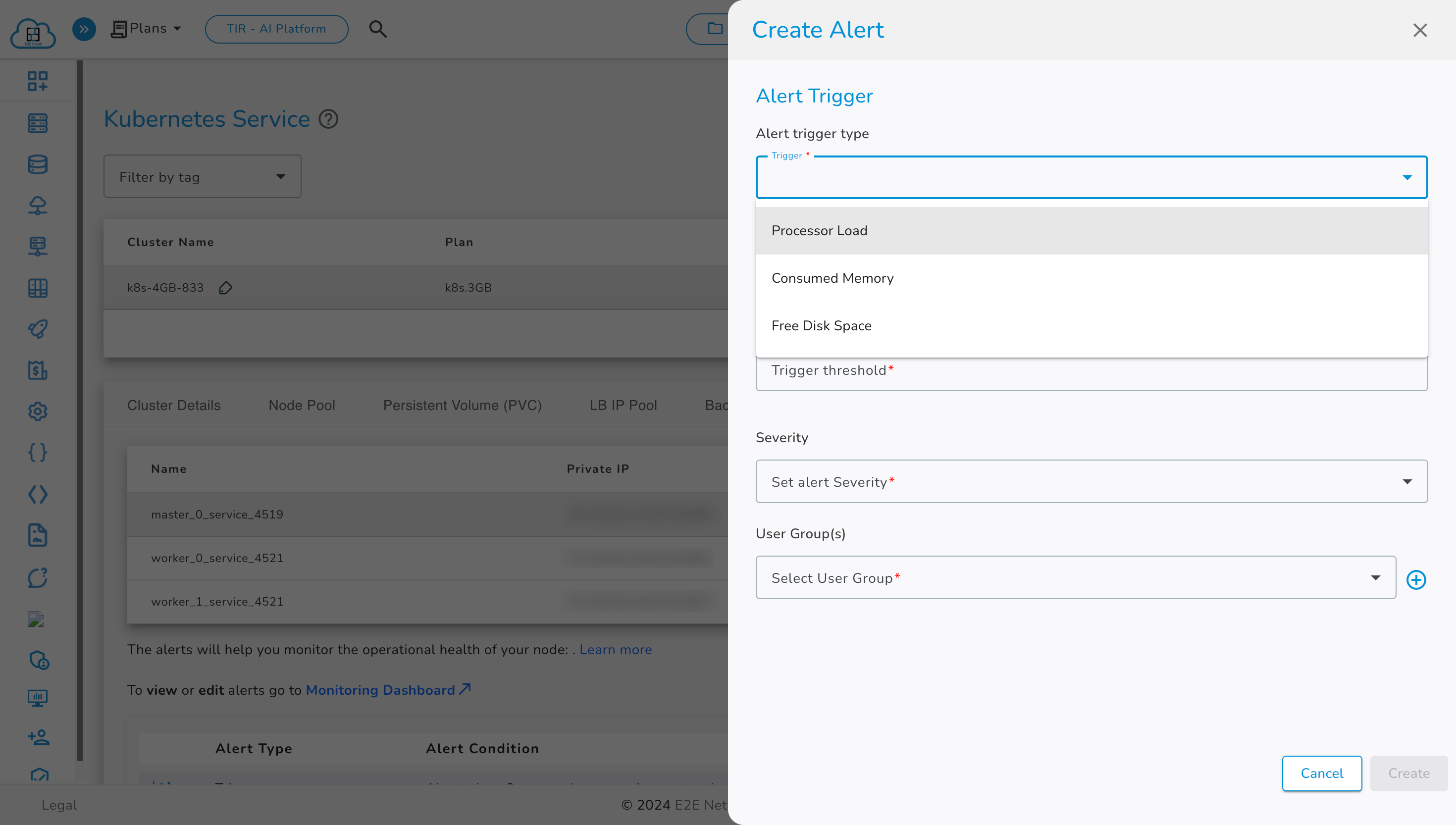

Go to the 'Alerts' tab, select the master or worker node for which you want to create an alert, and then click the 'Create Alert' button.

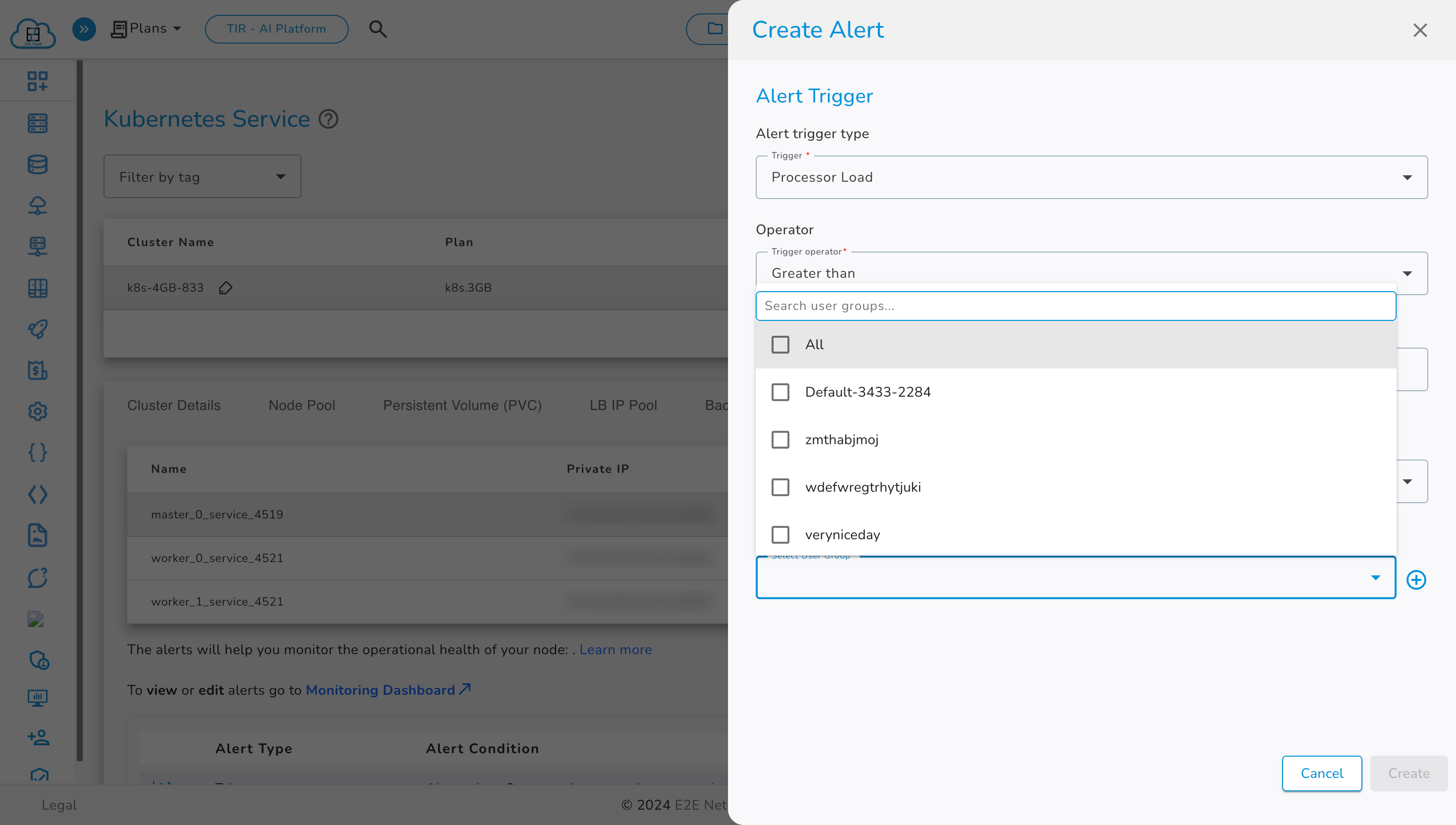

Now select Trigger Type.

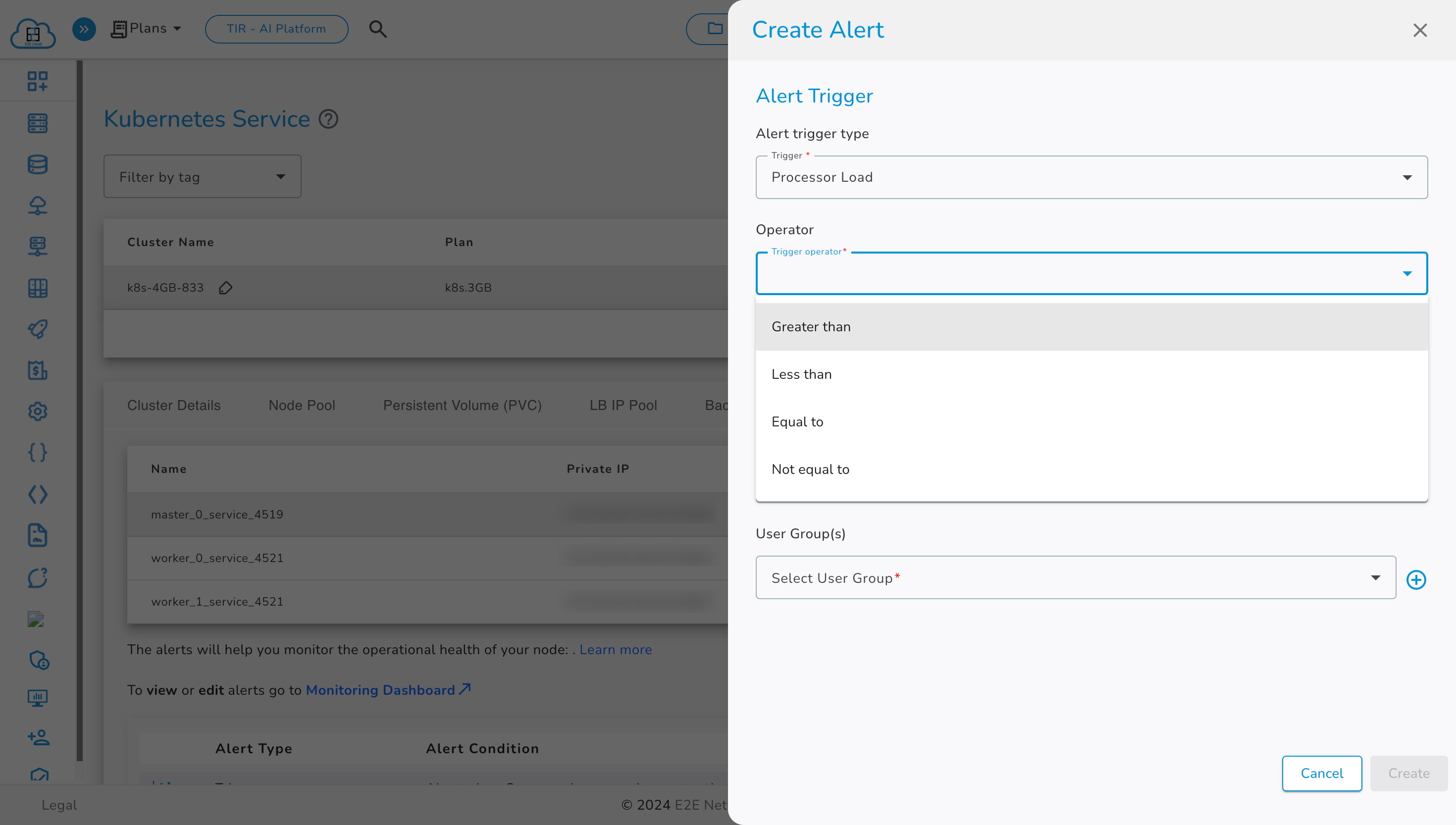

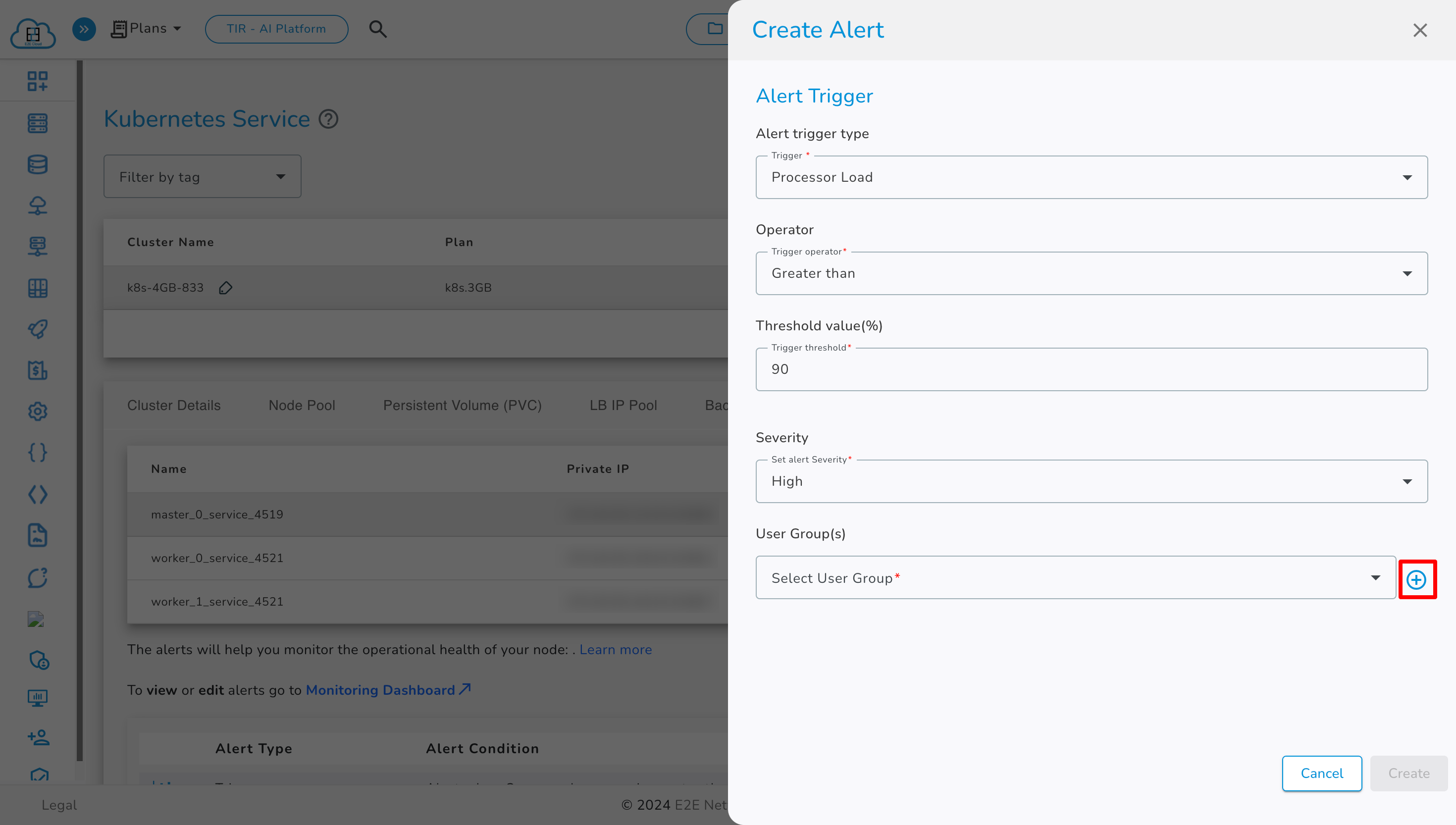

Next, select Trigger Operator.

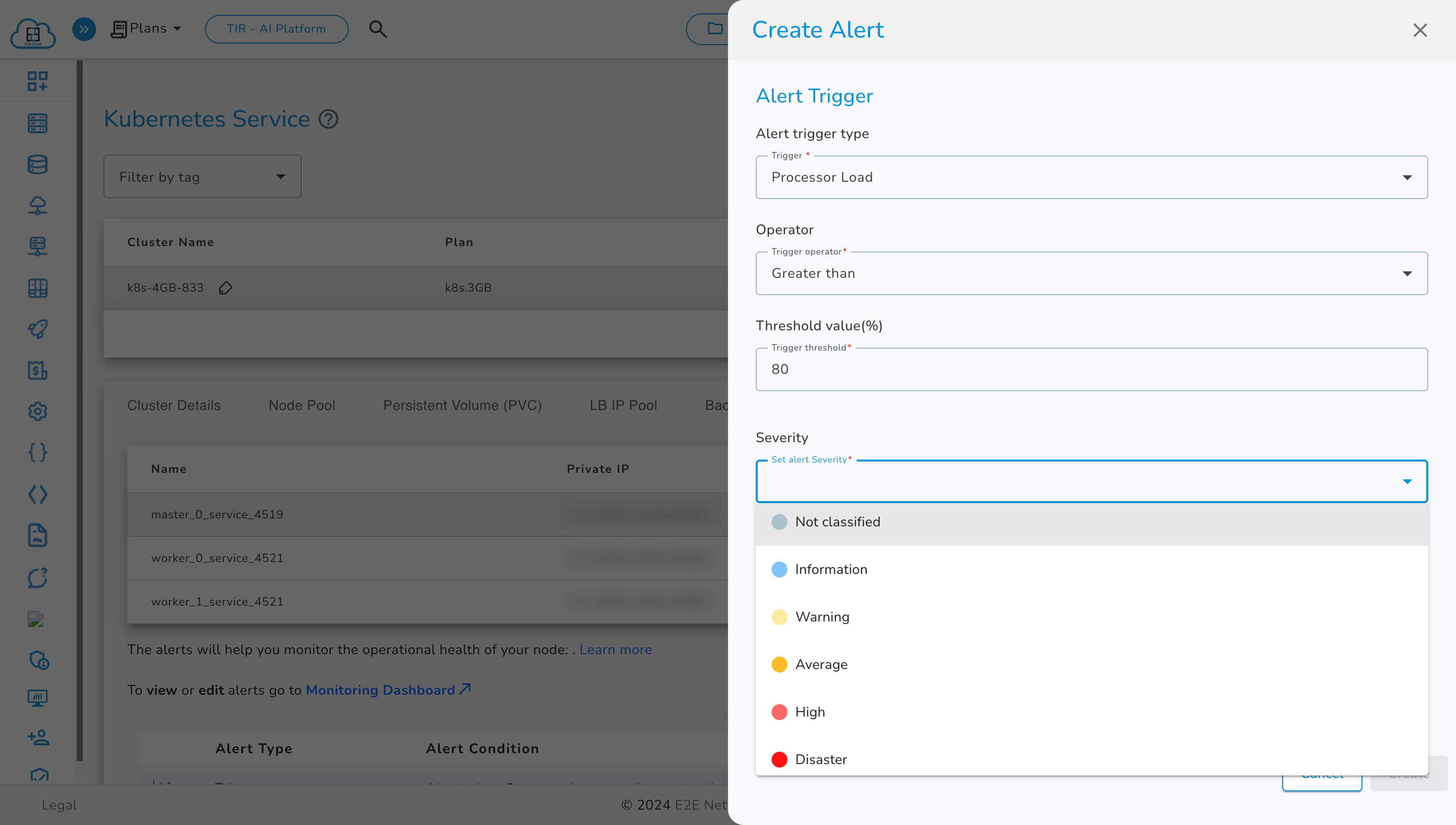

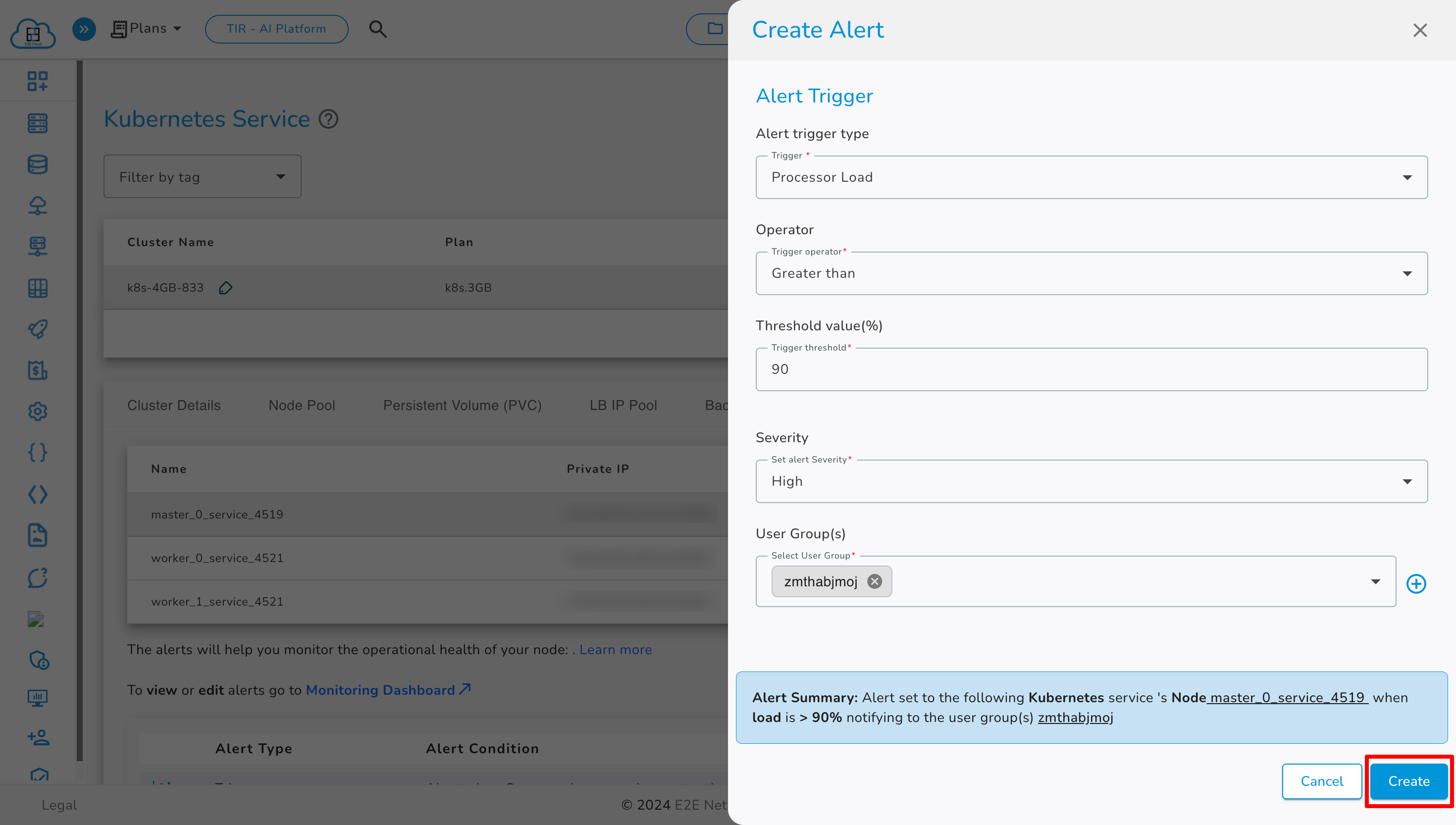

Define the Threshold Value (%). This threshold value will be validated, and if the condition is met, an alert event is triggered.
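The threshold check can be pictured as a comparison between a metric sample and the threshold using the chosen Trigger Operator. This is a conceptual sketch only; the operator symbols >, >=, < and <= are assumed and may not match the options MyAccount lists, and the function name is illustrative.

```shell
# Conceptual sketch of evaluating one alert rule.
# $1 metric value (%), $2 operator, $3 threshold (%). Prints "alert" or "ok".
evaluate_alert() {
  awk -v v="$1" -v op="$2" -v t="$3" 'BEGIN {
    v += 0; t += 0                      # force numeric comparison
    if      (op == ">")  hit = (v >  t)
    else if (op == ">=") hit = (v >= t)
    else if (op == "<")  hit = (v <  t)
    else if (op == "<=") hit = (v <= t)
    else { print "unknown operator: " op; exit 2 }
    print (hit ? "alert" : "ok")
  }'
}
```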

After entering the threshold value, select Severity.

Select the User Group you want to send the alert to. For more information about user groups, Click Here.

To create a new user group, click the '+' button. The rest of the process is the same as described in the Node Monitoring section. To learn more, Click Here.

After selecting the user group, click the 'Create' button to create the alert.

Once the alert is created, you can view it in the Alerts section.

If an alert condition is met, users in the specified user groups will receive a notification.

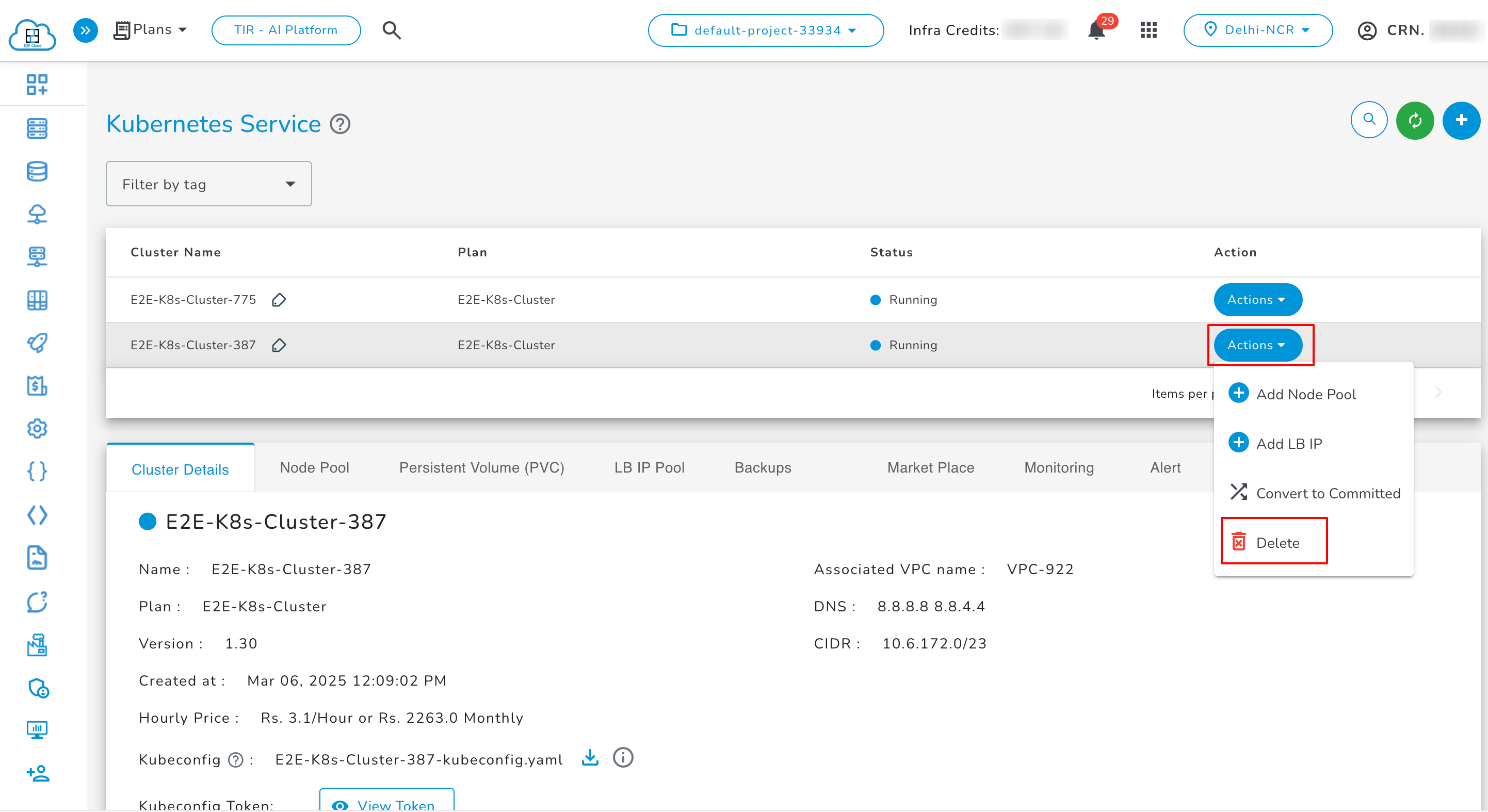

Actions

You can perform the following actions available for the respective cluster.

Add Node Pool

You can upgrade your current Kubernetes plan to a higher plan by clicking the Add Node Pool button.


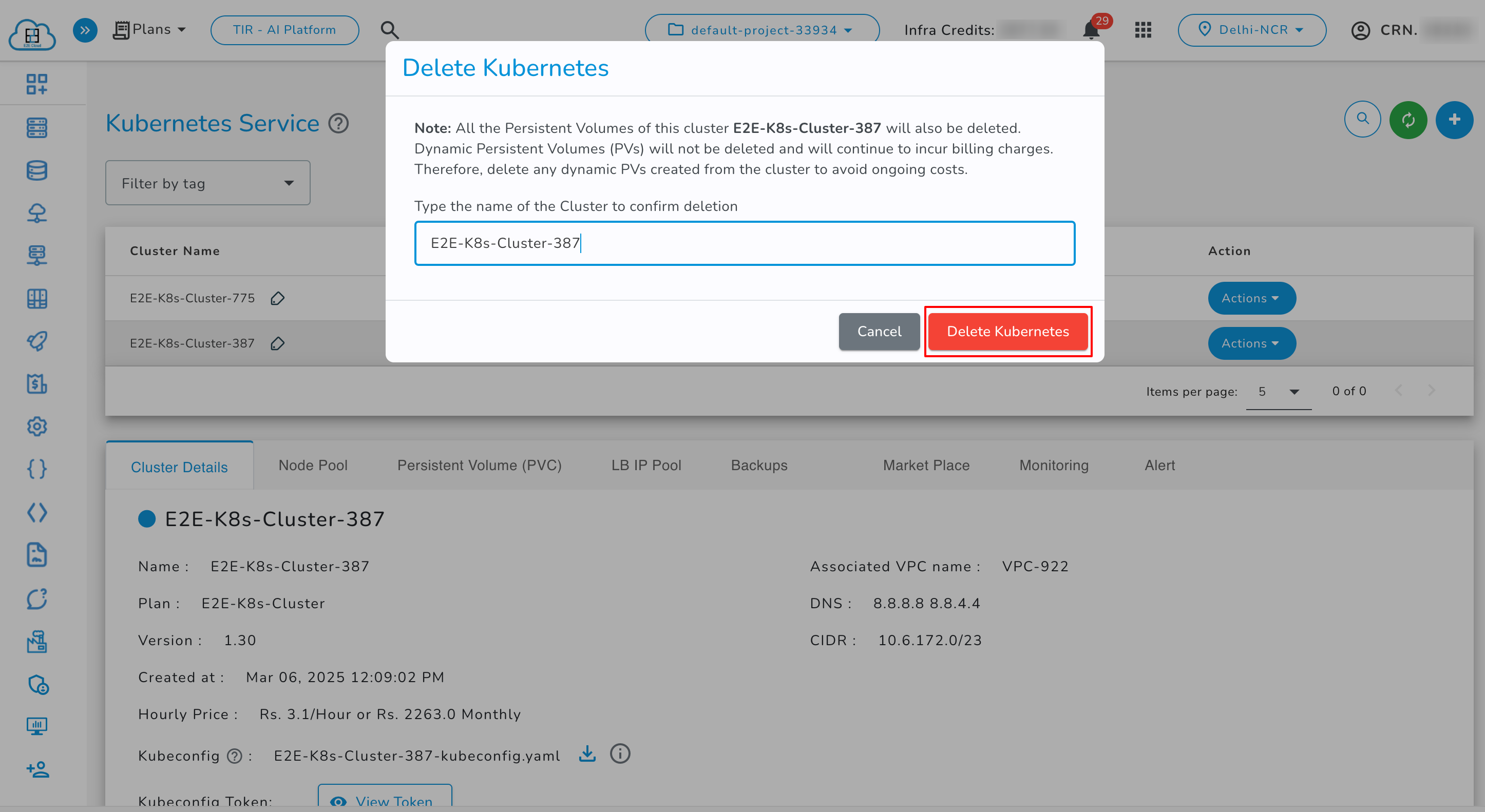

Delete Kubernetes

Click the Delete button to delete your Kubernetes cluster. Deleting a cluster deletes all the data on it.

Create a Secret for Container Registry

Secrets

A Secret is an object that contains a small amount of sensitive data such as a password, a token, or a key. Such information might otherwise be put in a Pod specification or in a container image. Using a Secret means that you don't need to include confidential data in your application code.

Create Secrets

To create a Docker registry secret, use the following command:

```shell
# Create a docker-registry secret named name-secrets for the E2E registry
kubectl create secret docker-registry name-secrets \
  --docker-username=username \
  --docker-password=pass1234 \
  --docker-server=registry.e2enetworks.net
```
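The command above puts the password directly on the command line, where it ends up in your shell history. A small variation, sketched under the assumption that you keep the credentials in the environment variables REGISTRY_USER and REGISTRY_PASSWORD (names chosen for this example), avoids that. The password is still passed to kubectl as an argument, so other local users may see it in the process list while the command runs.

```shell
# Sketch: create the registry secret from environment variables.
# KUBECTL defaults to kubectl; set KUBECTL=echo to preview the command.
create_registry_secret() {
  if [ -z "${REGISTRY_USER:-}" ] || [ -z "${REGISTRY_PASSWORD:-}" ]; then
    echo "set REGISTRY_USER and REGISTRY_PASSWORD first" >&2
    return 1
  fi
  ${KUBECTL:-kubectl} create secret docker-registry name-secrets \
    --docker-server=registry.e2enetworks.net \
    --docker-username="$REGISTRY_USER" \
    --docker-password="$REGISTRY_PASSWORD"
}
```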

Create a Pod that uses container registry Secret

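```shell
# Write a Pod that pulls its image from the private registry using name-secrets
cat > private-reg-pod-example.yaml << EOF
apiVersion: v1
kind: Pod
metadata:
  name: node-hello
spec:
  containers:
    - name: node-hello-container
      image: registry.e2enetworks.net/vipin-repo/node-hello@sha256:bd333665069e66b11dbb76444ac114a1e0a65ace459684a5616c0429aa4bf519
  imagePullSecrets:
    - name: name-secrets
EOF

# Create the Pod
kubectl apply -f private-reg-pod-example.yaml
```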
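Instead of listing imagePullSecrets in every Pod, you can attach the secret to a namespace's default ServiceAccount; Kubernetes then adds it to Pods that run under that account. This is a sketch using kubectl patch. The function name is illustrative, and the patch may replace any pull secrets the account already lists, so check it first with kubectl get serviceaccount default -o yaml.

```shell
# Sketch: make name-secrets the default pull secret for a namespace.
# $1 namespace (default: "default"), $2 secret name (default: name-secrets).
# KUBECTL defaults to kubectl; set KUBECTL=echo to preview the command.
use_secret_by_default() {
  local ns=${1:-default} secret=${2:-name-secrets}
  ${KUBECTL:-kubectl} patch serviceaccount default -n "$ns" \
    -p "{\"imagePullSecrets\": [{\"name\": \"$secret\"}]}"
}
```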