How to Leverage E2E Object Storage (EOS) for your TensorFlow Projects

Introduction

E2E Object Store (EOS) lets you use high-performance object storage in the design of your AI/ML pipelines. It is a simple, cost-effective, S3-compatible storage service that enables you to store, back up, and archive large amounts of content for your web apps or data for your AI/ML pipelines. Our S3-compatible REST API enables data access from anywhere on the internet or within your private network.

One of the frameworks that has emerged as an industry standard is Google’s TensorFlow. It is highly versatile: you can get started quickly and write simple models with its Keras API. If you seek a more advanced approach, TensorFlow also allows you to construct your own machine learning models using low-level APIs. Whichever strategy you choose, TensorFlow optimizes your model for the infrastructure you select, whether CPUs, GPUs, or TPUs.

TensorFlow supports reading and writing data to object storage. EOS exposes the S3 API, which is nearly ubiquitous, and can help in situations where data must be accessed by multiple actors, such as in distributed training.

This document guides you through the required setup and provides usage examples. Download the dataset to your local system or cloud server, then upload it to EOS using the MinIO Client (mc).

Installation

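Here we choose an example dataset and upload it to EOS. The commands below assume that the MinIO Client (mc) is installed and that an alias named e2e has already been configured for your EOS account.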

#curl -O http://ai.stanford.edu/~amaas/data/sentiment/aclImdb_v1.tar.gz

#mc mb e2e/datasets

#mc cp aclImdb_v1.tar.gz e2e/datasets/

The dataset can then be downloaded from EOS using the MinIO Python Client SDK, which is available as minio-py.

The MinIO Python Client SDK provides simple APIs to access any S3 compatible object storage server.
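As a rough illustration of those APIs, the sketch below lists the objects in a bucket, which is one way to confirm that the upload succeeded. The helper name `list_object_names` is made up for this example. It only assumes a client object that offers minio-py's `list_objects` method, so it is run here against a small stand-in client rather than a live EOS endpoint.

```python
from types import SimpleNamespace


def list_object_names(client, bucket):
    """Return the sorted names of all objects stored in `bucket`.

    `client` is expected to behave like a minio.Minio instance:
    list_objects() yields items that expose an `object_name` attribute.
    """
    return sorted(obj.object_name
                  for obj in client.list_objects(bucket, recursive=True))


class FakeClient:
    """Stand-in for minio.Minio so the sketch runs without network access."""

    def __init__(self, contents):
        self._contents = contents  # maps bucket name -> list of object names

    def list_objects(self, bucket, prefix=None, recursive=False):
        for name in self._contents.get(bucket, []):
            yield SimpleNamespace(object_name=name)


demo_client = FakeClient({"datasets": ["aclImdb_v1.tar.gz"]})
print(list_object_names(demo_client, "datasets"))
```

With the real SDK, `demo_client` would be replaced by a `Minio(...)` client built from your EOS endpoint and keys.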

Requirements

Python 3.6 or higher (the example script uses f-strings)

TensorBoard

TensorFlow

TensorFlow Hub

Object Storage

Options to install the MinIO Python client SDK

Download from pip

#pip install minio

Download from pip3

#pip3 install minio

Download from source

#git clone https://github.com/minio/minio-py

#cd minio-py

#python setup.py install
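After installing, it can be useful to confirm that the packages are importable before running the pipeline. The following is a minimal sketch using only the standard library. The helper name `missing_packages` is hypothetical, and the list of import names (`minio`, `tensorflow`, `tensorflow_hub`, `tensorboard`) is an assumption based on the requirements listed in this document.

```python
import importlib.util


def missing_packages(module_names):
    """Return the module names from `module_names` that cannot be imported."""
    return [name for name in module_names
            if importlib.util.find_spec(name) is None]


# Import names of the packages this tutorial relies on (assumed list).
required = ["minio", "tensorflow", "tensorflow_hub", "tensorboard"]

missing = missing_packages(required)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages are installed.")
```

The check only looks up top-level module names, so it does not verify package versions.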

Configurations

Now, let's write an example script, sample-minio-pipeline.py, that downloads the dataset and uncompresses it under /tmp.

This tutorial shows only part of the code, to illustrate how to use the MinIO Python client SDK with the Python-based TensorFlow framework to connect to EOS. You can apply the same approach to full-size code in production.

import tensorflow as tf
import tensorflow_hub as hub
from minio import Minio
# minio-py 7.x raises S3Error; releases before 7.0 used ResponseError instead.
from minio.error import S3Error

random_seed = 44
batch_size = 128
datasets_bucket = 'datasets'
preprocessed_data_folder = 'preprocessed-data'
tf_record_file_size = 500

# Set the random seed
tf.random.set_seed(random_seed)

# How to access MinIO
minio_address = 'objectstore.e2enetworks.net'
minio_access_key = 'xxxxxxxxxxxxxxxx'
minio_secret_key = 'xxxxxxxxxxxxxxxxxxxxxxxxxxxx'

# Initialize minioClient with an endpoint and access/secret keys.
minioClient = Minio(minio_address,
                    access_key=minio_access_key,
                    secret_key=minio_secret_key,
                    secure=True)

# Download the dataset archive from the bucket to /tmp/dataset.tar.gz
try:
    minioClient.fget_object(
        datasets_bucket,
        'aclImdb_v1.tar.gz',
        '/tmp/dataset.tar.gz')
except S3Error as err:
    print(err)

# Now let's uncompress the dataset to a temporary folder (/tmp/datasets/) to preprocess our data
import glob
import tarfile

extract_folder = f'/tmp/{datasets_bucket}/'
with tarfile.open("/tmp/dataset.tar.gz", "r:gz") as tar:
    tar.extractall(path=extract_folder)

If you run the Python script sample-minio-pipeline.py, the dataset is downloaded and uncompressed under /tmp.

# python sample-minio-pipeline.py
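The script above places the access and secret keys directly in the source file. One possible alternative, sketched here, is to read them from environment variables. The variable names `EOS_ENDPOINT`, `EOS_ACCESS_KEY`, and `EOS_SECRET_KEY` and the helper `load_eos_settings` are hypothetical and not part of EOS or minio-py.

```python
import os


def load_eos_settings(env=None):
    """Read EOS connection settings from environment variables.

    The variable names are hypothetical; pick whatever fits your deployment.
    """
    env = os.environ if env is None else env
    settings = {
        "endpoint": env.get("EOS_ENDPOINT", "objectstore.e2enetworks.net"),
        "access_key": env.get("EOS_ACCESS_KEY"),
        "secret_key": env.get("EOS_SECRET_KEY"),
    }
    # Fail early with a clear message instead of a later authentication error.
    missing = [key for key, value in settings.items() if not value]
    if missing:
        raise ValueError("Missing EOS settings: " + ", ".join(missing))
    return settings


# Demonstration with an explicit mapping instead of the real environment.
demo = load_eos_settings({"EOS_ACCESS_KEY": "example-access",
                          "EOS_SECRET_KEY": "example-secret"})
print(demo["endpoint"])
```

The returned values could then be passed to the `Minio(...)` constructor in place of the hard-coded strings.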

Next, you can start building the further stages of the machine learning pipeline, such as pre-processing and training with TensorFlow, fetching data from and sending data to object storage. Depending on your code flow, you can adapt the format shown above to access your EOS storage buckets.
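As one possible starting point for such a stage, the sketch below gathers labelled review files from an extracted split and uploads a folder of results back to a bucket. It assumes the archive unpacks to an aclImdb folder whose train and test splits contain pos and neg subfolders, so with the script above the training split would sit under /tmp/datasets/aclImdb/train. The helper names `collect_reviews` and `upload_folder` and the `RecordingClient` stand-in are hypothetical; only `fput_object` is a minio-py method.

```python
import glob
import os
import tempfile


def collect_reviews(split_dir):
    """Return (file_path, label) pairs for one split of the extracted dataset.

    Assumes <split_dir>/pos and <split_dir>/neg hold one text file per review.
    Label 1 marks a positive review, 0 a negative one.
    """
    examples = []
    for folder, label in (("pos", 1), ("neg", 0)):
        pattern = os.path.join(split_dir, folder, "*.txt")
        examples.extend((path, label) for path in sorted(glob.glob(pattern)))
    return examples


def upload_folder(client, bucket, folder, prefix):
    """Upload every file directly inside `folder` under `prefix` in `bucket`.

    `client` is expected to provide minio-py's fput_object(bucket, name, path).
    """
    uploaded = []
    for name in sorted(os.listdir(folder)):
        path = os.path.join(folder, name)
        if os.path.isfile(path):
            object_name = f"{prefix}/{name}"
            client.fput_object(bucket, object_name, path)
            uploaded.append(object_name)
    return uploaded


class RecordingClient:
    """Stand-in for minio.Minio that only records upload requests."""

    def __init__(self):
        self.calls = []

    def fput_object(self, bucket, object_name, file_path):
        self.calls.append((bucket, object_name, file_path))


# Small self-contained demonstration using a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    for folder in ("pos", "neg"):
        os.makedirs(os.path.join(tmp, "train", folder))
    with open(os.path.join(tmp, "train", "pos", "0_9.txt"), "w") as f:
        f.write("A great film.")
    with open(os.path.join(tmp, "train", "neg", "1_2.txt"), "w") as f:
        f.write("A dull film.")

    reviews = collect_reviews(os.path.join(tmp, "train"))
    labels = [label for _, label in reviews]

    demo_client = RecordingClient()
    uploaded = upload_folder(demo_client, "datasets",
                             os.path.join(tmp, "train", "pos"),
                             "preprocessed-data")

print(labels)
print(uploaded)
```

In a real pipeline the stand-in would be replaced by the `minioClient` object from the script, and the (path, label) pairs could feed whatever pre-processing your TensorFlow code performs.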

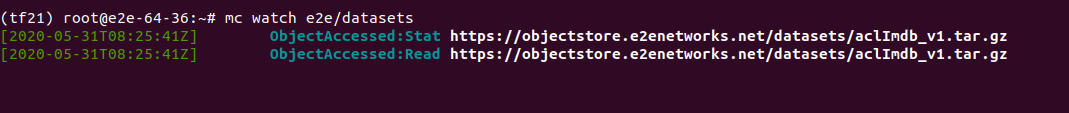

Monitoring Object Notification Events

By running a single command, we can start monitoring the data flow between TensorFlow and EOS.

# mc watch e2e/datasets
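If you would rather react to these events from Python, the payloads can be reduced to the few fields that usually matter. The sketch below assumes the S3 event notification layout (a "Records" list with "eventName" and an "s3" section). The helper `summarize_event` is hypothetical and the sample payload is hand-written for illustration, not captured from EOS.

```python
from urllib.parse import unquote_plus


def summarize_event(event):
    """Return (event_name, bucket, key) tuples for one notification payload.

    Assumes the S3 event notification layout: a "Records" list whose entries
    carry "eventName" and an "s3" section with bucket and object details.
    """
    summary = []
    for record in event.get("Records", []):
        s3_info = record.get("s3", {})
        bucket = s3_info.get("bucket", {}).get("name")
        # Object keys are URL-encoded in S3-style notifications.
        key = unquote_plus(s3_info.get("object", {}).get("key", ""))
        summary.append((record.get("eventName"), bucket, key))
    return summary


# Hand-written sample payload for illustration only.
sample_event = {
    "Records": [
        {
            "eventName": "s3:ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "datasets"},
                "object": {"key": "aclImdb_v1.tar.gz"},
            },
        }
    ]
}

for name, bucket, key in summarize_event(sample_event):
    print(name, bucket, key)
```

minio-py offers a `listen_bucket_notification` method that can supply such payloads. Whether your EOS account delivers notifications to it is an assumption you should verify before relying on it.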

Conclusion

By separating storage and compute, one can build a framework that is not dependent on local resources, allowing you to run it in a container inside Kubernetes. This adds considerable flexibility. I hope that this tutorial is useful! For more details, refer to the E2E Object Storage (EOS) documentation.